Configuration Details:

Operating System: Red Hat Enterprise Linux 8.4 (64-bit)

Oracle Grid Software version: 21.0.0.0 with latest patches

Cluster Node Details:

- rac1.localdomain

- rac2.localdomain

- rac3.localdomain (the node to be added to the existing RAC cluster)

High-level steps to perform on the node being added (rac3.localdomain) before running the add-node operation, assuming the server is already built:

Note:

I cloned the existing rac1 machine to create rac3, so I made the changes below on rac3 before adding it to the cluster. A cloned machine still carries the cluster configuration files of the source node, and these must be removed from rac3; the steps below do that. If you are starting from a fresh OS installation instead, refer to "https://rupeshanantghubade.blogspot.com/2022/08/how-to-install-and-configure-oracle-21c.html" for the OS, network, kernel, limits and oracleasm settings.

- Ensure a certified OS version is installed on the new node.

- Ensure the OS and kernel versions are the same across all nodes.

- Install the same required RPMs as on Node1 and Node2 (already present, since this is a cloned machine).

- Add the network/IP details to the /etc/hosts file on all three nodes.

- Add the kernel and limits parameters to the configuration files (already present, since this is a cloned machine).

- Create the users and groups and grant permissions (already present, since this is a cloned machine).

- Create the directory structure.

- Configure oracleasm (already configured, since this is a cloned machine).

- Configure SSH (passwordless) authentication for the grid user.

- Run runcluvfy.sh, review its output, and resolve any failed prerequisites.

- Start the add-node GUI from an existing node such as rac1 or rac2.

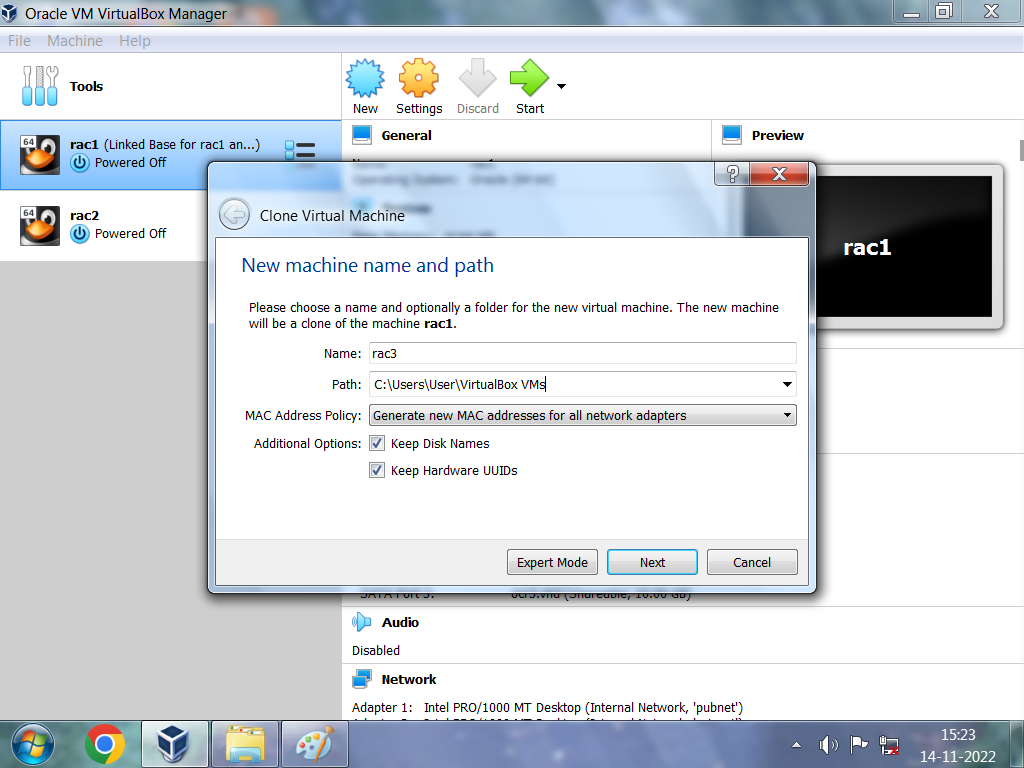

Step 1: Clone the existing rac1 machine to create the new third node, rac3.

Ensure the rac1 machine is shut down before starting the clone process.

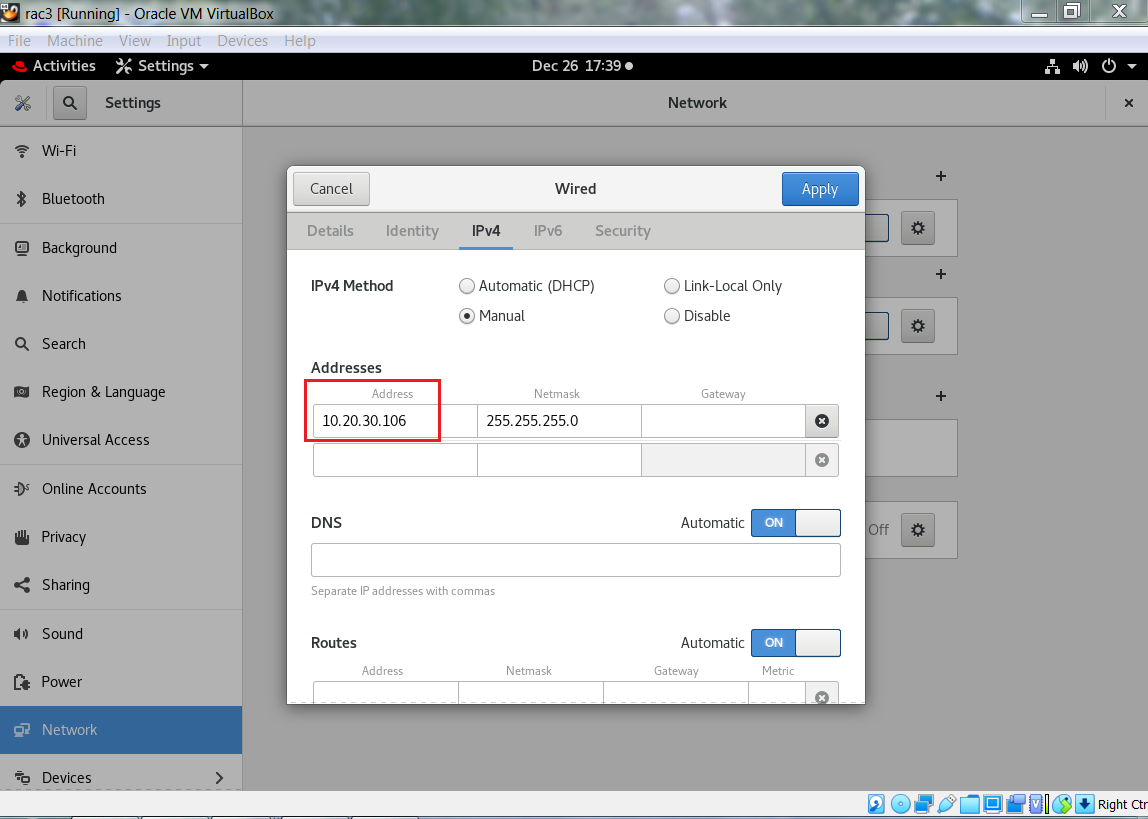

Add the following public, private, VIP and SCAN addresses to the /etc/hosts file on all three nodes:

[root@rac3 bin]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#Public IP

10.20.30.101 rac1.localdomain rac1

10.20.30.102 rac2.localdomain rac2

10.20.30.106 rac3.localdomain rac3

#Private IP

10.1.2.201 rac1-priv.localdomain rac1-priv

10.1.2.202 rac2-priv.localdomain rac2-priv

10.1.2.203 rac3-priv.localdomain rac3-priv

#VIP IP

10.20.30.103 rac1-vip.localdomain rac1-vip

10.20.30.104 rac2-vip.localdomain rac2-vip

10.20.30.107 rac3-vip.localdomain rac3-vip

#scan IP

10.20.30.105 rac-scan.localdomain rac-scan

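Also update /etc/hostname on rac3: after cloning it still holds rac1's host name and must be changed to rac3.localdomain (for example, with hostnamectl set-hostname rac3.localdomain).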
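Before moving on, it can help to confirm that every cluster name is present in /etc/hosts on each node. This is a minimal sketch, not part of the original procedure: lookup_host and check_hosts_file are hypothetical helper names, and the host names in the example follow this post's naming.

```bash
#!/usr/bin/env bash
# Sketch: confirm that each cluster host name appears in a hosts file.
# Hypothetical helpers; the example host names follow this post's naming.

# Usage: lookup_host NAME [HOSTS_FILE]
# Prints the IP address mapped to NAME (first match; '#' comments are ignored).
lookup_host() {
    local name="$1" hosts_file="${2:-/etc/hosts}"
    awk -v n="$name" '
        { sub(/#.*/, "") }
        NF >= 2 { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
    ' "$hosts_file"
}

# Usage: check_hosts_file HOSTS_FILE NAME...
# Prints "NAME -> IP" for each name found; returns 1 if any name is missing.
check_hosts_file() {
    local hosts_file="$1" rc=0 name ip
    shift
    for name in "$@"; do
        ip="$(lookup_host "$name" "$hosts_file")"
        if [ -n "$ip" ]; then
            echo "$name -> $ip"
        else
            echo "MISSING: $name"
            rc=1
        fi
    done
    return $rc
}

# Example, on each of rac1, rac2 and rac3:
# check_hosts_file /etc/hosts rac1 rac2 rac3 rac1-priv rac2-priv rac3-priv \
#     rac1-vip rac2-vip rac3-vip rac-scan
```

Running the same check on all three nodes makes it easy to spot a hosts file that was not updated everywhere.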

Step 2: Delete the files and directories below. Because rac3 is a cloned server, it still contains the existing cluster configuration files, which must be deleted before the node is added.

- On rac1, the node from which the add-node operation will be run, delete the cluster audit logs, trace (.trc) and .trm files, listener logs, and any software setup files located in the GI home. If there are many such files, copying them from the source node to the target can take a long time.

I have faced this issue in the past: there were around 50k trace and .trm files, and the copy cost me more than 4 to 5 hours.

[grid@rac1 trace]$ pwd

/u01/app/grid/diag/crs/rac1/crs/trace

[grid@rac1 trace]$ rm -rf *.trc *.trm

[grid@rac1 rdbms]$ cd /u01/app/21.0.0/grid/rdbms/audit/

[grid@rac1 audit]$ ll

total 40

-rw-r----- 1 grid oinstall 844 Jun 24 16:14 +ASM1_ora_33571_20220624161407050637568856.aud

-rw-r----- 1 grid oinstall 852 Jun 24 16:14 +ASM1_ora_33571_20220624161407051626800084.aud

-rw-r----- 1 grid oinstall 844 Jun 24 16:14 +ASM1_ora_33572_20220624161407131271781772.aud

-rw-r----- 1 grid oinstall 844 Jun 24 16:14 +ASM1_ora_33585_20220624161407294565161918.aud

-rw-r----- 1 grid oinstall 844 Jun 24 16:16 +ASM1_ora_37601_20220624161635684490768555.aud

-rw-r----- 1 grid oinstall 842 Jul 9 05:46 +ASM1_ora_6049_20220709054601207552702266.aud

-rw-r----- 1 grid oinstall 842 Nov 14 17:51 +ASM1_ora_6439_20221114175123047994367218.aud

-rw-r----- 1 grid oinstall 842 Nov 14 17:09 +ASM1_ora_8096_20221114170910515442540237.aud

-rw-r----- 1 grid oinstall 842 Nov 14 16:45 +ASM1_ora_8758_20221114164507337283356084.aud

-rw-r----- 1 grid oinstall 842 Jun 24 16:14 null_ora_33545_20220624161406369008105598.aud

[grid@rac1 audit]$ pwd

/u01/app/21.0.0/grid/rdbms/audit
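Deleting every trace and audit file with rm -rf is the simplest approach. If you would rather keep recent files, a more selective sketch is to list, and then delete, only files older than a given number of days with find. This assumes GNU find (for -maxdepth and -delete); the helper names and the 7-day age in the example are illustrative assumptions, not part of the original procedure.

```bash
#!/usr/bin/env bash
# Sketch: list or remove old .trc, .trm and .aud files in one directory.
# Hypothetical helpers; assumes GNU find.

# Usage: old_diag_files DIR DAYS [extra find actions...]
# Lists matching files directly in DIR (not subdirectories) older than DAYS days.
old_diag_files() {
    local dir="$1" days="$2"
    shift 2
    find "$dir" -maxdepth 1 -type f \
        \( -name '*.trc' -o -name '*.trm' -o -name '*.aud' \) \
        -mtime +"$days" "$@"
}

# Usage: purge_old_diag_files DIR [DAYS]
# Reports how many files match, then deletes them.
purge_old_diag_files() {
    local dir="$1" days="${2:-7}" count
    # Arithmetic expansion strips any padding that wc may add
    count=$(( $(old_diag_files "$dir" "$days" | wc -l) ))
    echo "deleting $count file(s) older than $days day(s) from $dir"
    old_diag_files "$dir" "$days" -delete
}

# Example, as grid on rac1: list first, then delete.
# old_diag_files /u01/app/grid/diag/crs/rac1/crs/trace 7
# purge_old_diag_files /u01/app/grid/diag/crs/rac1/crs/trace 7
# purge_old_diag_files /u01/app/21.0.0/grid/rdbms/audit 7
```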

- Delete the following RAC-related configuration files from rac3:

[root@rac3 ~]# rm -rf /etc/oracle

[root@rac3 ~]# rm -rf /var/tmp/.oracle

[root@rac3 ~]# rm -rf /tmp/.oracle

[root@rac3 ~]# rm -rf /etc/init.d/*has*

[root@rac3 ~]# rm -rf /etc/rc*.d/*has*

[root@rac3 ~]# rm -rf /etc/oratab

[root@rac3 ~]# rm -rf /etc/oraInst.loc

[root@rac3 ~]# rm -rf /usr/local/bin/dbhome

[root@rac3 ~]# rm -rf /usr/local/bin/oraenv

[root@rac3 ~]# rm -rf /usr/local/bin/coraenv

[root@rac3 ~]# rm -rf /u01

Verify that all of these files and directories have been deleted:

[root@rac3 ~]# ls -ld /etc/oracle

ls: cannot access '/etc/oracle': No such file or directory

[root@rac3 ~]# ls -ld /var/tmp/.oracle

ls: cannot access '/var/tmp/.oracle': No such file or directory

[root@rac3 ~]# ls -ld /tmp/.oracle

ls: cannot access '/tmp/.oracle': No such file or directory

[root@rac3 ~]# ls -ld /etc/init.d/*has*

ls: cannot access '/etc/init.d/*has*': No such file or directory

[root@rac3 ~]# ls -ld /etc/rc*.d/*has*

ls: cannot access '/etc/rc*.d/*has*': No such file or directory

[root@rac3 ~]# ls -ld /etc/oratab

ls: cannot access '/etc/oratab': No such file or directory

[root@rac3 ~]# ls -ld /etc/oraInst.loc

ls: cannot access '/etc/oraInst.loc': No such file or directory

[root@rac3 ~]# ls -ld /usr/local/bin/dbhome

ls: cannot access '/usr/local/bin/dbhome': No such file or directory

[root@rac3 ~]# ls -ld /usr/local/bin/oraenv

ls: cannot access '/usr/local/bin/oraenv': No such file or directory

[root@rac3 ~]# ls -ld /usr/local/bin/coraenv

ls: cannot access '/usr/local/bin/coraenv': No such file or directory

[root@rac3 ~]# ls -ld /u01

ls: cannot access '/u01': No such file or directory
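Checking each path by hand works, but a small loop makes the check repeatable. In this sketch, leftover_paths is a hypothetical helper; glob patterns are passed quoted so the function expands them itself, and it assumes the paths contain no spaces (true for the paths above).

```bash
#!/usr/bin/env bash
# Sketch: report which of the given paths or glob patterns still exist.
# Hypothetical helper; assumes paths without spaces.

# Usage: leftover_paths PATTERN...
# Prints every existing match; returns 0 only if nothing is left.
leftover_paths() {
    local pattern path rc=0
    for pattern in "$@"; do
        # Unquoted on purpose so globs such as /etc/init.d/*has* are expanded;
        # an unmatched pattern stays literal and fails the existence test.
        for path in $pattern; do
            if [ -e "$path" ] || [ -L "$path" ]; then
                echo "$path"
                rc=1
            fi
        done
    done
    return $rc
}

# Example, as root on rac3 after the rm commands above:
# leftover_paths /etc/oracle /var/tmp/.oracle /tmp/.oracle '/etc/init.d/*has*' \
#     '/etc/rc*.d/*has*' /etc/oratab /etc/oraInst.loc /usr/local/bin/dbhome \
#     /usr/local/bin/oraenv /usr/local/bin/coraenv /u01 && echo "all removed"
```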

Create the following directories and set their ownership and permissions:

[root@rac3 grid]# cd /

[root@rac3 /]# mkdir -p /u01/app/21.0.0/grid

[root@rac3 /]# mkdir -p /u01/app/grid

[root@rac3 /]# mkdir -p /u01/app/oraInventory

[root@rac3 /]# chown -R grid:oinstall /u01/app/21.0.0/grid

[root@rac3 /]# chown -R grid:oinstall /u01/app/grid

[root@rac3 /]# chown -R grid:oinstall /u01/app/oraInventory

[root@rac3 /]# chmod -R 755 /u01/app/21.0.0/grid

[root@rac3 /]# chmod -R 755 /u01/app/grid

[root@rac3 /]# chmod -R 755 /u01/app/oraInventory
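The same result can be had with a single install -d command. Per the GNU coreutils documentation, install -d creates any missing parent directories with default attributes and then sets the given owner, group and mode on each listed directory, which matches the mkdir/chown/chmod sequence above for new, empty directories. In this sketch, create_gi_dirs is a hypothetical helper; the base path, owner and group are parameters only so it can be tried outside /u01.

```bash
#!/usr/bin/env bash
# Sketch: create the Grid Infrastructure directories in one pass.
# Hypothetical helper; defaults match the paths and owner used in this post.

# Usage: create_gi_dirs [BASE] [OWNER] [GROUP]
create_gi_dirs() {
    local base="${1:-/u01/app}" owner="${2:-grid}" group="${3:-oinstall}"
    # -d creates the listed directories (and missing parents with default
    # attributes); -o/-g/-m are applied to each listed directory.
    install -d -o "$owner" -g "$group" -m 755 \
        "$base/21.0.0/grid" "$base/grid" "$base/oraInventory"
}

# Example, as root on rac3:
# create_gi_dirs /u01/app grid oinstall
```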

Step 3: Check the cluster status and the readiness of the third node to be added.

[grid@rac1 ~]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[grid@rac2 ~]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online
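To check the stack without reading each line, the output of crsctl check crs can be piped into a small filter that looks for the four "is online" messages shown above. This is a sketch: crs_stack_online is a hypothetical helper, and it assumes the CRS-4638/4537/4529/4533 messages printed by this release. Running crsctl check cluster -all from one node is another way to see the CRS, CSS and EVM status of every node at once.

```bash
#!/usr/bin/env bash
# Sketch: decide from "crsctl check crs" output whether the local stack is up.
# Hypothetical helper; the message codes are the ones shown in this post.

# Usage: crsctl check crs | crs_stack_online
# Prints each service that is not reported online; returns 0 only if all are.
crs_stack_online() {
    local out code rc=0
    out="$(cat)"
    for code in CRS-4638 CRS-4537 CRS-4529 CRS-4533; do
        if ! printf '%s\n' "$out" | grep -q "^${code}:.*is online"; then
            echo "not online: $code"
            rc=1
        fi
    done
    return $rc
}

# Example, as grid on rac1 and rac2:
# crsctl check crs | crs_stack_online && echo "CRS stack is healthy"
```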

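The VIP of the node to be added must not respond to ping at this stage: Clusterware brings the VIP online during the add-node operation, and if the address is already in use you will get an "IPs already assigned" error. The public and private IPs, in contrast, should be reachable from every node, as the output below shows.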

[root@rac1 ~]# grep -i "rac3" /etc/hosts

10.20.30.106 rac3.localdomain rac3

10.1.2.203 rac3-priv.localdomain rac3-priv

10.20.30.107 rac3-vip.localdomain rac3-vip

[root@rac1 ~]# ping rac3.localdomain

PING rac3.localdomain (10.20.30.106) 56(84) bytes of data.

64 bytes from rac3.localdomain (10.20.30.106): icmp_seq=1 ttl=64 time=0.041 ms

64 bytes from rac3.localdomain (10.20.30.106): icmp_seq=2 ttl=64 time=0.159 ms

^C

--- rac3.localdomain ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1041ms

rtt min/avg/max/mdev = 0.041/0.100/0.159/0.059 ms

[root@rac1 ~]# ping rac3-priv.localdomain

PING rac3-priv.localdomain (10.1.2.203) 56(84) bytes of data.

64 bytes from rac3-priv.localdomain (10.1.2.203): icmp_seq=1 ttl=64 time=1.99 ms

^C

--- rac3-priv.localdomain ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.986/1.986/1.986/0.000 ms

[root@rac1 ~]# ping rac3-vip.localdomain

PING rac3-vip.localdomain (10.20.30.107) 56(84) bytes of data.

From rac3.localdomain (10.20.30.106) icmp_seq=1 Destination Host Unreachable

From rac3.localdomain (10.20.30.106) icmp_seq=2 Destination Host Unreachable

From rac3.localdomain (10.20.30.106) icmp_seq=3 Destination Host Unreachable

^C

--- rac3-vip.localdomain ping statistics ---

4 packets transmitted, 0 received, +3 errors, 100% packet loss, time 3078ms

pipe 4

[grid@rac2 ~]$ grep -i "rac3" /etc/hosts

10.20.30.106 rac3.localdomain rac3

10.1.2.203 rac3-priv.localdomain rac3-priv

10.20.30.107 rac3-vip.localdomain rac3-vip

[grid@rac2 ~]$ ping rac3.localdomain

PING rac3.localdomain (10.20.30.106) 56(84) bytes of data.

64 bytes from rac3.localdomain (10.20.30.106): icmp_seq=1 ttl=64 time=1.46 ms

64 bytes from rac3.localdomain (10.20.30.106): icmp_seq=2 ttl=64 time=1.01 ms

^C

--- rac3.localdomain ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 1.007/1.235/1.464/0.231 ms

[grid@rac2 ~]$ ping rac3-priv.localdomain

PING rac3-priv.localdomain (10.1.2.203) 56(84) bytes of data.

64 bytes from rac3-priv.localdomain (10.1.2.203): icmp_seq=1 ttl=64 time=0.519 ms

64 bytes from rac3-priv.localdomain (10.1.2.203): icmp_seq=2 ttl=64 time=0.848 ms

^C

--- rac3-priv.localdomain ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1064ms

rtt min/avg/max/mdev = 0.519/0.683/0.848/0.166 ms

[grid@rac2 ~]$ ping rac3-vip.localdomain

PING rac3-vip.localdomain (10.20.30.107) 56(84) bytes of data.

From rac2.localdomain (10.20.30.102) icmp_seq=1 Destination Host Unreachable

From rac2.localdomain (10.20.30.102) icmp_seq=2 Destination Host Unreachable

From rac2.localdomain (10.20.30.102) icmp_seq=3 Destination Host Unreachable

^C

--- rac3-vip.localdomain ping statistics ---

4 packets transmitted, 0 received, +3 errors, 100% packet loss, time 3100ms

pipe 4

[root@rac3 ~]# grep -i "rac3" /etc/hosts

10.20.30.106 rac3.localdomain rac3

10.1.2.203 rac3-priv.localdomain rac3-priv

10.20.30.107 rac3-vip.localdomain rac3-vip

[root@rac3 ~]# ping rac3.localdomain

PING rac3.localdomain (10.20.30.106) 56(84) bytes of data.

64 bytes from rac3.localdomain (10.20.30.106): icmp_seq=1 ttl=64 time=0.153 ms

64 bytes from rac3.localdomain (10.20.30.106): icmp_seq=2 ttl=64 time=0.070 ms

^C

--- rac3.localdomain ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1023ms

rtt min/avg/max/mdev = 0.070/0.111/0.153/0.042 ms

[root@rac3 ~]# ping rac3-priv.localdomain

PING rac3-priv.localdomain (10.1.2.203) 56(84) bytes of data.

64 bytes from rac3-priv.localdomain (10.1.2.203): icmp_seq=1 ttl=64 time=0.063 ms

64 bytes from rac3-priv.localdomain (10.1.2.203): icmp_seq=2 ttl=64 time=0.056 ms

^C

--- rac3-priv.localdomain ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1057ms

rtt min/avg/max/mdev = 0.056/0.059/0.063/0.008 ms

[root@rac3 ~]# ping rac3-vip.localdomain

PING rac3-vip.localdomain (10.20.30.107) 56(84) bytes of data.

From rac3.localdomain (10.20.30.106) icmp_seq=1 Destination Host Unreachable

From rac3.localdomain (10.20.30.106) icmp_seq=2 Destination Host Unreachable

From rac3.localdomain (10.20.30.106) icmp_seq=3 Destination Host Unreachable

^C

--- rac3-vip.localdomain ping statistics ---

4 packets transmitted, 0 received, +3 errors, 100% packet loss, time 3078ms

pipe 4
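These checks can be scripted so they are easy to repeat on every node. A minimal sketch: is_reachable and check_new_node_ips are hypothetical helper names, and the single probe with a 2-second timeout (ping -c 1 -W 2, Linux iputils) is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Sketch: reachability check for the new node's public, private and VIP names.
# Hypothetical helpers; names in the example follow this post's naming.

# Returns 0 if the host answers one ICMP echo within 2 seconds.
is_reachable() {
    ping -c 1 -W 2 "$1" >/dev/null 2>&1
}

# Usage: check_new_node_ips PUBLIC PRIVATE VIP
# Public and private names must answer; the VIP must NOT answer yet.
check_new_node_ips() {
    local pub="$1" priv="$2" vip="$3" rc=0 host
    for host in "$pub" "$priv"; do
        if is_reachable "$host"; then
            echo "OK:   $host is reachable"
        else
            echo "FAIL: $host is not reachable"
            rc=1
        fi
    done
    if is_reachable "$vip"; then
        echo "FAIL: $vip answers ping (address may already be in use)"
        rc=1
    else
        echo "OK:   $vip is not in use yet"
    fi
    return $rc
}

# Example, on each of rac1, rac2 and rac3:
# check_new_node_ips rac3.localdomain rac3-priv.localdomain rac3-vip.localdomain
```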

Step 4: Configure SSH (passwordless) authentication for the grid user.

[grid@rac3 ~]$ rm -rf .ssh

[grid@rac3 ~]$ mkdir .ssh

[grid@rac3 ~]$ chmod 700 .ssh

[grid@rac3 ~]$ cd .ssh

[grid@rac3 .ssh]$ ssh-keygen -t rsa

[grid@rac3 .ssh]$ ssh-keygen -t dsa

[grid@rac3 .ssh]$ cat *.pub >> authorized_keys.rac3

[grid@rac3 .ssh]$ ll

-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac3

-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa

-rw-r--r-- 1 grid oinstall 611 Jun 18 22:59 id_dsa.pub

-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa

-rw-r--r-- 1 grid oinstall 575 Jun 18 22:58 id_rsa.pub

From Node3:

scp authorized_keys.rac3 grid@rac1:/home/grid/.ssh/

scp authorized_keys.rac3 grid@rac2:/home/grid/.ssh/

From Node1:

scp authorized_keys.rac1 grid@rac3:/home/grid/.ssh/

From Node2:

scp authorized_keys.rac2 grid@rac3:/home/grid/.ssh/

[grid@rac1 .ssh]$ ll

total 28

-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac1

-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac2

-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:10 authorized_keys.rac3

-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa

-rw-r--r-- 1 grid oinstall 611 Jun 18 22:59 id_dsa.pub

-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa

-rw-r--r-- 1 grid oinstall 575 Jun 18 22:58 id_rsa.pub

-rw-r--r-- 1 grid oinstall 179 Jun 18 23:00 known_hosts

[grid@rac1 .ssh]$ cd $HOME/.ssh

[grid@rac1 .ssh]$ cat *.rac* >> authorized_keys

[grid@rac1 .ssh]$ chmod 600 authorized_keys

[grid@rac1 .ssh]$ ll

-rw------- 1 grid oinstall 2372 Jun 18 23:01 authorized_keys

-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac1

-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac2

-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:10 authorized_keys.rac3

-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa

-rw-r--r-- 1 grid oinstall 611 Jun 18 22:59 id_dsa.pub

-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa

-rw-r--r-- 1 grid oinstall 575 Jun 18 22:58 id_rsa.pub

-rw-r--r-- 1 grid oinstall 179 Jun 18 23:00 known_hosts

[grid@rac2 .ssh]$ cd $HOME/.ssh

[grid@rac2 .ssh]$ ll

-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac1

-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac2

-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:10 authorized_keys.rac3

-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa

-rw-r--r-- 1 grid oinstall 611 Jun 18 22:59 id_dsa.pub

-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa

-rw-r--r-- 1 grid oinstall 575 Jun 18 22:58 id_rsa.pub

-rw-r--r-- 1 grid oinstall 179 Jun 18 22:57 known_hosts

[grid@rac2 .ssh]$ cat *.rac* >> authorized_keys

[grid@rac2 .ssh]$ chmod 600 authorized_keys

[grid@rac2 .ssh]$ ll

-rw------- 1 grid oinstall 2372 Jun 18 23:01 authorized_keys

-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac1

-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac2

-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:10 authorized_keys.rac3

-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa

-rw-r--r-- 1 grid oinstall 611 Jun 18 22:59 id_dsa.pub

-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa

-rw-r--r-- 1 grid oinstall 575 Jun 18 22:58 id_rsa.pub

-rw-r--r-- 1 grid oinstall 179 Jun 18 22:57 known_hosts
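rac3 needs the same concatenation, so that its authorized_keys file holds the public keys of rac1, rac2 and rac3; otherwise rac1 and rac2 cannot log in to rac3 without a password. The sketch below merges the key files without duplicating lines when it is run more than once, then tests each connection with ssh -o BatchMode=yes, which fails instead of prompting for a password. merge_keys and check_ssh are hypothetical helpers that assume the authorized_keys.<node> naming used here. Also note that OpenSSH on RHEL 8 does not accept DSA keys by default, so the RSA key is the one actually used.

```bash
#!/usr/bin/env bash
# Sketch: merge per-node key files into authorized_keys and test SSH access.
# Hypothetical helpers; assumes files named authorized_keys.<node>.

# Usage: merge_keys [SSH_DIR]
merge_keys() {
    local ssh_dir="${1:-$HOME/.ssh}" tmp
    # Temp file is created inside SSH_DIR (not /tmp) so that, on SELinux
    # systems, the final file is labelled like the rest of ~/.ssh. Its
    # leading dot keeps it out of the authorized_keys.* glob below.
    tmp="$(mktemp "$ssh_dir/.authorized_keys.XXXXXX")" || return 1
    # Existing entries (if any) plus every per-node file, duplicates removed
    cat "$ssh_dir/authorized_keys" "$ssh_dir"/authorized_keys.* 2>/dev/null \
        | sort -u > "$tmp"
    chmod 600 "$tmp"
    mv -f "$tmp" "$ssh_dir/authorized_keys"
}

# Usage: check_ssh HOST...
check_ssh() {
    local host rc=0
    for host in "$@"; do
        # BatchMode=yes: fail instead of prompting for a password.
        # StrictHostKeyChecking=accept-new: record unknown host keys on first use.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 \
               -o StrictHostKeyChecking=accept-new "$host" true </dev/null; then
            echo "OK:   $host"
        else
            echo "FAIL: $host"
            rc=1
        fi
    done
    return $rc
}

# Example, as grid on each of rac1, rac2 and rac3:
# merge_keys && check_ssh rac1 rac2 rac3 \
#     rac1.localdomain rac2.localdomain rac3.localdomain
```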

Step 5: Run cluvfy for the nodeadd stage and fix any issues it reports.

Here I am ignoring the physical memory failure (PRVF-7530) because my test VMs have less memory than required; do not ignore it in a production environment.
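The verbose output is long, so it is easier to review if it is saved to a file and filtered. This is a sketch that assumes the output was captured with tee (the log path is only an example) and that each check ends with a summary line such as "Physical Memory ...FAILED (PRVF-7530)"; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: summarise a saved cluvfy log. Capture it first, for example:
#   ./runcluvfy.sh stage -pre nodeadd -n rac3 -verbose | tee /tmp/cluvfy_nodeadd.log
# Hypothetical helpers; the log path above is only an example.

# Usage: cluvfy_problems LOGFILE
# Prints the summary lines of checks that ended in FAILED or WARNING.
cluvfy_problems() {
    grep -E '\.\.\.(FAILED|WARNING)' "$1"
}

# Usage: cluvfy_summary LOGFILE
# Counts summary lines by result, printed as "passed=N failed=N warning=N".
cluvfy_summary() {
    awk '/\.\.\.PASSED/  { p++ }
         /\.\.\.FAILED/  { f++ }
         /\.\.\.WARNING/ { w++ }
         END { printf "passed=%d failed=%d warning=%d\n", p, f, w }' "$1"
}

# Example:
# cluvfy_summary /tmp/cluvfy_nodeadd.log
# cluvfy_problems /tmp/cluvfy_nodeadd.log
```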

[grid@rac1 ~]$ hostname

rac1.localdomain

[grid@rac1 ~]$ id

uid=1001(grid) gid=2000(oinstall) groups=2000(oinstall),2100(asmadmin),2200(dba),2300(oper),2400(asmdba),2500(asmoper)

[grid@rac1 ~]$ cd $ORACLE_HOME

[grid@rac1 grid]$ pwd

/u01/app/21.0.0/grid

[grid@rac1 grid]$ ls -ltr *runcluv*

-rwxr-x--- 1 grid oinstall 628 Sep 4 2015 runcluvfy.sh

[grid@rac1 grid]$ ./runcluvfy.sh stage -pre nodeadd -n rac3 -verbose

This software is "264" days old. It is a best practice to update the CRS home by downloading and applying the latest release update. Refer to MOS note 756671.1 for more details.

Performing following verification checks ...

Physical Memory ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 3.6491GB (3826396.0KB) 8GB (8388608.0KB) failed

rac3 3.6491GB (3826396.0KB) 8GB (8388608.0KB) failed

Physical Memory ...FAILED (PRVF-7530)

Available Physical Memory ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 1.6858GB (1767672.0KB) 50MB (51200.0KB) passed

rac3 2.6303GB (2758072.0KB) 50MB (51200.0KB) passed

Available Physical Memory ...PASSED

Swap Size ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 3.5GB (3670012.0KB) 3.6491GB (3826396.0KB) passed

rac3 3.5GB (3670012.0KB) 3.6491GB (3826396.0KB) passed

Swap Size ...PASSED

Free Space: rac1:/usr,rac1:/var,rac1:/etc,rac1:/u01/app/21.0.0/grid,rac1:/sbin,rac1:/tmp ...

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/usr rac1 / 15.1631GB 25MB passed

/var rac1 / 15.1631GB 5MB passed

/etc rac1 / 15.1631GB 25MB passed

/u01/app/21.0.0/grid rac1 / 15.1631GB 6.9GB passed

/sbin rac1 / 15.1631GB 10MB passed

/tmp rac1 / 15.1631GB 1GB passed

Free Space: rac1:/usr,rac1:/var,rac1:/etc,rac1:/u01/app/21.0.0/grid,rac1:/sbin,rac1:/tmp ...PASSED

Free Space: rac3:/usr,rac3:/var,rac3:/etc,rac3:/u01/app/21.0.0/grid,rac3:/sbin,rac3:/tmp ...

Path Node Name Mount point Available Required Status

---------------- ------------ ------------ ------------ ------------ ------------

/usr rac3 / 15.1328GB 25MB passed

/var rac3 / 15.1328GB 5MB passed

/etc rac3 / 15.1328GB 25MB passed

/u01/app/21.0.0/grid rac3 / 15.1328GB 6.9GB passed

/sbin rac3 / 15.1328GB 10MB passed

/tmp rac3 / 15.1328GB 1GB passed

Free Space: rac3:/usr,rac3:/var,rac3:/etc,rac3:/u01/app/21.0.0/grid,rac3:/sbin,rac3:/tmp ...PASSED

User Existence: grid ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac1 passed exists(1001)

rac3 passed exists(1001)

Users With Same UID: 1001 ...PASSED

User Existence: grid ...PASSED

User Existence: root ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac1 passed exists(0)

rac3 passed exists(0)

Users With Same UID: 0 ...PASSED

User Existence: root ...PASSED

Group Existence: asmadmin ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac1 passed exists

rac3 passed exists

Group Existence: asmadmin ...PASSED

Group Existence: asmoper ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac1 passed exists

rac3 passed exists

Group Existence: asmoper ...PASSED

Group Existence: asmdba ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac1 passed exists

rac3 passed exists

Group Existence: asmdba ...PASSED

Group Existence: oinstall ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac1 passed exists

rac3 passed exists

Group Existence: oinstall ...PASSED

Group Membership: oinstall ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rac1 yes yes yes passed

rac3 yes yes yes passed

Group Membership: oinstall ...PASSED

Group Membership: asmdba ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rac1 yes yes yes passed

rac3 yes yes yes passed

Group Membership: asmdba ...PASSED

Group Membership: asmadmin ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rac1 yes yes yes passed

rac3 yes yes yes passed

Group Membership: asmadmin ...PASSED

Group Membership: asmoper ...

Node Name User Exists Group Exists User in Group Status

---------------- ------------ ------------ ------------ ----------------

rac1 yes yes yes passed

rac3 yes yes yes passed

Group Membership: asmoper ...PASSED

Run Level ...

Node Name run level Required Status

------------ ------------------------ ------------------------ ----------

rac1 5 3,5 passed

rac3 5 3,5 passed

Run Level ...PASSED

Hard Limit: maximum open file descriptors ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac1 hard 65536 65536 passed

rac3 hard 65536 65536 passed

Hard Limit: maximum open file descriptors ...PASSED

Soft Limit: maximum open file descriptors ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac1 soft 1024 1024 passed

rac3 soft 1024 1024 passed

Soft Limit: maximum open file descriptors ...PASSED

Hard Limit: maximum user processes ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac1 hard 16384 16384 passed

rac3 hard 16384 16384 passed

Hard Limit: maximum user processes ...PASSED

Soft Limit: maximum user processes ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac1 soft 16384 2047 passed

rac3 soft 16384 2047 passed

Soft Limit: maximum user processes ...PASSED

Soft Limit: maximum stack size ...

Node Name Type Available Required Status

---------------- ------------ ------------ ------------ ----------------

rac1 soft 10240 10240 passed

rac3 soft 10240 10240 passed

Soft Limit: maximum stack size ...PASSED

Architecture ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 x86_64 x86_64 passed

rac3 x86_64 x86_64 passed

Architecture ...PASSED

OS Kernel Version ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 4.18.0-305.el8.x86_64 4.18.0 passed

rac3 4.18.0-305.el8.x86_64 4.18.0 passed

OS Kernel Version ...PASSED

OS Kernel Parameter: semmsl ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 250 250 250 passed

rac3 250 250 250 passed

OS Kernel Parameter: semmsl ...PASSED

OS Kernel Parameter: semmns ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 32000 32000 32000 passed

rac3 32000 32000 32000 passed

OS Kernel Parameter: semmns ...PASSED

OS Kernel Parameter: semopm ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 100 100 100 passed

rac3 100 100 100 passed

OS Kernel Parameter: semopm ...PASSED

OS Kernel Parameter: semmni ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 128 128 128 passed

rac3 128 128 128 passed

OS Kernel Parameter: semmni ...PASSED

OS Kernel Parameter: shmmax ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 4398046511104 4398046511104 1959114752 passed

rac3 4398046511104 4398046511104 1959114752 passed

OS Kernel Parameter: shmmax ...PASSED

OS Kernel Parameter: shmmni ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 4096 4096 4096 passed

rac3 4096 4096 4096 passed

OS Kernel Parameter: shmmni ...PASSED

OS Kernel Parameter: shmall ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 1073741824 1073741824 1073741824 passed

rac3 1073741824 1073741824 1073741824 passed

OS Kernel Parameter: shmall ...PASSED

OS Kernel Parameter: file-max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 6815744 6815744 6815744 passed

rac3 6815744 6815744 6815744 passed

OS Kernel Parameter: file-max ...PASSED

OS Kernel Parameter: ip_local_port_range ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 between 9000 & 65500 between 9000 & 65500 between 9000 & 65535 passed

rac3 between 9000 & 65500 between 9000 & 65500 between 9000 & 65535 passed

OS Kernel Parameter: ip_local_port_range ...PASSED

OS Kernel Parameter: rmem_default ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 262144 262144 262144 passed

rac3 262144 262144 262144 passed

OS Kernel Parameter: rmem_default ...PASSED

OS Kernel Parameter: rmem_max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 4194304 4194304 4194304 passed

rac3 4194304 4194304 4194304 passed

OS Kernel Parameter: rmem_max ...PASSED

OS Kernel Parameter: wmem_default ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 262144 262144 262144 passed

rac3 262144 262144 262144 passed

OS Kernel Parameter: wmem_default ...PASSED

OS Kernel Parameter: wmem_max ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 1048576 1048576 1048576 passed

rac3 1048576 1048576 1048576 passed

OS Kernel Parameter: wmem_max ...PASSED

OS Kernel Parameter: aio-max-nr ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 1048576 1048576 1048576 passed

rac3 1048576 1048576 1048576 passed

OS Kernel Parameter: aio-max-nr ...PASSED

OS Kernel Parameter: panic_on_oops ...

Node Name Current Configured Required Status Comment

---------------- ------------ ------------ ------------ ------------ ------------

rac1 1 1 1 passed

rac3 1 1 1 passed

OS Kernel Parameter: panic_on_oops ...PASSED

Package: kmod-20-21 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 kmod(x86_64)-25-17.el8 kmod(x86_64)-20-21 passed

rac3 kmod(x86_64)-25-17.el8 kmod(x86_64)-20-21 passed

Package: kmod-20-21 (x86_64) ...PASSED

Package: kmod-libs-20-21 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 kmod-libs(x86_64)-25-17.el8 kmod-libs(x86_64)-20-21 passed

rac3 kmod-libs(x86_64)-25-17.el8 kmod-libs(x86_64)-20-21 passed

Package: kmod-libs-20-21 (x86_64) ...PASSED

Package: binutils-2.30-49.0.2 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 binutils-2.30-93.el8 binutils-2.30-49.0.2 passed

rac3 binutils-2.30-93.el8 binutils-2.30-49.0.2 passed

Package: binutils-2.30-49.0.2 ...PASSED

Package: libgcc-8.2.1 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 libgcc(x86_64)-8.4.1-1.el8 libgcc(x86_64)-8.2.1 passed

rac3 libgcc(x86_64)-8.4.1-1.el8 libgcc(x86_64)-8.2.1 passed

Package: libgcc-8.2.1 (x86_64) ...PASSED

Package: libstdc++-8.2.1 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 libstdc++(x86_64)-8.4.1-1.el8 libstdc++(x86_64)-8.2.1 passed

rac3 libstdc++(x86_64)-8.4.1-1.el8 libstdc++(x86_64)-8.2.1 passed

Package: libstdc++-8.2.1 (x86_64) ...PASSED

Package: sysstat-10.1.5 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 sysstat-11.7.3-5.el8 sysstat-10.1.5 passed

rac3 sysstat-11.7.3-5.el8 sysstat-10.1.5 passed

Package: sysstat-10.1.5 ...PASSED

Package: ksh ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 ksh ksh passed

rac3 ksh ksh passed

Package: ksh ...PASSED

Package: make-4.2.1 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 make-4.2.1-10.el8 make-4.2.1 passed

rac3 make-4.2.1-10.el8 make-4.2.1 passed

Package: make-4.2.1 ...PASSED

Package: glibc-2.28 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 glibc(x86_64)-2.28-151.el8 glibc(x86_64)-2.28 passed

rac3 glibc(x86_64)-2.28-151.el8 glibc(x86_64)-2.28 passed

Package: glibc-2.28 (x86_64) ...PASSED

Package: glibc-devel-2.28 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 glibc-devel(x86_64)-2.28-151.el8 glibc-devel(x86_64)-2.28 passed

rac3 glibc-devel(x86_64)-2.28-151.el8 glibc-devel(x86_64)-2.28 passed

Package: glibc-devel-2.28 (x86_64) ...PASSED

Package: libaio-0.3.110 (x86_64) ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 libaio(x86_64)-0.3.112-1.el8 libaio(x86_64)-0.3.110 passed

rac3 libaio(x86_64)-0.3.112-1.el8 libaio(x86_64)-0.3.110 passed

Package: libaio-0.3.110 (x86_64) ...PASSED

Package: nfs-utils-2.3.3-14 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 nfs-utils-2.3.3-41.el8 nfs-utils-2.3.3-14 passed

rac3 nfs-utils-2.3.3-41.el8 nfs-utils-2.3.3-14 passed

Package: nfs-utils-2.3.3-14 ...PASSED

Package: smartmontools-6.6-3 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 smartmontools-7.1-1.el8 smartmontools-6.6-3 passed

rac3 smartmontools-7.1-1.el8 smartmontools-6.6-3 passed

Package: smartmontools-6.6-3 ...PASSED

Package: net-tools-2.0-0.51 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 net-tools-2.0-0.52.20160912git.el8 net-tools-2.0-0.51 passed

rac3 net-tools-2.0-0.52.20160912git.el8 net-tools-2.0-0.51 passed

Package: net-tools-2.0-0.51 ...PASSED

Package: policycoreutils-2.9-3 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 policycoreutils-2.9-14.el8 policycoreutils-2.9-3 passed

rac3 policycoreutils-2.9-14.el8 policycoreutils-2.9-3 passed

Package: policycoreutils-2.9-3 ...PASSED

Package: policycoreutils-python-utils-2.9-3 ...

Node Name Available Required Status

------------ ------------------------ ------------------------ ----------

rac1 policycoreutils-python-utils-2.9-14.el8 policycoreutils-python-utils-2.9-3 passed

rac3 policycoreutils-python-utils-2.9-14.el8 policycoreutils-python-utils-2.9-3 passed

Package: policycoreutils-python-utils-2.9-3 ...PASSED

Users With Same UID: 0 ...PASSED

Current Group ID ...PASSED

Root user consistency ...

Node Name Status

------------------------------------ ------------------------

rac1 passed

rac3 passed

Root user consistency ...PASSED

Node Addition ...

CRS Integrity ...PASSED

Clusterware Version Consistency ...PASSED

'/u01/app/21.0.0/grid' ...PASSED

Node Addition ...PASSED

Host name ...PASSED

Node Connectivity ...

Hosts File ...

Node Name Status

------------------------------------ ------------------------

rac1 passed

rac2 passed

rac3 passed

Hosts File ...PASSED

Interface information for node "rac1"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

enp0s3 10.20.30.101 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500

enp0s3 10.20.30.105 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500

enp0s3 10.20.30.103 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500

enp0s3 10.20.30.107 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500

enp0s8 10.1.2.201 10.1.2.0 0.0.0.0 UNKNOWN 08:00:27:7E:D7:1A 1500

Interface information for node "rac3"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

enp0s3 10.20.30.106 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:57:6A:1F 1500

enp0s8 10.1.2.203 10.1.2.0 0.0.0.0 UNKNOWN 08:00:27:2D:59:72 1500

Interface information for node "rac2"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

enp0s3 10.20.30.102 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:79:B4:29 1500

enp0s3 10.20.30.104 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:79:B4:29 1500

enp0s8 10.1.2.202 10.1.2.0 0.0.0.0 UNKNOWN 08:00:27:73:FE:D9 1500

Check: MTU consistency on the private interfaces of subnet "10.1.2.0"

Node Name IP Address Subnet MTU

---------------- ------------ ------------ ------------ ----------------

rac1 enp0s8 10.1.2.201 10.1.2.0 1500

rac3 enp0s8 10.1.2.203 10.1.2.0 1500

rac2 enp0s8 10.1.2.202 10.1.2.0 1500

Check: MTU consistency of the subnet "10.20.30.0".

Node Name IP Address Subnet MTU

---------------- ------------ ------------ ------------ ----------------

rac1 enp0s3 10.20.30.101 10.20.30.0 1500

rac1 enp0s3 10.20.30.105 10.20.30.0 1500

rac1 enp0s3 10.20.30.103 10.20.30.0 1500

rac1 enp0s3 10.20.30.107 10.20.30.0 1500

rac3 enp0s3 10.20.30.106 10.20.30.0 1500

rac2 enp0s3 10.20.30.102 10.20.30.0 1500

rac2 enp0s3 10.20.30.104 10.20.30.0 1500

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac1[enp0s8:10.1.2.201] rac3[enp0s8:10.1.2.203] yes

rac1[enp0s8:10.1.2.201] rac2[enp0s8:10.1.2.202] yes

rac3[enp0s8:10.1.2.203] rac2[enp0s8:10.1.2.202] yes

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac1[enp0s3:10.20.30.101] rac1[enp0s3:10.20.30.105] yes

rac1[enp0s3:10.20.30.101] rac1[enp0s3:10.20.30.103] yes

rac1[enp0s3:10.20.30.101] rac1[enp0s3:10.20.30.107] yes

rac1[enp0s3:10.20.30.101] rac3[enp0s3:10.20.30.106] yes

rac1[enp0s3:10.20.30.101] rac2[enp0s3:10.20.30.102] yes

rac1[enp0s3:10.20.30.101] rac2[enp0s3:10.20.30.104] yes

rac1[enp0s3:10.20.30.105] rac1[enp0s3:10.20.30.103] yes

rac1[enp0s3:10.20.30.105] rac1[enp0s3:10.20.30.107] yes

rac1[enp0s3:10.20.30.105] rac3[enp0s3:10.20.30.106] yes

rac1[enp0s3:10.20.30.105] rac2[enp0s3:10.20.30.102] yes

rac1[enp0s3:10.20.30.105] rac2[enp0s3:10.20.30.104] yes

rac1[enp0s3:10.20.30.103] rac1[enp0s3:10.20.30.107] yes

rac1[enp0s3:10.20.30.103] rac3[enp0s3:10.20.30.106] yes

rac1[enp0s3:10.20.30.103] rac2[enp0s3:10.20.30.102] yes

rac1[enp0s3:10.20.30.103] rac2[enp0s3:10.20.30.104] yes

rac1[enp0s3:10.20.30.107] rac3[enp0s3:10.20.30.106] yes

rac1[enp0s3:10.20.30.107] rac2[enp0s3:10.20.30.102] yes

rac1[enp0s3:10.20.30.107] rac2[enp0s3:10.20.30.104] yes

rac3[enp0s3:10.20.30.106] rac2[enp0s3:10.20.30.102] yes

rac3[enp0s3:10.20.30.106] rac2[enp0s3:10.20.30.104] yes

rac2[enp0s3:10.20.30.102] rac2[enp0s3:10.20.30.104] yes

Check that maximum (MTU) size packet goes through subnet ...PASSED

subnet mask consistency for subnet "10.1.2.0" ...PASSED

subnet mask consistency for subnet "10.20.30.0" ...PASSED

Node Connectivity ...PASSED

Multicast or broadcast check ...

Checking subnet "10.1.2.0" for multicast communication with multicast group "224.0.0.251"

Multicast or broadcast check ...PASSED

ASM Network ...PASSED

Device Checks for ASM ...Disks "/dev/oracleasm/disks/OCRDISK1,/dev/oracleasm/disks/OCRDISK2,/dev/oracleasm/disks/OCRDISK3" are managed by ASM.

Device Checks for ASM ...PASSED

ASMLib installation and configuration verification. ...

'/etc/init.d/oracleasm' ...PASSED

'/dev/oracleasm' ...PASSED

'/etc/sysconfig/oracleasm' ...PASSED

Node Name Status

------------------------------------ ------------------------

rac1 passed

rac3 passed

ASMLib installation and configuration verification. ...PASSED

OCR Integrity ...PASSED

Time zone consistency ...PASSED

Network Time Protocol (NTP) ...PASSED

User Not In Group "root": grid ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac1 passed does not exist

rac3 passed does not exist

User Not In Group "root": grid ...PASSED

Time offset between nodes ...PASSED

resolv.conf Integrity ...PASSED

DNS/NIS name service ...PASSED

User Equivalence ...

Node Name Status

------------------------------------ ------------------------

rac2 passed

rac3 passed

User Equivalence ...PASSED

Software home: /u01/app/21.0.0/grid ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac1 passed 1306 files verified

Software home: /u01/app/21.0.0/grid ...PASSED

/dev/shm mounted as temporary file system ...PASSED

zeroconf check ...PASSED

Pre-check for node addition was unsuccessful.

Checks did not pass for the following nodes:

rac1,rac3

Failures were encountered during execution of CVU verification request "stage -pre nodeadd".

Physical Memory ...FAILED

rac1: PRVF-7530 : Sufficient physical memory is not available on node "rac1"

[Required physical memory = 8GB (8388608.0KB)]

rac3: PRVF-7530 : Sufficient physical memory is not available on node "rac3"

[Required physical memory = 8GB (8388608.0KB)]

CVU operation performed: stage -pre nodeadd

Date: Dec 26, 2022 4:47:32 PM

Clusterware version: 21.0.0.0.0

CVU home: /u01/app/21.0.0/grid

Grid home: /u01/app/21.0.0/grid

User: grid

Operating system: Linux4.18.0-305.el8.x86_64

[grid@rac1 grid]$
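The only failed pre-check above is Physical Memory (PRVF-7530, 8GB required), and the package checks compare an available version against a minimum. A minimal sketch for spot-checking both items by hand on a node follows. The function names are hypothetical (not part of CVU). GNU `sort -V` only approximates RPM's own version comparison. `MemTotal` in /proc/meminfo is usually slightly below installed RAM, so near the threshold CVU may reach a different answer.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of CVU) for spot-checking two pre-check items.

# 8GB in kB, as reported by PRVF-7530 in the pre-check output.
REQUIRED_MEM_KB=8388608

# Succeed if INSTALLED >= REQUIRED. GNU `sort -V` only approximates
# RPM's version comparison, so treat the result as a hint.
version_at_least() {
    local installed="$1" required="$2"
    [ "$(printf '%s\n%s\n' "$required" "$installed" | sort -V | head -n 1)" = "$required" ]
}

# Print PASSED/FAILED for MemTotal in a meminfo-style file (default /proc/meminfo).
check_physical_memory() {
    local meminfo="${1:-/proc/meminfo}" total_kb
    # The MemTotal line looks like: "MemTotal:        8009696 kB"
    total_kb=$(awk '/^MemTotal:/ { print $2 }' "$meminfo")
    if [ -z "$total_kb" ]; then
        echo "UNKNOWN"
        return 2
    fi
    if [ "$total_kb" -ge "$REQUIRED_MEM_KB" ]; then
        echo "PASSED ${total_kb}kB"
        return 0
    fi
    echo "FAILED ${total_kb}kB < ${REQUIRED_MEM_KB}kB"
    return 1
}
```

For example, `version_at_least "$(rpm -q --qf '%{VERSION}-%{RELEASE}' nfs-utils)" 2.3.3-14 && echo passed` checks one package, and `check_physical_memory` with no argument reads the local /proc/meminfo.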

Step 6: Start the GUI installer as the grid user from Node1 (rac1).

Select the option "Add more nodes to the cluster".

For example:

Public Hostname: rac3.localdomain

Virtual Hostname: rac3-vip.localdomain
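Both names entered here should resolve on every node. In this setup they come from the /etc/hosts entries added at the start of this post, not from DNS. A minimal sketch for confirming the entries exist before clicking Next follows. `check_hosts_entries` is a hypothetical helper, and it only looks at a hosts-format file, not at DNS.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm each hostname has an entry in a hosts-format file.
# Usage: check_hosts_entries /etc/hosts rac3.localdomain rac3-vip.localdomain
check_hosts_entries() {
    local hosts_file="$1" name rc=0
    shift
    for name in "$@"; do
        # Ignore comment lines; compare the name against every name column (2..NF).
        if awk -v n="$name" '
               /^[ \t]*#/ { next }
               { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
               END { exit !found }' "$hosts_file"; then
            echo "$name: found"
        else
            echo "$name: MISSING"
            rc=1
        fi
    done
    return "$rc"
}
```

Run it on rac1, rac2 and rac3 so that all three hosts files are checked.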

[root@rac1 bin]# cd /u01/app/21.0.0/grid/bin

[root@rac1 bin]# ls -ltr OCRDUMPFILE

-rw-r----- 1 root root 348488 Jul 9 05:51 OCRDUMPFILE

[root@rac1 bin]# chmod 777 OCRDUMPFILE

[root@rac1 bin]# ls -ltr OCRDUMPFILE

-rwxrwxrwx 1 root root 348488 Jul 9 05:51 OCRDUMPFILE

Click OK, then click Next again to continue.

[root@rac3 ~]# id

uid=0(root) gid=0(root) groups=0(root)

[root@rac3 ~]# hostname

rac3.localdomain

[root@rac3 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@rac3 ~]#
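orainstRoot.sh reports three things: it adds read/write for the group, removes all world permissions, and sets the group of /u01/app/oraInventory to oinstall. A minimal sketch for confirming that state afterwards follows. `check_inventory_perms` is a hypothetical helper that takes the mode and group as printed by GNU `stat -c '%a %G'`. The "group rw, world none" rule is inferred from the script's messages.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the state orainstRoot.sh reports for oraInventory.
# Arguments: octal MODE and GROUP, e.g. from: stat -c '%a %G' /u01/app/oraInventory
check_inventory_perms() {
    local mode="$1" group="$2" rc=0
    local group_bits=$(( (8#$mode >> 3) & 7 ))
    local other_bits=$(( 8#$mode & 7 ))

    if [ "$group" != "oinstall" ]; then
        echo "group is $group, expected oinstall"; rc=1
    fi
    # Group needs at least read (4) and write (2).
    if (( (group_bits & 6) != 6 )); then
        echo "group lacks read/write (group bits: $group_bits)"; rc=1
    fi
    # World (other) should have no permissions at all.
    if (( other_bits != 0 )); then
        echo "world still has permissions (other bits: $other_bits)"; rc=1
    fi
    if (( rc == 0 )); then
        echo "oraInventory permissions look as orainstRoot.sh reports"
    fi
    return "$rc"
}
```

Usage on rac3: `check_inventory_perms $(stat -c '%a %G' /u01/app/oraInventory)`.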

[root@rac3 ~]# /u01/app/21.0.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/21.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/21.0.0/grid/crs/install/crsconfig_params

2022-12-24 19:33:01: Got permissions of file /u01/app/grid/crsdata/rac3/crsconfig: 0775

2022-12-24 19:33:01: Got permissions of file /u01/app/grid/crsdata: 0775

2022-12-24 19:33:01: Got permissions of file /u01/app/grid/crsdata/rac3: 0775

The log of current session can be found at:

/u01/app/grid/crsdata/rac3/crsconfig/rootcrs_rac3_2022-12-24_07-33-01PM.log

2022/12/24 19:33:26 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2022/12/24 19:33:26 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2022/12/24 19:33:26 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2022/12/24 19:33:27 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2022/12/24 19:33:27 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2022/12/24 19:33:27 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2022/12/24 19:33:30 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2022/12/24 19:33:31 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2022/12/24 19:33:31 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2022/12/24 19:33:58 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2022/12/24 19:33:58 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2022/12/24 19:34:00 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2022/12/24 19:34:00 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2022/12/24 19:34:30 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2022/12/24 19:34:30 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2022/12/24 19:35:07 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2022/12/24 19:35:08 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

2022/12/24 19:35:11 CLSRSC-4002: Successfully installed Oracle Autonomous Health Framework (AHF).

2022/12/24 19:35:42 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2022/12/24 19:37:46 CLSRSC-343: Successfully started Oracle Clusterware stack

2022/12/24 19:37:46 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

clscfg: EXISTING configuration version 21 detected.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

2022/12/24 19:38:09 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2022/12/24 19:40:10 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@rac3 ~]#
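root.sh prints each installation step (CLSRSC-594) and, on success, ends with a CLSRSC-325 "... succeeded" line. If you save that console output to a file, a minimal sketch like the following can summarize it. `summarize_rootsh` is a hypothetical helper, and it assumes the message formats shown above. It is not written against the rootcrs_*.log file, whose format may differ.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize saved root.sh console output.
# Argument: a file containing the output (for example, pasted from the terminal).
summarize_rootsh() {
    local out="$1" last_step
    # Step lines look like: "... CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'."
    last_step=$(grep -o 'installation step [0-9]* of [0-9]*' "$out" | tail -n 1)
    echo "Last step seen: ${last_step:-none}"
    # Completion line looks like: "... CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded"
    if grep -q 'CLSRSC-325: .* succeeded' "$out"; then
        echo "root.sh: SUCCEEDED"
        return 0
    fi
    echo "root.sh: NOT CONFIRMED"
    return 1
}
```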

Click Close to finish the node addition.

You have now successfully added a new node to the existing cluster using the graphical method.

Step 7: Ensure all CRS services are up and running on the newly added node.

[grid@rac3 ~]$ ps -ef | grep pmon

grid 133813 7031 0 19:38 ? 00:00:00 asm_pmon_+ASM3

grid 137723 137138 0 19:43 pts/0 00:00:00 grep --color=auto pmon

[grid@rac3 ~]$ ps -ef | grep tns

root 24 2 0 17:05 ? 00:00:00 [netns]

grid 133287 7031 0 19:38 ? 00:00:00 /u01/app/21.0.0/grid/bin/tnslsnr LISTENER -no_crs_notify -inherit

grid 133321 7031 0 19:38 ? 00:00:00 /u01/app/21.0.0/grid/bin/tnslsnr ASMNET1LSNR_ASM -no_crs_notify -inherit

grid 139466 139018 0 19:45 pts/0 00:00:00 grep --color=auto tns

[grid@rac3 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ONLINE ONLINE rac3 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ONLINE ONLINE rac3 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ONLINE ONLINE rac3 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ONLINE ONLINE rac3 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 ONLINE ONLINE rac3 STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac2 STABLE

ora.OCR.dg(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 ONLINE ONLINE rac3 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 ONLINE ONLINE rac3 Started,STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 ONLINE ONLINE rac3 STABLE

ora.cdp1.cdp

1 ONLINE ONLINE rac2 STABLE

ora.cvu

1 ONLINE ONLINE rac2 STABLE

ora.qosmserver

1 ONLINE ONLINE rac2 STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.rac3.vip

1 ONLINE ONLINE rac3 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac2 STABLE

[grid@rac3 ~]$ crsctl check cluster -all

**************************************************************

rac1:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

rac2:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

rac3:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

[grid@rac3 ~]$ olsnodes -s -t

rac1 Active Unpinned

rac2 Active Unpinned

rac3 Active Unpinned

[grid@rac3 ~]$ lsnrctl status

LSNRCTL for Linux: Version 21.0.0.0.0 - Production on 24-DEC-2022 19:45:54

Copyright (c) 1991, 2021, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER)))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 21.0.0.0.0 - Production

Start Date 24-DEC-2022 19:38:13

Uptime 0 days 0 hr. 7 min. 40 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/21.0.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/rac3/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=10.20.30.106)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=10.20.30.107)(PORT=1521)))

Services Summary...

Service "+ASM" has 1 instance(s).

Instance "+ASM3", status READY, has 1 handler(s) for this service...

Service "+ASM_OCR" has 1 instance(s).

Instance "+ASM3", status READY, has 1 handler(s) for this service...

The command completed successfully
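The outputs above are checked by eye. A minimal sketch for scripting the same check follows. It assumes the `olsnodes -s -t` and `crsctl check cluster -all` output formats shown above, and the function names are hypothetical. It checks only that every node is Active and reports the three CRS/CSS/EVM "online" messages.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not Oracle tools) that read command output on stdin.

# Input: output of `olsnodes -s -t`, e.g. "rac3 Active Unpinned".
# Fails if any node's status column is not "Active" or no nodes were read.
check_olsnodes() {
    awk 'NF >= 2 {
             total++
             if ($2 != "Active") { print "NOT ACTIVE: " $1; bad++ }
         }
         END {
             printf "%d node(s) checked, %d not active\n", total, bad
             exit (bad > 0 || total == 0)
         }'
}

# Input: output of `crsctl check cluster -all`.
# Expects CRS-4537 (CRS), CRS-4529 (CSS) and CRS-4533 (EVM) under each "node:" line.
check_crs_all() {
    awk '/^[A-Za-z0-9._-]+:[ \t]*$/ { node = $1; sub(/:$/, "", node); seen[node] = 1; next }
         /^CRS-(4537|4529|4533):/ { if (node != "") ok[node]++ }
         END {
             n = 0; bad = 0
             for (h in seen) {
                 n++
                 if (ok[h] + 0 < 3) { print "CHECK NODE: " h; bad++ }
             }
             printf "%d node(s) checked, %d not fully online\n", n, bad
             exit (bad > 0 || n == 0)
         }'
}
```

As the grid user, for example: `olsnodes -s -t | check_olsnodes` and `crsctl check cluster -all | check_crs_all`.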

[grid@rac1 grid]$ ./runcluvfy.sh stage -post nodeadd -n rac3 -verbose

This software is "264" days old. It is a best practice to update the CRS home by downloading and applying the latest release update. Refer to MOS note 756671.1 for more details.

Performing following verification checks ...

Node Connectivity ...

Hosts File ...

Node Name Status

------------------------------------ ------------------------

rac1 passed

rac3 passed

rac2 passed

Hosts File ...PASSED

Interface information for node "rac1"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

enp0s3 10.20.30.101 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500

enp0s3 10.20.30.105 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500

enp0s3 10.20.30.103 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500

enp0s8 10.1.2.201 10.1.2.0 0.0.0.0 UNKNOWN 08:00:27:7E:D7:1A 1500

Interface information for node "rac3"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

enp0s3 10.20.30.106 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:57:6A:1F 1500

enp0s3 10.20.30.107 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:57:6A:1F 1500

enp0s8 10.1.2.203 10.1.2.0 0.0.0.0 UNKNOWN 08:00:27:2D:59:72 1500

Interface information for node "rac2"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

enp0s3 10.20.30.102 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:79:B4:29 1500

enp0s3 10.20.30.104 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:79:B4:29 1500

enp0s8 10.1.2.202 10.1.2.0 0.0.0.0 UNKNOWN 08:00:27:73:FE:D9 1500

Check: MTU consistency on the private interfaces of subnet "10.1.2.0"

Node Name IP Address Subnet MTU

---------------- ------------ ------------ ------------ ----------------

rac1 enp0s8 10.1.2.201 10.1.2.0 1500

rac3 enp0s8 10.1.2.203 10.1.2.0 1500

rac2 enp0s8 10.1.2.202 10.1.2.0 1500

Check: MTU consistency of the subnet "10.20.30.0".

Node Name IP Address Subnet MTU

---------------- ------------ ------------ ------------ ----------------

rac1 enp0s3 10.20.30.101 10.20.30.0 1500

rac1 enp0s3 10.20.30.105 10.20.30.0 1500

rac1 enp0s3 10.20.30.103 10.20.30.0 1500

rac3 enp0s3 10.20.30.106 10.20.30.0 1500

rac3 enp0s3 10.20.30.107 10.20.30.0 1500

rac2 enp0s3 10.20.30.102 10.20.30.0 1500

rac2 enp0s3 10.20.30.104 10.20.30.0 1500

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac1[enp0s8:10.1.2.201] rac3[enp0s8:10.1.2.203] yes

rac1[enp0s8:10.1.2.201] rac2[enp0s8:10.1.2.202] yes

rac3[enp0s8:10.1.2.203] rac2[enp0s8:10.1.2.202] yes

Source Destination Connected?

------------------------------ ------------------------------ ----------------

rac1[enp0s3:10.20.30.101] rac1[enp0s3:10.20.30.105] yes

rac1[enp0s3:10.20.30.101] rac1[enp0s3:10.20.30.103] yes

rac1[enp0s3:10.20.30.101] rac3[enp0s3:10.20.30.106] yes

rac1[enp0s3:10.20.30.101] rac3[enp0s3:10.20.30.107] yes

rac1[enp0s3:10.20.30.101] rac2[enp0s3:10.20.30.102] yes

rac1[enp0s3:10.20.30.101] rac2[enp0s3:10.20.30.104] yes

rac1[enp0s3:10.20.30.105] rac1[enp0s3:10.20.30.103] yes

rac1[enp0s3:10.20.30.105] rac3[enp0s3:10.20.30.106] yes

rac1[enp0s3:10.20.30.105] rac3[enp0s3:10.20.30.107] yes

rac1[enp0s3:10.20.30.105] rac2[enp0s3:10.20.30.102] yes

rac1[enp0s3:10.20.30.105] rac2[enp0s3:10.20.30.104] yes

rac1[enp0s3:10.20.30.103] rac3[enp0s3:10.20.30.106] yes

rac1[enp0s3:10.20.30.103] rac3[enp0s3:10.20.30.107] yes

rac1[enp0s3:10.20.30.103] rac2[enp0s3:10.20.30.102] yes

rac1[enp0s3:10.20.30.103] rac2[enp0s3:10.20.30.104] yes

rac3[enp0s3:10.20.30.106] rac3[enp0s3:10.20.30.107] yes

rac3[enp0s3:10.20.30.106] rac2[enp0s3:10.20.30.102] yes

rac3[enp0s3:10.20.30.106] rac2[enp0s3:10.20.30.104] yes

rac3[enp0s3:10.20.30.107] rac2[enp0s3:10.20.30.102] yes

rac3[enp0s3:10.20.30.107] rac2[enp0s3:10.20.30.104] yes

rac2[enp0s3:10.20.30.102] rac2[enp0s3:10.20.30.104] yes

Check that maximum (MTU) size packet goes through subnet ...PASSED

subnet mask consistency for subnet "10.1.2.0" ...PASSED

subnet mask consistency for subnet "10.20.30.0" ...PASSED

Node Connectivity ...PASSED

Cluster Integrity ...

Node Name

------------------------------------

rac1

rac2

rac3

Cluster Integrity ...PASSED

Node Addition ...

CRS Integrity ...PASSED

Clusterware Version Consistency ...PASSED

'/u01/app/21.0.0/grid' ...PASSED

Node Addition ...PASSED

Multicast or broadcast check ...

Checking subnet "10.1.2.0" for multicast communication with multicast group "224.0.0.251"

Multicast or broadcast check ...PASSED

Node Application Existence ...

Checking existence of VIP node application (required)

Node Name Required Running? Comment

------------ ------------------------ ------------------------ ----------

rac1 yes yes passed

rac3 yes yes passed

rac2 yes yes passed

Checking existence of NETWORK node application (required)

Node Name Required Running? Comment

------------ ------------------------ ------------------------ ----------

rac1 yes yes passed

rac3 yes yes passed

rac2 yes yes passed

Checking existence of ONS node application (optional)

Node Name Required Running? Comment

------------ ------------------------ ------------------------ ----------

rac1 no yes passed

rac3 no yes passed

rac2 no yes passed

Node Application Existence ...PASSED

Single Client Access Name (SCAN) ...

SCAN Name Node Running? ListenerName Port Running?

---------------- ------------ ------------ ------------ ------------ ------------

rac-scan rac1 true LISTENER_SCAN1 1530 true

Checking TCP connectivity to SCAN listeners...

Node ListenerName TCP connectivity?

------------ ------------------------ ------------------------

rac3 LISTENER_SCAN1 yes

DNS/NIS name service 'rac-scan' ...

Name Service Switch Configuration File Integrity ...PASSED

DNS/NIS name service 'rac-scan' ...FAILED (PRVG-1101)

Single Client Access Name (SCAN) ...FAILED (PRVG-11372, PRVG-1101)

User Not In Group "root": grid ...

Node Name Status Comment

------------ ------------------------ ------------------------

rac3 passed does not exist

User Not In Group "root": grid ...PASSED

Clock Synchronization ...

Node Name Status

------------------------------------ ------------------------

rac3 passed

Node Name State

------------------------------------ ------------------------

rac3 Active

Node Name Time Offset Status

------------ ------------------------ ------------------------

rac3 0.0 passed

Clock Synchronization ...PASSED

Post-check for node addition was unsuccessful.

Checks did not pass for the following nodes:

rac1,rac3

Failures were encountered during execution of CVU verification request "stage -post nodeadd".

Single Client Access Name (SCAN) ...FAILED

PRVG-11372 : Number of SCAN IP addresses that SCAN "rac-scan" resolved to did

not match the number of SCAN VIP resources

DNS/NIS name service 'rac-scan' ...FAILED

PRVG-1101 : SCAN name "rac-scan" failed to resolve

CVU operation performed: stage -post nodeadd

Date: Dec 26, 2022 5:00:45 PM

Clusterware version: 21.0.0.0.0

CVU home: /u01/app/21.0.0/grid

Grid home: /u01/app/21.0.0/grid

User: grid

Operating system: Linux4.18.0-305.el8.x86_64
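Both post-check failures (PRVG-1101, PRVG-11372) concern resolving the SCAN name rac-scan, and this setup uses no DNS server. A minimal sketch for confirming that rac-scan resolves through the local name service follows. `getent` follows /etc/nsswitch.conf, so /etc/hosts entries count. The sketch also checks that the number of distinct addresses matches the number of SCAN VIP resources (one, ora.scan1.vip, in the crsctl output above). The function names are hypothetical. A pass here does not mean CVU's DNS/NIS check will pass.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare SCAN name resolution with the SCAN VIP count.

# Input: output of `getent ahostsv4 <name>`, e.g. "10.20.30.105  STREAM rac-scan".
# Prints the number of distinct addresses (first column).
count_scan_ips() {
    awk 'NF > 0 && !seen[$1]++ { n++ } END { print n + 0 }'
}

# Usage: getent ahostsv4 rac-scan | compare_scan EXPECTED_VIP_COUNT
compare_scan() {
    local expected="$1" resolved
    resolved=$(count_scan_ips)
    echo "SCAN resolves to ${resolved} address(es); ${expected} SCAN VIP resource(s) expected"
    [ "$resolved" -eq "$expected" ]
}
```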

I will post the manual node addition method soon.

Please comment if you like this post!

Amazing steps. Thanks, Rupesh ji. But in the last post-check (cluvfy) step, there is one SCAN error. Can we ignore it, since we can see in the above log that all the nodes are connected to each other?

Thanks Manoj for your comment! You can ignore this error message since we are not using a DNS server, and the SCAN is working fine.

Precisely explained. I am looking forward to the manual node addition steps.

Sure, I will add the manual steps as well.
