Steps to install and configure Oracle 12c Grid Infrastructure -- Part - I

Environment Configuration Details:

Oracle Grid Infrastructure Software version: 12.1.0.2

RAC: YES

No. Of Nodes: 2-Node RAC Cluster

Operating System: Oracle Linux 6.3 64 Bit

Points to be checked before starting RAC installation prerequisites:

1) Am I downloading the correct version of the GRID software?

2) Is Grid Infrastructure certified on the current operating system?

3) Is my GRID software architecture 32-bit or 64-bit?

4) Is the operating system architecture 32-bit or 64-bit?

5) Is the operating system kernel version compatible with the software to be installed?

6) Is my server at runlevel 3 or 5?

7) Oracle strongly recommends disabling Transparent HugePages and using standard HugePages instead for better performance.

8) The Oracle Clusterware version must be equal to or greater than the Oracle RAC version that you plan to install.

9) Use identical server hardware on each node to simplify server maintenance.
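
Several of the checks above (architecture, kernel version, runlevel, Transparent HugePages) can be read directly from a running server. The following is a minimal sketch rather than an Oracle-supplied tool; the helper name `thp_mode` is made up for illustration, and the two sysfs paths are the locations commonly used by UEK and Red Hat compatible kernels, so treat them as assumptions for your system.

```shell
# Pre-installation sanity checks (illustrative sketch, not an Oracle tool).

# Print the active Transparent HugePages mode from a line such as
# "always madvise [never]"; the kernel marks the active mode with brackets.
thp_mode() {
    sed -n 's/.*\[\([a-z]*\)\].*/\1/p'
}

echo "Architecture : $(uname -m)"   # x86_64 indicates a 64-bit OS
echo "Kernel       : $(uname -r)"

# 'runlevel' may be missing on some hosts, so only call it when present.
if command -v runlevel >/dev/null 2>&1; then
    echo "Runlevel     : $(runlevel 2>/dev/null | awk '{print $2}')"
fi

# The sysfs location differs between kernels; check both common paths.
for f in /sys/kernel/mm/transparent_hugepage/enabled \
         /sys/kernel/mm/redhat_transparent_hugepage/enabled; do
    if [ -r "$f" ]; then
        echo "THP mode     : $(thp_mode < "$f") ($f)"
    fi
done
```

A THP mode of `never` is the state recommended in point 7; any other value means Transparent HugePages are still active.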

Step 1: Certification Matrix

As per the certification matrix, Oracle 12.1.0.2 Real Application Clusters is certified on Oracle Linux 6.

Supported kernel versions for Oracle Linux 6:

- Update 2 or higher, 2.6.39-200.24.1.el6uek.x86_64 or later UEK2 kernels

- Update 4 or higher, 3.8.13-16 or later UEK3 kernels

- Update 7 or higher, 4.1.12-32 or later UEK4 kernels

- Oracle Linux 6 with the Red Hat Compatible kernel: 2.6.32-71.el6.x86_64 or later
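
To compare a server's kernel against one of the minimum releases listed above, a version-aware comparison is safer than a plain string comparison. The sketch below assumes GNU `sort` with the `-V` option is available; the function name `kernel_at_least` is hypothetical, and the minimum used in the example is the UEK2 value from the list, so substitute the one that matches your kernel family.

```shell
# Succeed when release $2 is equal to or newer than the minimum release $1.
# Relies on version ordering from GNU sort (-V): the older release sorts first.
kernel_at_least() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Example: compare the running kernel with the UEK2 minimum.
# Choose the minimum for your own kernel family (UEK2, UEK3, UEK4, or RHCK).
min="2.6.39-200.24.1"
cur="$(uname -r)"
if kernel_at_least "$min" "$cur"; then
    echo "kernel $cur is at or above $min"
else
    echo "kernel $cur is below $min"
fi
```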

Step 2: Hardware Requirement

You can email the customer the hardware requirements below for configuring Oracle 12.1.0.2 RAC on Linux 6.

Please note that these are the minimum requirements for installation purposes only. You can increase them later as the business requires.

- The public, VIP, and SCAN IPs may come from the same or different address ranges, but they must all be on the same subnet.

- The private IP range and subnet must be different from the public, VIP, and SCAN range and subnet.

- For the Grid Infrastructure installation, only the OCR and /u01 storage is needed. The rest of the storage requirement is listed for installing the database software and creating the database.

- The 200 GB of space allows for the GRID and Oracle software plus future patches. Here, I have configured only 50 GB for storing the GRID and Oracle setup files in my testing environment.
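
The subnet rules above can be checked mechanically before any addresses are assigned. This is a small sketch with hypothetical helper names (`ip_to_int`, `same_subnet`); the addresses are the ones used in the /etc/hosts entries later in this walkthrough, and the 255.255.255.0 netmask is an assumption because the article does not state one.

```shell
# Convert a dotted-quad IPv4 address to an integer.
# Runs in a subshell so the IFS change does not leak to the caller.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed when addresses $1 and $2 are in the same network for netmask $3.
same_subnet() (
    a=$(ip_to_int "$1"); b=$(ip_to_int "$2"); m=$(ip_to_int "$3")
    [ $(( a & m )) -eq $(( b & m )) ]
)

mask=255.255.255.0   # assumed netmask; not stated in the article
for ip in 192.168.28.101 192.168.28.201 192.168.1.11; do
    if same_subnet 192.168.28.1 "$ip" "$mask"; then
        echo "$ip: same subnet as public IP 192.168.28.1"
    else
        echo "$ip: different subnet from public IP 192.168.28.1"
    fi
done
```

With these inputs, the VIP and SCAN addresses should land on the public subnet and the private address on a different one, which is the layout the rules call for.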

Minimum requirements for configuring an Oracle 12.1.0.2 two-node RAC cluster on Linux

| Specification Name | Node1 | Node2 |
|---|---|---|
| **Hardware Requirement** | | |
| Physical RAM (GB) | 4 | 4 |
| Swap (GB) | 4 | 4 |
| CPUs | 2 | 2 |
| **IP Requirement** | | |
| Public IPs | 1 | 1 |
| Private IPs | 1 | 1 |
| VIPs | 1 | 1 |
| Scan IPs | 1 or 3 (shared by both nodes) | |
| **Storage Requirement** | | |
| OCR | 10 GB x 3 disks | Shared |
| DATA | 500 GB x 2 disks | Shared |
| REDO1 | 50 GB x 1 disk | Shared |
| REDO2 | 50 GB x 1 disk | Shared |
| ARCH | 500 GB x 1 disk | Shared |
| /u01 | 200 GB x disk | Non-shared |
| /backup | 500 GB x 2 disks | Non-shared |

Step 3: Virtual Box Configuration

This step assumes that you have already installed VirtualBox and have a Linux virtual image ready. If not, refer to "Step by Step installation and configuration of Oracle 11g two Node RAC on Oracle Linux 7.5" for installing VirtualBox or Linux.

Here, I have a VirtualBox image ready with Linux 6.3 installed. Start VirtualBox and click the New option to begin importing the existing VDI image of the Linux setup.

Here is the option to import an existing VDI image file.

You can see that my VDI image file is located on the D: drive.

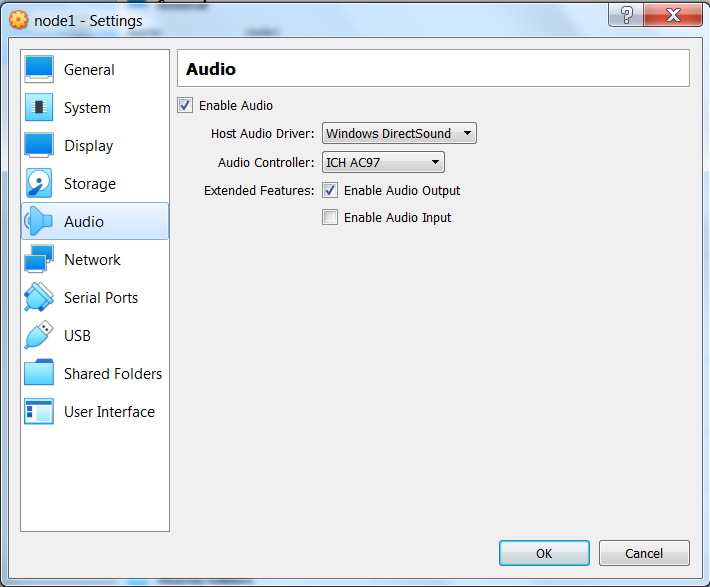

Now make the changes below in the VirtualBox settings. Ensure that the Ethernet card names are the same on all cluster nodes. For example, on Node1 the public Ethernet card is named pubnet and the private Ethernet card is named privnet. Similarly, on Node2 the public Ethernet card is named pubnet and the private Ethernet card is named privnet.

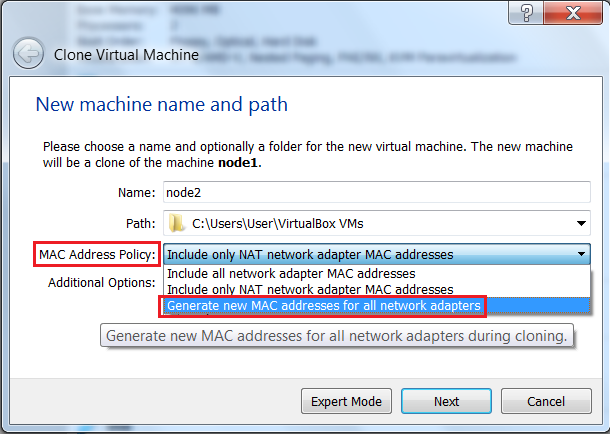

Node1 is now ready. It's time to clone Node1 to create Node2.

In older releases, you had to change the MAC address of every Ethernet card manually, but newer VirtualBox releases have an option to generate new MAC addresses for all Ethernet cards automatically.

You can select either the Full Clone or the Linked Clone option.

Now make the same VirtualBox changes for Node2 as well.

The screenshots below show the MAC addresses generated automatically on Node2.

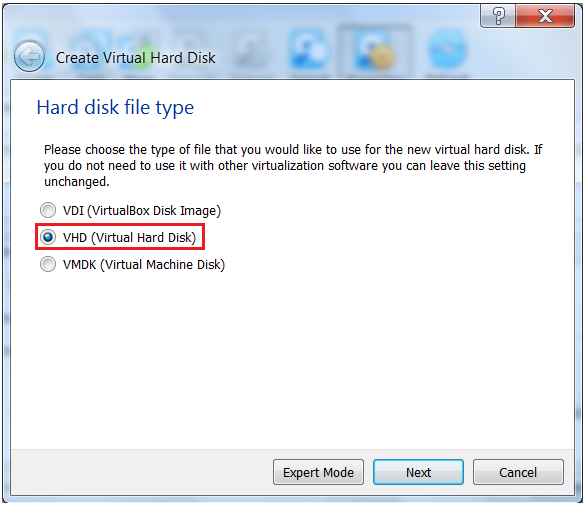

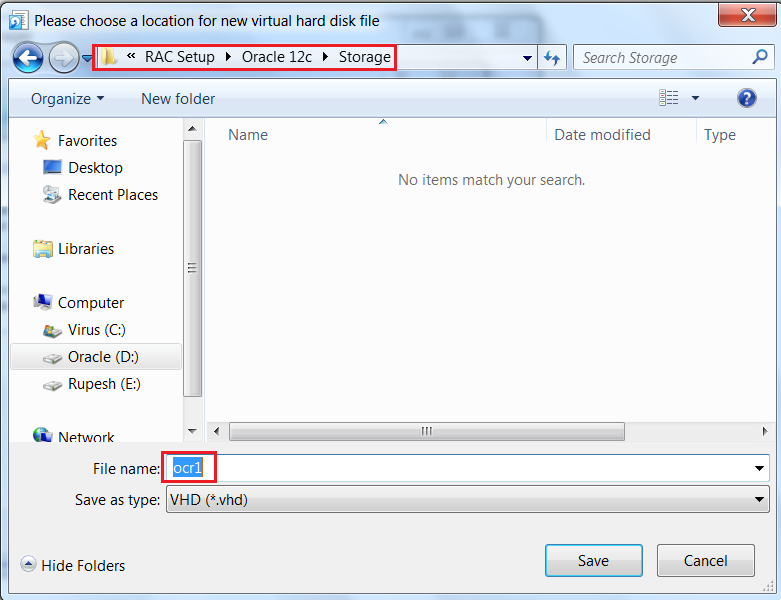

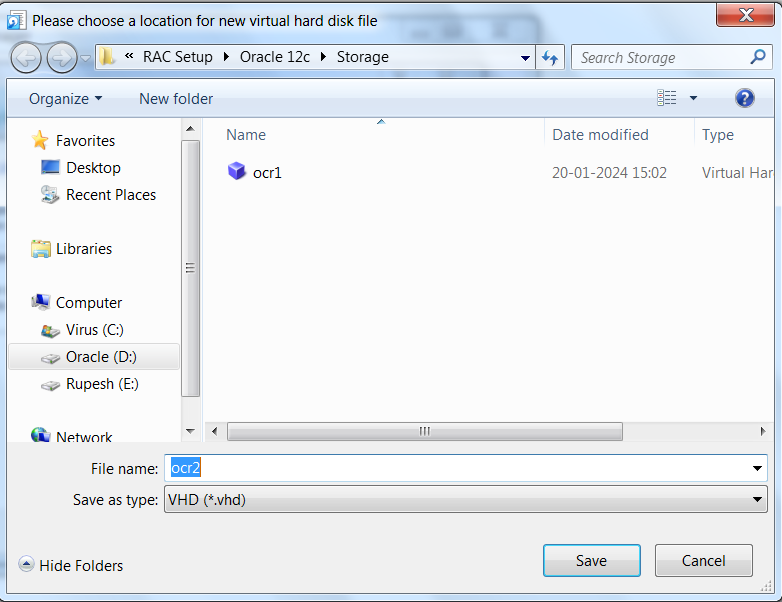

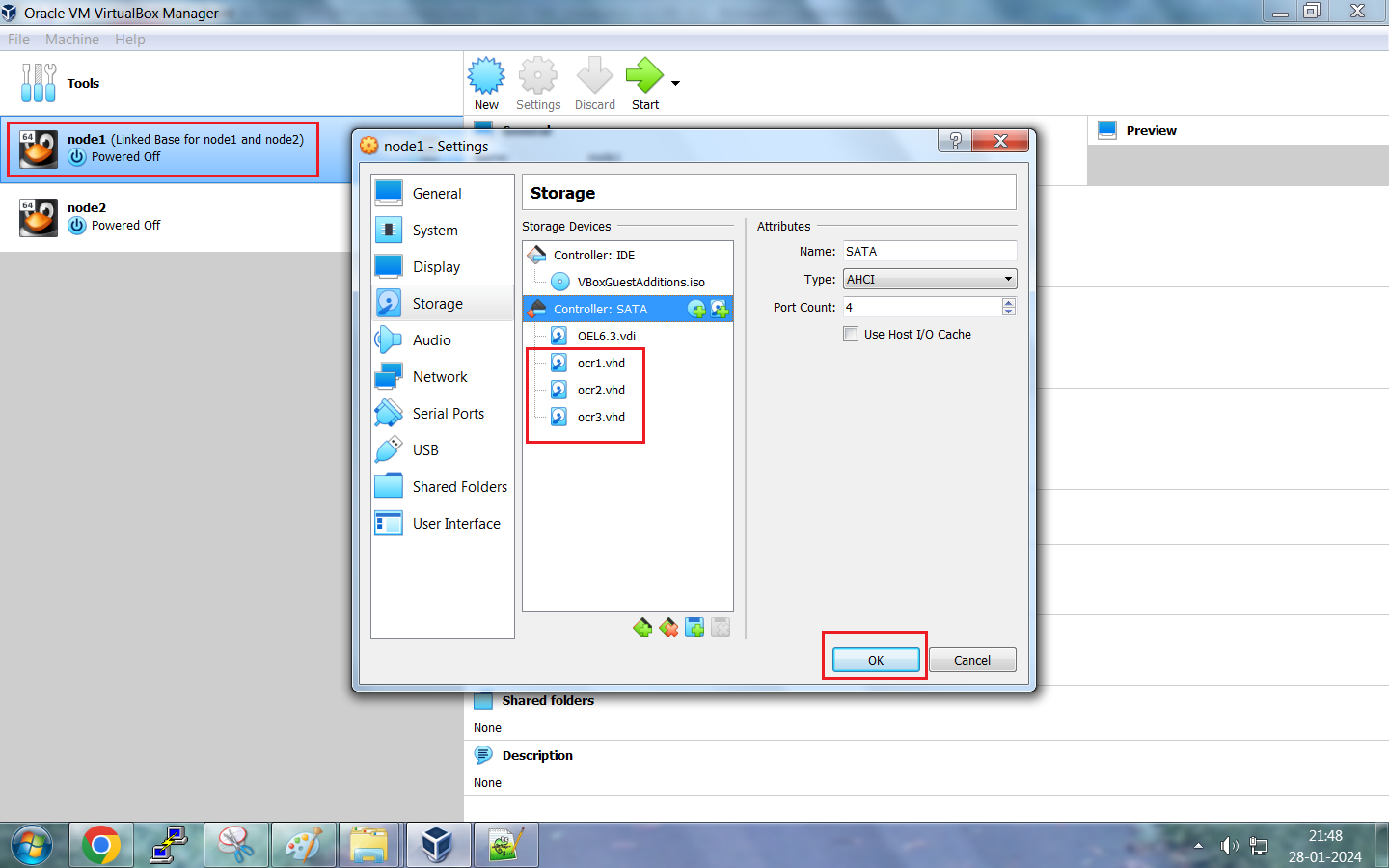

Step 4: Storage Allocation for GRID Installation

As per the storage requirement, create the OCR disks for the GRID installation. Go to File and then click the "Virtual Media Manager" option.

You will get the pop-up box below. Click the Create option and select the disk location on your desktop. Here, I am allocating 10 GB for each OCR disk since this is my testing environment. For an actual production installation, you can allocate more than 10 GB and follow the table above. I have given additional storage with database creation in mind.

Step 5: Linux Server configuration

Make the changes below on both Linux servers to enable bidirectional copy and paste between your desktop and VirtualBox.

Now power off both machines so that the settings take effect.

#Make sure the RPM packages below (or later versions) are installed on both Linux servers.

Oracle Linux 6 and Red Hat Enterprise Linux 6:

- binutils-2.20.51.0.2-5.11.el6 (x86_64)
- compat-libcap1-1.10-1 (x86_64)
- compat-libstdc++-33-3.2.3-69.el6 (x86_64)
- compat-libstdc++-33-3.2.3-69.el6.i686
- gcc-4.4.4-13.el6 (x86_64)
- gcc-c++-4.4.4-13.el6 (x86_64)
- glibc-2.12-1.7.el6 (i686)
- glibc-2.12-1.7.el6 (x86_64)
- glibc-devel-2.12-1.7.el6 (x86_64)
- glibc-devel-2.12-1.7.el6.i686
- ksh
- libgcc-4.4.4-13.el6 (i686)
- libgcc-4.4.4-13.el6 (x86_64)
- libstdc++-4.4.4-13.el6 (x86_64)
- libstdc++-4.4.4-13.el6.i686
- libstdc++-devel-4.4.4-13.el6 (x86_64)
- libstdc++-devel-4.4.4-13.el6.i686
- libaio-0.3.107-10.el6 (x86_64)
- libaio-0.3.107-10.el6.i686
- libaio-devel-0.3.107-10.el6 (x86_64)
- libaio-devel-0.3.107-10.el6.i686
- libXext-1.1 (x86_64)
- libXext-1.1 (i686)
- libXtst-1.0.99.2 (x86_64)
- libXtst-1.0.99.2 (i686)
- libX11-1.3 (x86_64)
- libX11-1.3 (i686)
- libXau-1.0.5 (x86_64)
- libXau-1.0.5 (i686)
- libxcb-1.5 (x86_64)
- libxcb-1.5 (i686)
- libXi-1.3 (x86_64)
- libXi-1.3 (i686)
- make-3.81-19.el6
- sysstat-9.0.4-11.el6 (x86_64)
- nfs-utils-1.2.3-15.0.1
- oracleasm-support-2.1.5-1.el6.x86_64
- oraclelinux-release-6Server-3.0.2.x86_64
- oraclelinux-release-notes-6Server-7.x86_64
- cvuqdisk-1.0.9-1.rpm (Located under GRID_SETUP_LOCATION/rpm)

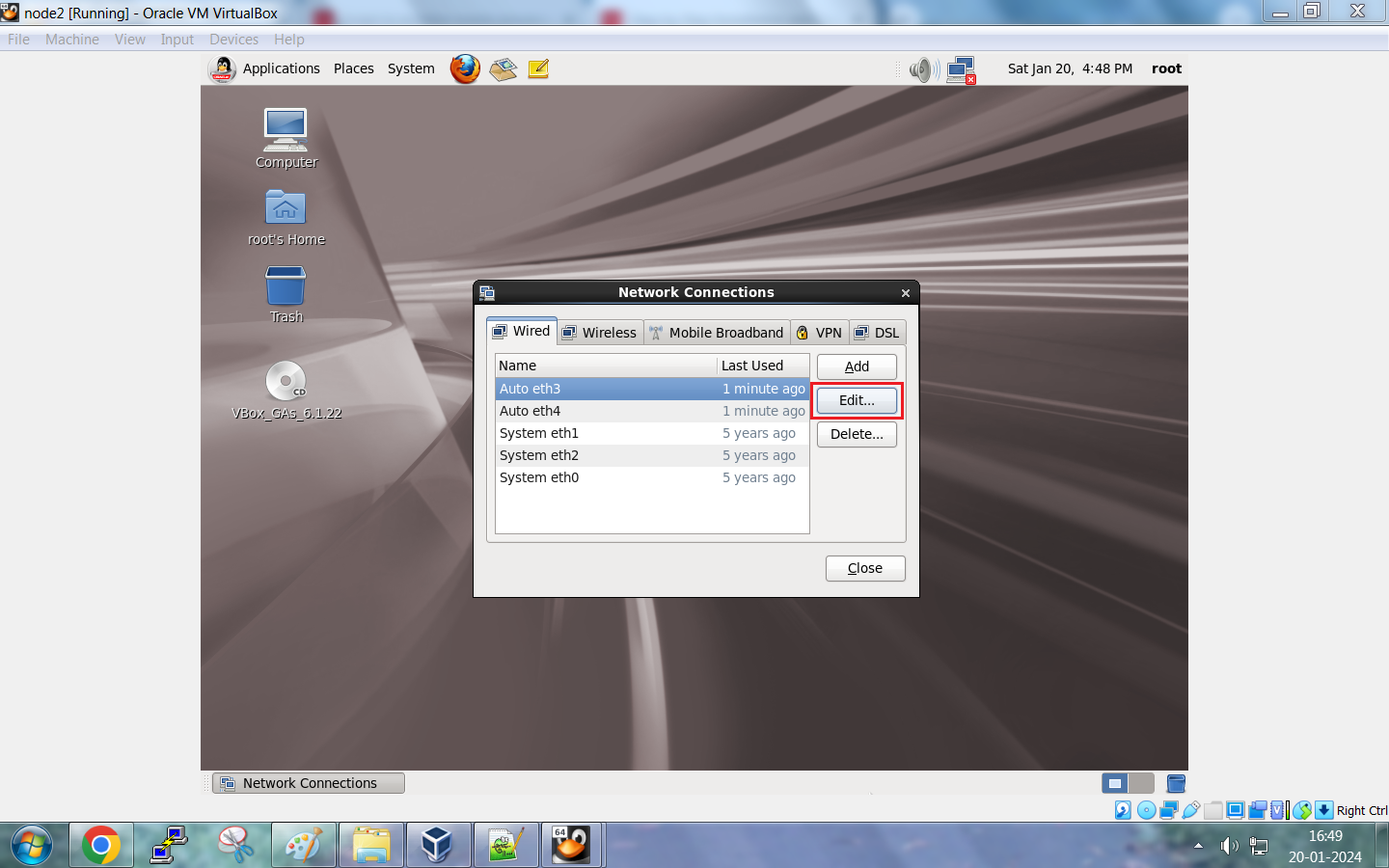

Step 6: Network configuration

#Add the entries below to the /etc/hosts file on both nodes.

    [root@rac1 ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.28.1 rac1.localdomain rac1
    192.168.28.2 rac2.localdomain rac2
    192.168.1.11 rac1-priv.localdomain rac1-priv
    192.168.1.12 rac2-priv.localdomain rac2-priv
    192.168.28.101 rac1-vip.localdomain rac1-vip
    192.168.28.102 rac2-vip.localdomain rac2-vip
    192.168.28.201 rac-scan.localdomain rac-scan
    192.168.28.202 rac-scan.localdomain rac-scan
    192.168.28.203 rac-scan.localdomain rac-scan

    [root@rac2 ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.28.1 rac1.localdomain rac1
    192.168.28.2 rac2.localdomain rac2
    192.168.1.11 rac1-priv.localdomain rac1-priv
    192.168.1.12 rac2-priv.localdomain rac2-priv
    192.168.28.101 rac1-vip.localdomain rac1-vip
    192.168.28.102 rac2-vip.localdomain rac2-vip
    192.168.28.201 rac-scan.localdomain rac-scan
    192.168.28.202 rac-scan.localdomain rac-scan
    192.168.28.203 rac-scan.localdomain rac-scan
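
Because the same entries must exist on both nodes, it can help to confirm that no name was missed. The following is a rough sketch; `missing_hosts` is a hypothetical helper, not a standard utility, and it only checks that each name appears in the file, not that the address is correct.

```shell
# Print every required name that is absent from a hosts-format file.
# $1 = path to the hosts file, remaining arguments = names that must be present.
missing_hosts() (
    file=$1; shift
    for name in "$@"; do
        # Drop comments, then look for the name in any column after the address.
        if ! sed 's/#.*//' "$file" |
             awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                               END { exit !found }'; then
            echo "$name"
        fi
    done
)

# Run on each node; no output means all names are present.
if [ -r /etc/hosts ]; then
    missing_hosts /etc/hosts rac1 rac2 rac1-priv rac2-priv rac1-vip rac2-vip rac-scan
fi
```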

Make the changes below for the public and private Ethernet cards on both Linux servers.

Make similar changes on Node2 as well.

Ensure that SELinux is disabled in the /etc/selinux/config file on both nodes. If it is not, edit the file and make the change.

#cat /etc/selinux/config

#vi /etc/selinux/config
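
As an alternative to editing the file by hand in vi, the same change can be scripted. This is a sketch under the assumption that the file contains a line beginning with `SELINUX=`; the function name `disable_selinux` is made up for illustration, and the change only takes full effect after a reboot.

```shell
# Set SELINUX=disabled in the given config file (default: /etc/selinux/config).
# A copy of the original is kept next to it with a .bak suffix.
# The pattern requires "=" right after SELINUX, so SELINUXTYPE is left alone.
disable_selinux() {
    cfg=${1:-/etc/selinux/config}
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage (as root, on both nodes):
#   disable_selinux
#   grep '^SELINUX=' /etc/selinux/config
```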

Step 7: Mark the OCR disks as shareable

Once the disks are marked as Shareable, attach them to both nodes so that the disks are visible to both nodes.
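
The article performs this step in the VirtualBox GUI. For reference, the same result can usually be reached with the `VBoxManage` command line; the sketch below only prints the commands so they can be reviewed first. The disk file name `ocr1.vdi`, the VM names `rac1` and `rac2`, the controller name `SATA`, and the port number are all assumptions for illustration and must match your own setup. Note that VirtualBox requires a fixed-size image for a shareable disk.

```shell
# Print (do not run) the VBoxManage commands for one shared disk.
# $1 = VDI file, $2 = controller port, remaining arguments = VM names.
share_disk_cmds() (
    vdi=$1; port=$2; shift 2
    echo "VBoxManage modifymedium disk \"$vdi\" --type shareable"
    for vm in "$@"; do
        echo "VBoxManage storageattach \"$vm\" --storagectl SATA --port $port --device 0 --type hdd --medium \"$vdi\" --mtype shareable"
    done
)

# Hypothetical names: one OCR disk attached to both cluster VMs on port 1.
share_disk_cmds ocr1.vdi 1 rac1 rac2
```

Run the printed commands on the host while both virtual machines are powered off, repeating with a different port for each additional disk.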

My GRID+RDBMS software is located in the directory below on my desktop, so I am sharing this folder with both virtual machines to make it accessible from both Linux servers. Refer to the screenshots below to create the shared folder.

Step 8: Server readiness

#User and Group Creation

Node1:

    [root@rac1 ~]# useradd grid
    [root@rac1 ~]# passwd grid
    Changing password for user grid.
    New password:
    BAD PASSWORD: it is too short
    BAD PASSWORD: is too simple
    Retype new password:
    passwd: all authentication tokens updated successfully.
    [root@rac1 ~]# groupadd -g 2000 oinstall
    [root@rac1 ~]# groupadd -g 2200 dba
    [root@rac1 ~]# groupadd -g 2100 asmadmin
    [root@rac1 ~]# groupadd -g 2300 oper
    [root@rac1 ~]# groupadd -g 2400 asmdba
    [root@rac1 ~]# groupadd -g 2500 asmoper
    [root@rac1 ~]# usermod -g oinstall -G asmadmin,dba,oper,asmdba,asmoper grid
    [root@rac1 ~]# usermod -g oinstall -G asmadmin,dba,oper,asmdba,asmoper oracle
    [root@rac1 ~]# id grid
    uid=54323(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),2100(asmadmin),2300(oper),2400(asmdba),2500(asmoper)
    [root@rac1 ~]# id oracle
    uid=54321(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba),2100(asmadmin),2300(oper),2400(asmdba),2500(asmoper)

Node2:

    [root@rac2 ~]# useradd grid
    [root@rac2 ~]# passwd grid
    Changing password for user grid.
    New password:
    BAD PASSWORD: it is too short
    BAD PASSWORD: is too simple
    Retype new password:
    passwd: all authentication tokens updated successfully.
    [root@rac2 ~]# groupadd -g 2000 oinstall
    [root@rac2 ~]# groupadd -g 2200 dba
    [root@rac2 ~]# groupadd -g 2100 asmadmin
    [root@rac2 ~]# groupadd -g 2300 oper
    [root@rac2 ~]# groupadd -g 2400 asmdba
    [root@rac2 ~]# groupadd -g 2500 asmoper
    [root@rac2 ~]# usermod -g oinstall -G asmadmin,dba,oper,asmdba,asmoper grid
    [root@rac2 ~]# usermod -g oinstall -G asmadmin,dba,oper,asmdba,asmoper oracle
    [root@rac2 ~]# id grid
    uid=54323(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),2100(asmadmin),2300(oper),2400(asmdba),2500(asmoper)
    [root@rac2 ~]# id oracle
    uid=54321(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba),2100(asmadmin),2300(oper),2400(asmdba),2500(asmoper)

Note: the id output reports oinstall and dba with GIDs 54321 and 54322 rather than 2000 and 2200, which suggests that these two groups (and the oracle user) already existed on the servers before the commands above were run.

#Directory Creation and Permissions

Node1:

    [root@rac1 ~]# mkdir -p /u01/app/grid
    [root@rac1 ~]# mkdir -p /u01/app/12.1.0.2/grid
    [root@rac1 ~]# mkdir -p /u01/app/oraInventory
    [root@rac1 ~]# mkdir -p /u01/app/oracle
    [root@rac1 ~]# chown -R grid:oinstall /u01/app/grid
    [root@rac1 ~]# chown -R grid:oinstall /u01/app/12.1.0.2/grid
    [root@rac1 ~]# chown -R grid:oinstall /u01/app/oraInventory
    [root@rac1 ~]# chown -R oracle:oinstall /u01/app/oracle
    [root@rac1 ~]# chmod -R 755 /u01/app/grid
    [root@rac1 ~]# chmod -R 755 /u01/app/12.1.0.2/grid
    [root@rac1 ~]# chmod -R 755 /u01/app/oraInventory
    [root@rac1 ~]# chmod -R 755 /u01/app/oracle

Node2:

    [root@rac2 ~]# mkdir -p /u01/app/grid
    [root@rac2 ~]# mkdir -p /u01/app/12.1.0.2/grid
    [root@rac2 ~]# mkdir -p /u01/app/oraInventory
    [root@rac2 ~]# mkdir -p /u01/app/oracle
    [root@rac2 ~]# chown -R grid:oinstall /u01/app/grid
    [root@rac2 ~]# chown -R grid:oinstall /u01/app/12.1.0.2/grid
    [root@rac2 ~]# chown -R grid:oinstall /u01/app/oraInventory
    [root@rac2 ~]# chown -R oracle:oinstall /u01/app/oracle
    [root@rac2 ~]# chmod -R 755 /u01/app/grid
    [root@rac2 ~]# chmod -R 755 /u01/app/12.1.0.2/grid
    [root@rac2 ~]# chmod -R 755 /u01/app/oraInventory
    [root@rac2 ~]# chmod -R 755 /u01/app/oracle

#Kernel and limits parameters

Node1:

    [root@rac1 ~]# vi /etc/security/limits.conf
    grid soft nofile 1024
    grid hard nofile 65536
    grid soft nproc 2047
    grid hard nproc 16384
    grid soft stack 10240
    grid hard stack 32768
    oracle soft nofile 1024
    oracle hard nofile 65536
    oracle soft nproc 2047
    oracle hard nproc 16384
    oracle soft stack 10240
    oracle hard stack 32768

    [root@rac1 ~]# vi /etc/sysctl.conf
    fs.file-max = 6815744
    kernel.sem = 250 32000 100 128
    kernel.shmmni = 4096
    kernel.shmall = 1073741824
    kernel.shmmax = 4398046511104
    net.core.rmem_default = 262144
    net.core.rmem_max = 4194304
    net.core.wmem_default = 262144
    net.core.wmem_max = 1048576
    fs.aio-max-nr = 1048576
    net.ipv4.ip_local_port_range = 9000 65500

    [root@rac1 ~]# sysctl -p

Node2:

    [root@rac2 ~]# vi /etc/security/limits.conf
    grid soft nofile 1024
    grid hard nofile 65536
    grid soft nproc 2047
    grid hard nproc 16384
    grid soft stack 10240
    grid hard stack 32768
    oracle soft nofile 1024
    oracle hard nofile 65536
    oracle soft nproc 2047
    oracle hard nproc 16384
    oracle soft stack 10240
    oracle hard stack 32768

    [root@rac2 ~]# vi /etc/sysctl.conf
    fs.file-max = 6815744
    kernel.sem = 250 32000 100 128
    kernel.shmmni = 4096
    kernel.shmall = 1073741824
    kernel.shmmax = 4398046511104
    net.core.rmem_default = 262144
    net.core.rmem_max = 4194304
    net.core.wmem_default = 262144
    net.core.wmem_max = 1048576
    fs.aio-max-nr = 1048576
    net.ipv4.ip_local_port_range = 9000 65500

    [root@rac2 ~]# sysctl -p
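
After `sysctl -p`, it is worth confirming that the running kernel really carries the values written to /etc/sysctl.conf. The sketch below compares each `key = value` line in a file with the live value. The function name `sysctl_diff` and the `SYSCTL_GET` override variable are hypothetical names introduced for this example; by default the live value is read with `sysctl -n`.

```shell
# Report settings whose live value differs from the "key = value" lines in a file.
# SYSCTL_GET may be set to another command that prints the value for a key;
# it defaults to "sysctl -n".
sysctl_diff() (
    conf=$1
    get=${SYSCTL_GET:-sysctl -n}
    # Skip blank lines and comments, then split each line at the first "=".
    grep -Ev '^[[:space:]]*(#|$)' "$conf" | while IFS='=' read -r key want; do
        key=$(echo $key)                          # trim surrounding whitespace
        want=$(echo $want)                        # trim and squeeze whitespace
        have=$(echo $($get "$key" 2>/dev/null))   # squeeze tabs in live values
        [ "$have" = "$want" ] || echo "$key: expected '$want', found '$have'"
    done
)

# Usage (on each node); no output means every listed setting is active:
#   sysctl_diff /etc/sysctl.conf
```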

#ssh configuration for the grid user on both nodes. Please note that passwordless ssh authentication can be configured either during the GUI installation or manually, as shown here.

Node1:

    [root@rac1 ~]# su - grid
    [grid@rac1 ~]$ cd /home/grid
    [grid@rac1 ~]$ rm -rf .ssh
    [grid@rac1 ~]$ mkdir .ssh
    [grid@rac1 ~]$ chmod 700 .ssh
    [grid@rac1 ~]$ cd .ssh
    [grid@rac1 .ssh]$ ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/grid/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/grid/.ssh/id_rsa.
    Your public key has been saved in /home/grid/.ssh/id_rsa.pub.
    The key fingerprint is:
    a6:11:85:08:15:bc:f8:62:b9:0d:36:7d:10:ae:08:d3 grid@rac1.localdomain
    The key's randomart image is:
    +--[ RSA 2048]----+
    | .+oo .. |
    | + .. |
    | . o o. |
    |o E + . |
    |.o = .. S |
    |. O o .+ |
    | o * .. |
    | . . |
    | |
    +-----------------+
    [grid@rac1 .ssh]$ ssh-keygen -t dsa
    Generating public/private dsa key pair.
    Enter file in which to save the key (/home/grid/.ssh/id_dsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/grid/.ssh/id_dsa.
    Your public key has been saved in /home/grid/.ssh/id_dsa.pub.
    The key fingerprint is:
    91:b2:0f:68:4a:33:1a:21:ba:26:80:4f:9b:cc:39:d5 grid@rac1.localdomain
    The key's randomart image is:
    +--[ DSA 1024]----+
    | |
    | . |
    |o . o |
    |+. o o . |
    |= = + E S |
    |.X X o |
    |+.X . |
    |o . |
    | |
    +-----------------+
    [grid@rac1 .ssh]$ cat *.pub >> authorized_keys.rac1
    [grid@rac1 .ssh]$ ls -ltr
    -rw-r--r-- 1 grid oinstall 403 Jan 28 22:04 id_rsa.pub
    -rw------- 1 grid oinstall 1675 Jan 28 22:04 id_rsa
    -rw-r--r-- 1 grid oinstall 611 Jan 28 22:05 id_dsa.pub
    -rw------- 1 grid oinstall 668 Jan 28 22:05 id_dsa
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:05 authorized_keys.rac1

Node2:

    [root@rac2 ~]# su - grid
    [grid@rac2 ~]$ cd /home/grid
    [grid@rac2 ~]$ rm -rf .ssh
    [grid@rac2 ~]$ mkdir .ssh
    [grid@rac2 ~]$ chmod 700 .ssh
    [grid@rac2 ~]$ cd .ssh
    [grid@rac2 .ssh]$ ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/grid/.ssh/id_rsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/grid/.ssh/id_rsa.
    Your public key has been saved in /home/grid/.ssh/id_rsa.pub.
    The key fingerprint is:
    c8:8e:45:bb:35:a0:21:0d:d9:7e:ad:7d:e6:44:4b:e8 grid@rac2.localdomain
    The key's randomart image is:
    +--[ RSA 2048]----+
    | .o |
    | .o. |
    | ..o o. . |
    | ..=.+o o |
    | ..=+So . |
    | +.oE.= |
    | . o = |
    | . |
    | |
    +-----------------+
    [grid@rac2 .ssh]$ ssh-keygen -t dsa
    Generating public/private dsa key pair.
    Enter file in which to save the key (/home/grid/.ssh/id_dsa):
    Enter passphrase (empty for no passphrase):
    Enter same passphrase again:
    Your identification has been saved in /home/grid/.ssh/id_dsa.
    Your public key has been saved in /home/grid/.ssh/id_dsa.pub.
    The key fingerprint is:
    28:75:ea:64:12:31:28:8d:28:a4:ba:23:74:59:90:8d grid@rac2.localdomain
    The key's randomart image is:
    +--[ DSA 1024]----+
    |o+ o* |
    |* oE.+ |
    |o. ... . |
    |. oo + |
    |.. oo = S |
    |... * |
    |+ . |
    |.. |
    | |
    +-----------------+
    [grid@rac2 .ssh]$ cat *.pub >> authorized_keys.rac2
    [grid@rac2 .ssh]$ ls -ltr
    -rw-r--r-- 1 grid oinstall 403 Jan 28 22:08 id_rsa.pub
    -rw------- 1 grid oinstall 1675 Jan 28 22:08 id_rsa
    -rw-r--r-- 1 grid oinstall 611 Jan 28 22:08 id_dsa.pub
    -rw------- 1 grid oinstall 668 Jan 28 22:08 id_dsa
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:08 authorized_keys.rac2

Node1:

    [grid@rac1 .ssh]$ scp authorized_keys.rac1 grid@rac2:/home/grid/.ssh/
    grid@rac2's password:
    authorized_keys.rac1 100% 1014 1.0KB/s 00:00

Node2:

    [grid@rac2 .ssh]$ scp authorized_keys.rac2 grid@rac1:/home/grid/.ssh/
    grid@rac1's password:
    authorized_keys.rac2 100% 1014 1.0KB/s 00:00

Node1:

    [grid@rac1 .ssh]$ ls -ltr
    -rw-r--r-- 1 grid oinstall 403 Jan 28 22:04 id_rsa.pub
    -rw------- 1 grid oinstall 1675 Jan 28 22:04 id_rsa
    -rw-r--r-- 1 grid oinstall 611 Jan 28 22:05 id_dsa.pub
    -rw------- 1 grid oinstall 668 Jan 28 22:05 id_dsa
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:05 authorized_keys.rac1
    -rw-r--r-- 1 grid oinstall 399 Jan 28 22:05 known_hosts
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:10 authorized_keys.rac2

Node2:

    [grid@rac2 .ssh]$ ls -ltr
    -rw-r--r-- 1 grid oinstall 403 Jan 28 22:08 id_rsa.pub
    -rw------- 1 grid oinstall 1675 Jan 28 22:08 id_rsa
    -rw-r--r-- 1 grid oinstall 611 Jan 28 22:08 id_dsa.pub
    -rw------- 1 grid oinstall 668 Jan 28 22:08 id_dsa
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:08 authorized_keys.rac2
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:09 authorized_keys.rac1
    -rw-r--r-- 1 grid oinstall 399 Jan 28 22:10 known_hosts

Node1:

    [grid@rac1 .ssh]$ cat *.rac* >> authorized_keys
    [grid@rac1 .ssh]$ chmod 600 authorized_keys
    [grid@rac1 .ssh]$ ls -ltr
    -rw-r--r-- 1 grid oinstall 403 Jan 28 22:04 id_rsa.pub
    -rw------- 1 grid oinstall 1675 Jan 28 22:04 id_rsa
    -rw-r--r-- 1 grid oinstall 611 Jan 28 22:05 id_dsa.pub
    -rw------- 1 grid oinstall 668 Jan 28 22:05 id_dsa
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:05 authorized_keys.rac1
    -rw-r--r-- 1 grid oinstall 399 Jan 28 22:05 known_hosts
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:10 authorized_keys.rac2
    -rw------- 1 grid oinstall 2028 Jan 28 22:12 authorized_keys

Node2:

    [grid@rac2 .ssh]$ cat *.rac* >> authorized_keys
    [grid@rac2 .ssh]$ chmod 600 authorized_keys
    [grid@rac2 .ssh]$ ls -ltr
    -rw-r--r-- 1 grid oinstall 403 Jan 28 22:08 id_rsa.pub
    -rw------- 1 grid oinstall 1675 Jan 28 22:08 id_rsa
    -rw-r--r-- 1 grid oinstall 611 Jan 28 22:08 id_dsa.pub
    -rw------- 1 grid oinstall 668 Jan 28 22:08 id_dsa
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:08 authorized_keys.rac2
    -rw-r--r-- 1 grid oinstall 1014 Jan 28 22:09 authorized_keys.rac1
    -rw-r--r-- 1 grid oinstall 399 Jan 28 22:10 known_hosts
    -rw------- 1 grid oinstall 2028 Jan 28 22:13 authorized_keys

You can test the ssh connections as below:

- From Node1: ssh rac1
- From Node1: ssh rac2
- From Node2: ssh rac2
- From Node2: ssh rac1

Node1:

    [grid@rac1 ~]$ id
    uid=54323(grid) gid=54321(oinstall) groups=54321(oinstall),2100(asmadmin),2300(oper),2400(asmdba),2500(asmoper),54322(dba)
    [grid@rac1 ~]$ ssh rac1
    The authenticity of host 'rac1 (192.168.28.1)' can't be established.
    RSA key fingerprint is f8:9d:df:cf:65:f2:74:2c:a6:38:05:64:2b:b9:fc:8e.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'rac1,192.168.28.1' (RSA) to the list of known hosts.
    [grid@rac1 ~]$ ssh rac1
    Last login: Sun Jan 28 22:13:56 2024 from rac1
    [grid@rac1 ~]$ ssh rac2
    [grid@rac2 ~]$ ssh rac1
    Last login: Sun Jan 28 22:14:00 2024 from rac1

Node2:

    [grid@rac2 ~]$ id
    uid=54323(grid) gid=54321(oinstall) groups=54321(oinstall),2100(asmadmin),2300(oper),2400(asmdba),2500(asmoper),54322(dba)
    [grid@rac2 ~]$ ssh rac2
    The authenticity of host 'rac2 (192.168.28.2)' can't be established.
    RSA key fingerprint is f8:9d:df:cf:65:f2:74:2c:a6:38:05:64:2b:b9:fc:8e.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'rac2,192.168.28.2' (RSA) to the list of known hosts.
    Last login: Sun Jan 28 22:14:03 2024 from rac1
    [grid@rac2 ~]$ ssh rac2
    Last login: Sun Jan 28 22:14:28 2024 from rac2
    [grid@rac2 ~]$ ssh rac1
    Last login: Sun Jan 28 22:14:08 2024 from rac2
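
The manual ssh tests above can also be run as one non-interactive check from each node. This is a sketch, not part of the Oracle tooling: `check_ssh` and the `SSH_CMD` override are hypothetical names, while `BatchMode` and `ConnectTimeout` are standard OpenSSH client options. With `BatchMode=yes`, ssh fails instead of prompting for a password, so a host that still needs a password or a host-key confirmation is reported as FAILED.

```shell
# Attempt a passwordless login to every listed host and report the result.
# SSH_CMD may name a different client command; it defaults to ssh.
check_ssh() (
    rc=0
    for host in "$@"; do
        if ${SSH_CMD:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
            echo "$host: OK"
        else
            echo "$host: FAILED"
            rc=1
        fi
    done
    exit $rc
)

# Usage (as the grid user, on Node1 and again on Node2):
#   check_ssh rac1 rac2
```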

#Install RPM "cvuqdisk-1.0.9-1.rpm"

    [root@rac1 ~]# cd /u01/setup/grid/grid/rpm/
    [root@rac1 rpm]# ls -ltr
    -rwxr-xr-x 1 grid oinstall 8976 Jul 1 2014 cvuqdisk-1.0.9-1.rpm
    [root@rac1 rpm]# rpm -ivh cvuqdisk-1.0.9-1.rpm
    Preparing... ########################################### [100%]
    Using default group oinstall to install package
    1:cvuqdisk ########################################### [100%]
    [root@rac1 rpm]# scp cvuqdisk-1.0.9-1.rpm rac2:/tmp

    [root@rac2 tmp]# rpm -ivh cvuqdisk-1.0.9-1.rpm
    Preparing... ########################################### [100%]
    Using default group oinstall to install package
    1:cvuqdisk ########################################### [100%]

#Move the "resolv.conf" file to avoid DNS-related errors.

    [root@rac1 ~]# mv /etc/resolv.conf /etc/resolv.conf_bkp
    [root@rac2 ~]# mv /etc/resolv.conf /etc/resolv.conf_bkp

#Add the parameter "NOZEROCONF=yes"

    [root@rac1 ~]# cat /etc/sysconfig/network
    NETWORKING=yes
    HOSTNAME=rac1.localdomain
    NOZEROCONF=yes

    [root@rac2 ~]# cat /etc/sysconfig/network
    NETWORKING=yes
    HOSTNAME=rac2.localdomain
    NOZEROCONF=yes

#Ensure "avahi-daemon" is not running.

    [root@rac1 grid]# service avahi-daemon status
    avahi-daemon (pid 2068) is running...
    [root@rac1 grid]# service avahi-daemon stop
    Shutting down Avahi daemon: [ OK ]
    [root@rac1 grid]# chkconfig avahi-daemon off

    [root@rac2 grid]# service avahi-daemon status
    avahi-daemon (pid 3054) is running...
    [root@rac2 grid]# service avahi-daemon stop
    Shutting down Avahi daemon: [ OK ]
    [root@rac2 grid]# chkconfig avahi-daemon off

#Ensure the firewall is not running.

    [root@rac1 network-scripts]# service iptables stop
    iptables: Flushing firewall rules: [ OK ]
    iptables: Setting chains to policy ACCEPT: nat mangle filte[ OK ]
    iptables: Unloading modules: [ OK ]
    [root@rac1 network-scripts]# service iptables status
    iptables: Firewall is not running.
    [root@rac1 network-scripts]# chkconfig iptables off

    [root@rac2 ~]# service iptables stop
    iptables: Flushing firewall rules: [ OK ]
    iptables: Setting chains to policy ACCEPT: nat mangle filte[ OK ]
    iptables: Unloading modules: [ OK ]
    [root@rac2 ~]# service iptables status
    iptables: Firewall is not running.
    [root@rac2 ~]# chkconfig iptables off

#Ensure NTPD is stopped.

    [root@rac1 ~]# service ntpd status
    [root@rac1 ~]# service ntpd stop
    [root@rac1 ~]# chkconfig ntpd off
    [root@rac1 ~]# mv /etc/ntp.conf /etc/ntp.conf.orig
    [root@rac1 ~]# rm /var/run/ntpd.pid

    [root@rac2 ~]# service ntpd status
    [root@rac2 ~]# service ntpd stop
    [root@rac2 ~]# chkconfig ntpd off
    [root@rac2 ~]# mv /etc/ntp.conf /etc/ntp.conf.orig
    [root@rac2 ~]# rm /var/run/ntpd.pid

#Create partitions on the allocated OCR disks. Execute these commands from any one node. There is no need to execute them on both nodes since these are shared devices.

    [root@rac1 ~]# id
    uid=0(root) gid=0(root) groups=0(root),492(sfcb)
    [root@rac1 ~]# fdisk -l
    Disk /dev/sdb: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000
    Disk /dev/sdc: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000
    Disk /dev/sda: 64.4 GB, 64424509440 bytes
    255 heads, 63 sectors/track, 7832 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x0004fb69
    Device Boot Start End Blocks Id System
    /dev/sda1 * 1 64 512000 83 Linux
    Partition 1 does not end on cylinder boundary.
    /dev/sda2 64 7833 62401536 8e Linux LVM
    Disk /dev/sdd: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000
    Disk /dev/mapper/vg_rac1-lv_root: 53.7 GB, 53687091200 bytes
    255 heads, 63 sectors/track, 6527 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000
    Disk /dev/mapper/vg_rac1-lv_swap: 4160 MB, 4160749568 bytes
    255 heads, 63 sectors/track, 505 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000
    Disk /dev/mapper/vg_rac1-lv_home: 6048 MB, 6048186368 bytes
    255 heads, 63 sectors/track, 735 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

From the above output, we can see that the OCR disk devices are /dev/sdb, /dev/sdc, and /dev/sdd. Use the commands below to create a partition on each disk.

    [root@rac1 ~]# fdisk /dev/sdb
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel with disk identifier 0xcbefd982.
    Changes will remain in memory only, until you decide to write them.
    After that, of course, the previous content won't be recoverable.
    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)
    WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
    switch off the mode (command 'c') and change display units to
    sectors (command 'u').
    Command (m for help): n
    Command action
    e extended
    p primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-1305, default 1):
    Using default value 1
    Last cylinder, +cylinders or +size{K,M,G} (1-1305, default 1305):
    Using default value 1305
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    Syncing disks.
    [root@rac1 ~]# fdisk -l /dev/sdb
    Disk /dev/sdb: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xcbefd982
    Device Boot Start End Blocks Id System
    /dev/sdb1 1 1305 10482381 83 Linux

    [root@rac1 ~]# fdisk /dev/sdc
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel with disk identifier 0x3e4c8b4b.
    Changes will remain in memory only, until you decide to write them.
    After that, of course, the previous content won't be recoverable.
    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)
    WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
    switch off the mode (command 'c') and change display units to
    sectors (command 'u').
    Command (m for help): n
    Command action
    e extended
    p primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-1305, default 1):
    Using default value 1
    Last cylinder, +cylinders or +size{K,M,G} (1-1305, default 1305):
    Using default value 1305
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    Syncing disks.
    [root@rac1 ~]# fdisk -l /dev/sdc
    Disk /dev/sdc: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x3e4c8b4b
    Device Boot Start End Blocks Id System
    /dev/sdc1 1 1305 10482381 83 Linux

    [root@rac1 ~]# fdisk /dev/sdd
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel with disk identifier 0xa4cfdb6d.
    Changes will remain in memory only, until you decide to write them.
    After that, of course, the previous content won't be recoverable.
    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)
    WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
    switch off the mode (command 'c') and change display units to
    sectors (command 'u').
    Command (m for help): n
    Command action
    e extended
    p primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-1305, default 1):
    Using default value 1
    Last cylinder, +cylinders or +size{K,M,G} (1-1305, default 1305):
    Using default value 1305
    Command (m for help): w
    The partition table has been altered!
    Calling ioctl() to re-read partition table.
    Syncing disks.
    [root@rac1 ~]# fdisk -l /dev/sdd
    Disk /dev/sdd: 10.7 GB, 10737418240 bytes
    255 heads, 63 sectors/track, 1305 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xa4cfdb6d
    Device Boot Start End Blocks Id System
    /dev/sdd1 1 1305 10482381 83 Linux
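
The three interactive fdisk sessions above use identical answers, so they can be fed to fdisk from a script instead of being typed. This is only a sketch: `fdisk_answers` is a hypothetical helper that reproduces the keystrokes from the transcript (n, p, 1, two defaults, w), and it assumes each disk is blank and that fdisk asks the same questions in the same order as shown above. Double-check the device names first, because writing a partition table to the wrong disk is destructive.

```shell
# Emit the answers typed in the interactive sessions:
# n (new), p (primary), 1 (partition number),
# two empty lines (accept default first and last cylinder), w (write).
fdisk_answers() {
    printf 'n\np\n1\n\n\nw\n'
}

# Usage (as root, from ONE node only, on blank shared disks):
#   for disk in /dev/sdb /dev/sdc /dev/sdd; do
#       fdisk_answers | fdisk "$disk"
#   done
```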

#oracleasm configuration

You can check the oracleasm status before creating the ASM disks. Execute the commands below on both nodes.

Node1:

    [root@rac1 ~]# oracleasm status
    Checking if ASM is loaded: no
    Checking if /dev/oracleasm is mounted: no
    [root@rac1 ~]# oracleasm init
    Creating /dev/oracleasm mount point: /dev/oracleasm
    Loading module "oracleasm": oracleasm
    Mounting ASMlib driver filesystem: /dev/oracleasm
    [root@rac1 ~]# oracleasm status
    Checking if ASM is loaded: yes
    Checking if /dev/oracleasm is mounted: yes
    [root@rac1 ~]# oracleasm configure -i
    Configuring the Oracle ASM library driver.
    This will configure the on-boot properties of the Oracle ASM library
    driver. The following questions will determine whether the driver is
    loaded on boot and what permissions it will have. The current values
    will be shown in brackets ('[]'). Hitting <ENTER> without typing an
    answer will keep that current value. Ctrl-C will abort.
    Default user to own the driver interface []: grid
    Default group to own the driver interface []: oinstall
    Start Oracle ASM library driver on boot (y/n) [n]: y
    Scan for Oracle ASM disks on boot (y/n) [y]: y
    Writing Oracle ASM library driver configuration: done

Node2:

    [root@rac2 ~]# oracleasm status
    Checking if ASM is loaded: no
    Checking if /dev/oracleasm is mounted: no
    [root@rac2 ~]# oracleasm init
    Creating /dev/oracleasm mount point: /dev/oracleasm
    Loading module "oracleasm": oracleasm
    Mounting ASMlib driver filesystem: /dev/oracleasm
    [root@rac2 ~]# oracleasm status
    Checking if ASM is loaded: yes
    Checking if /dev/oracleasm is mounted: yes
    [root@rac2 ~]# oracleasm configure -i
    Configuring the Oracle ASM library driver.
    This will configure the on-boot properties of the Oracle ASM library
    driver. The following questions will determine whether the driver is
    loaded on boot and what permissions it will have. The current values
    will be shown in brackets ('[]'). Hitting <ENTER> without typing an
    answer will keep that current value. Ctrl-C will abort.
    Default user to own the driver interface []: grid
    Default group to own the driver interface []: oinstall
    Start Oracle ASM library driver on boot (y/n) [n]: y
    Scan for Oracle ASM disks on boot (y/n) [y]: y
    Writing Oracle ASM library driver configuration: done

#Create ASM disks on the partitions created above. Do not execute the "createdisk" command on all the nodes. Execute "createdisk" from any one node, then execute "scandisks" on the other node to detect the disks.

Node1:

    [root@rac1 ~]# id
    uid=0(root) gid=0(root) groups=0(root),492(sfcb)
    [root@rac1 ~]# oracleasm createdisk OCRDISK1 /dev/sdb1
    Writing disk header: done
    Instantiating disk: done
    [root@rac1 ~]# oracleasm createdisk OCRDISK2 /dev/sdc1
    Writing disk header: done
    Instantiating disk: done
    [root@rac1 ~]# oracleasm createdisk OCRDISK3 /dev/sdd1
    Writing disk header: done
    Instantiating disk: done
    [root@rac1 ~]# oracleasm listdisks
    OCRDISK1
    OCRDISK2
    OCRDISK3

Node2:

    [root@rac2 ~]# id
    uid=0(root) gid=0(root) groups=0(root),492(sfcb)
    [root@rac2 ~]# oracleasm listdisks

The listdisks command above returns nothing on Node2 because the newly created disks have not been scanned there yet; the scandisks command is required the first time.

    [root@rac2 ~]# oracleasm scandisks
    Reloading disk partitions: done
    Cleaning any stale ASM disks...
    Scanning system for ASM disks...
    Instantiating disk "OCRDISK1"
    Instantiating disk "OCRDISK2"
    Instantiating disk "OCRDISK3"
    [root@rac2 ~]# oracleasm listdisks
    OCRDISK1
    OCRDISK2
    OCRDISK3
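
When there are more disks than the three used here, generating the `oracleasm createdisk` commands from a list avoids typing mistakes in the labels. The sketch below only prints the commands; `asm_createdisk_cmds` is a hypothetical helper, and the label prefix and partition names are taken from the transcript above.

```shell
# Print one "oracleasm createdisk" command per partition,
# numbering the disk labels from 1 (PREFIX1, PREFIX2, ...).
asm_createdisk_cmds() (
    prefix=$1; shift
    n=1
    for part in "$@"; do
        echo "oracleasm createdisk ${prefix}${n} ${part}"
        n=$((n + 1))
    done
)

# Prints the same three commands that were run by hand on Node1.
asm_createdisk_cmds OCRDISK /dev/sdb1 /dev/sdc1 /dev/sdd1
```

After reviewing the printed commands, run them as root on one node only, then run `oracleasm scandisks` on the other node as described above.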

Step 9: Grid Installation

#Login as grid user and unzip the GRID Infrastructure Setup located under /u01/setup/grid.

    [grid@rac1 ~]$ id
    uid=54323(grid) gid=54321(oinstall) groups=54321(oinstall),2100(asmadmin),2300(oper),2400(asmdba),2500(asmoper),54322(dba)
    [grid@rac1 ~]$ cd /u01/setup/grid/
    [grid@rac1 grid]$ ls -ltr
    -rwxr-xr-x 1 grid oinstall 1747043545 Jan 28 22:44 V46096_oracle_grid_oracle_grid_01_1_of_2.zip
    -rwxr-xr-x 1 grid oinstall 646972897 Jan 28 22:44 V46096_oracle_grid_oracle_grid_01_2_of_2.zip
    [grid@rac1 grid]$ unzip V46096_oracle_grid_oracle_grid_01_1_of_2.zip
    Archive: V46096_oracle_grid_oracle_grid_01_1_of_2.zip
    creating: grid/
    creating: grid/rpm/
    inflating: grid/rpm/cvuqdisk-1.0.9-1.rpm
    creating: grid/response/
    inflating: grid/response/grid_install.rsp
    creating: grid/sshsetup/
    inflating: grid/sshsetup/sshUserSetup.sh
    inflating: grid/runInstaller
    inflating: grid/welcome.html
    creating: grid/install/
    creating: grid/install/resource/
    inflating: grid/install/resource/cons_es.nls
    inflating: grid/install/resource/cons.nls
    inflating: grid/install/resource/cons_ko.nls
    .....
    creating: grid/stage/sizes/
    inflating: grid/stage/sizes/oracle.crs12.1.0.2.0Complete.sizes.properties
    extracting: grid/stage/sizes/oracle.crs.Complete.sizes.properties
    inflating: grid/stage/sizes/oracle.crs.12.1.0.2.0.sizes.properties
    [grid@rac1 grid]$ unzip V46096_oracle_grid_oracle_grid_01_2_of_2.zip
    Archive: V46096_oracle_grid_oracle_grid_01_2_of_2.zip
    creating: grid/stage/Components/oracle.has.crs/
    creating: grid/stage/Components/oracle.has.crs/12.1.0.2.0/
    creating: grid/stage/Components/oracle.has.crs/12.1.0.2.0/1/
    creating: grid/stage/Components/oracle.has.crs/12.1.0.2.0/1/DataFiles/
    inflating: grid/stage/Components/oracle.has.crs/12.1.0.2.0/1/DataFiles/filegroup65.jar
    inflating: grid/stage/Components/oracle.has.crs/12.1.0.2.0/1/DataFiles/filegroup3.jar
    inflating: grid/stage/Components/oracle.has.crs/12.1.0.2.0/1/DataFiles/filegroup6.jar
    .....
    inflating: grid/stage/Components/oracle.rdbms/12.1.0.2.0/1/DataFiles/filegroup66.jar
    inflating: grid/stage/Components/oracle.rdbms/12.1.0.2.0/1/DataFiles/filegroup4.jar
    inflating: grid/stage/Components/oracle.rdbms/12.1.0.2.0/1/DataFiles/filegroup19.jar
    inflating: grid/install/.oui
    [grid@rac1 grid]$ ls -ltr
    drwxr-xr-x 7 grid oinstall 4096 Jul 7 2014 grid
    -rwxr-xr-x 1 grid oinstall 1747043545 Jan 28 22:44 V46096_oracle_grid_oracle_grid_01_1_of_2.zip
    -rwxr-xr-x 1 grid oinstall 646972897 Jan 28 22:44 V46096_oracle_grid_oracle_grid_01_2_of_2.zip
    [grid@rac1 grid]$ cd /u01/setup/grid/grid
    [grid@rac1 grid]$ ls -ltr
    -rwxr-xr-x 1 grid oinstall 500 Feb 7 2013 welcome.html
    -rwxr-xr-x 1 grid oinstall 5085 Dec 20 2013 runcluvfy.sh
    -rwxr-xr-x 1 grid oinstall 8534 Jul 7 2014 runInstaller
    drwxr-xr-x 2 grid oinstall 4096 Jul 7 2014 rpm
    drwxrwxr-x 2 grid oinstall 4096 Jul 7 2014 sshsetup
    drwxrwxr-x 2 grid oinstall 4096 Jul 7 2014 response
    drwxr-xr-x 14 grid oinstall 4096 Jul 7 2014 stage
    drwxr-xr-x 4 grid oinstall 4096 Jan 28 22:53 install

#Login as grid user and run cluvfy before starting the GRID Infrastructure Installation

    [grid@rac1 grid]$ id
    uid=54323(grid) gid=54321(oinstall) groups=54321(oinstall),2100(asmadmin),2300(oper),2400(asmdba),2500(asmoper),54322(dba)
    [grid@rac1 grid]$ pwd
    /u01/setup/grid/grid
    [grid@rac1 grid]$ ls -ltr
    -rwxr-xr-x 1 grid oinstall 500 Feb 7 2013 welcome.html
    -rwxr-xr-x 1 grid oinstall 5085 Dec 20 2013 runcluvfy.sh
    -rwxr-xr-x 1 grid oinstall 8534 Jul 7 2014 runInstaller
    drwxr-xr-x 2 grid oinstall 4096 Jul 7 2014 rpm
    drwxrwxr-x 2 grid oinstall 4096 Jul 7 2014 sshsetup
    drwxrwxr-x 2 grid oinstall 4096 Jul 7 2014 response
    drwxr-xr-x 14 grid oinstall 4096 Jul 7 2014 stage
    drwxr-xr-x 4 grid oinstall 4096 Jan 28 22:53 install
    [grid@rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose
    Performing pre-checks for cluster services setup
    Checking node reachability...
    Check: Node reachability from node "rac1"
    Destination Node                      Reachable?
------------------------------------ ------------------------ rac1 yes rac2 yes Result: Node reachability check passed from node "rac1" Checking user equivalence... Check: User equivalence for user "grid" Node Name Status ------------------------------------ ------------------------ rac2 passed rac1 passed Result: User equivalence check passed for user "grid" Checking node connectivity... Checking hosts config file... Node Name Status ------------------------------------ ------------------------ rac1 passed rac2 passed Verification of the hosts config file successful Interface information for node "rac1" Name IP Address Subnet Gateway Def. Gateway HW Address MTU ------ --------------- --------------- --------------- --------------- ----------------- ------ eth3 192.168.28.1 192.168.28.0 0.0.0.0 UNKNOWN 08:00:27:DB:07:5B 1500 eth4 192.168.1.11 192.168.1.0 0.0.0.0 UNKNOWN 08:00:27:12:9F:38 1500 virbr0 192.168.122.1 192.168.122.0 0.0.0.0 UNKNOWN 52:54:00:DB:BD:CE 1500 Interface information for node "rac2" Name IP Address Subnet Gateway Def. Gateway HW Address MTU ------ --------------- --------------- --------------- --------------- ----------------- ------ eth3 192.168.28.2 192.168.28.0 0.0.0.0 UNKNOWN 08:00:27:21:86:E3 1500 eth4 192.168.1.12 192.168.1.0 0.0.0.0 UNKNOWN 08:00:27:8F:9F:B2 1500 virbr0 192.168.122.1 192.168.122.0 0.0.0.0 UNKNOWN 52:54:00:DB:BD:CE 1500 Check: Node connectivity of subnet "192.168.28.0" Source Destination Connected? ------------------------------ ------------------------------ ---------------- rac1[192.168.28.1] rac2[192.168.28.2] yes Result: Node connectivity passed for subnet "192.168.28.0" with node(s) rac1,rac2 Check: TCP connectivity of subnet "192.168.28.0" Source Destination Connected? 
------------------------------ ------------------------------ ---------------- rac1 : 192.168.28.1 rac1 : 192.168.28.1 passed rac2 : 192.168.28.2 rac1 : 192.168.28.1 passed rac1 : 192.168.28.1 rac2 : 192.168.28.2 passed rac2 : 192.168.28.2 rac2 : 192.168.28.2 passed Result: TCP connectivity check passed for subnet "192.168.28.0" Check: Node connectivity of subnet "192.168.1.0" Source Destination Connected? ------------------------------ ------------------------------ ---------------- rac1[192.168.1.11] rac2[192.168.1.12] yes Result: Node connectivity passed for subnet "192.168.1.0" with node(s) rac1,rac2 Check: TCP connectivity of subnet "192.168.1.0" Source Destination Connected? ------------------------------ ------------------------------ ---------------- rac1 : 192.168.1.11 rac1 : 192.168.1.11 passed rac2 : 192.168.1.12 rac1 : 192.168.1.11 passed rac1 : 192.168.1.11 rac2 : 192.168.1.12 passed rac2 : 192.168.1.12 rac2 : 192.168.1.12 passed Result: TCP connectivity check passed for subnet "192.168.1.0" Check: Node connectivity of subnet "192.168.122.0" Source Destination Connected? ------------------------------ ------------------------------ ---------------- rac1[192.168.122.1] rac2[192.168.122.1] yes Result: Node connectivity passed for subnet "192.168.122.0" with node(s) rac1,rac2 Check: TCP connectivity of subnet "192.168.122.0" Source Destination Connected? 
------------------------------ ------------------------------ ---------------- rac1 : 192.168.122.1 rac1 : 192.168.122.1 failed ERROR: PRVG-11850 : The system call "connect" failed with error "111" while executing exectask on node "rac1" Connection refused rac2 : 192.168.122.1 rac1 : 192.168.122.1 passed rac1 : 192.168.122.1 rac2 : 192.168.122.1 failed ERROR: PRVG-11850 : The system call "connect" failed with error "111" while executing exectask on node "rac1" Connection refused rac2 : 192.168.122.1 rac2 : 192.168.122.1 passed Result: TCP connectivity check failed for subnet "192.168.122.0" Interfaces found on subnet "192.168.28.0" that are likely candidates for a private interconnect are: rac1 eth3:192.168.28.1 rac2 eth3:192.168.28.2 Interfaces found on subnet "192.168.1.0" that are likely candidates for a private interconnect are: rac1 eth4:192.168.1.11 rac2 eth4:192.168.1.12 WARNING: Could not find a suitable set of interfaces for VIPs Checking subnet mask consistency... Subnet mask consistency check passed for subnet "192.168.28.0". Subnet mask consistency check passed for subnet "192.168.1.0". Subnet mask consistency check passed for subnet "192.168.122.0". Subnet mask consistency check passed. ERROR: PRVG-1172 : The IP address "192.168.122.1" is on multiple interfaces "virbr0" on nodes "rac1,rac2" Result: Node connectivity check failed Checking multicast communication... Checking subnet "192.168.28.0" for multicast communication with multicast group "224.0.0.251"... Check of subnet "192.168.28.0" for multicast communication with multicast group "224.0.0.251" passed. Check of multicast communication passed. Checking ASMLib configuration. Node Name Status ------------------------------------ ------------------------ rac1 passed rac2 passed Result: Check for ASMLib configuration passed. 
Check: Total memory Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 3.7425GB (3924288.0KB) 4GB (4194304.0KB) failed rac1 3.7425GB (3924288.0KB) 4GB (4194304.0KB) failed Result: Total memory check failed Check: Available memory Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 3.3636GB (3527016.0KB) 50MB (51200.0KB) passed rac1 3.3532GB (3516076.0KB) 50MB (51200.0KB) passed Result: Available memory check passed Check: Swap space Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 3.875GB (4063228.0KB) 3.7425GB (3924288.0KB) passed rac1 3.875GB (4063228.0KB) 3.7425GB (3924288.0KB) passed Result: Swap space check passed Check: Free disk space for "rac2:/usr,rac2:/var,rac2:/etc,rac2:/sbin" Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /usr rac2 / 41.7568GB 65MB passed /var rac2 / 41.7568GB 65MB passed /etc rac2 / 41.7568GB 65MB passed /sbin rac2 / 41.7568GB 65MB passed Result: Free disk space check passed for "rac2:/usr,rac2:/var,rac2:/etc,rac2:/sbin" Check: Free disk space for "rac1:/usr,rac1:/var,rac1:/etc,rac1:/sbin" Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /usr rac1 / 38.4236GB 65MB passed /var rac1 / 38.4236GB 65MB passed /etc rac1 / 38.4236GB 65MB passed /sbin rac1 / 38.4236GB 65MB passed Result: Free disk space check passed for "rac1:/usr,rac1:/var,rac1:/etc,rac1:/sbin" Check: Free disk space for "rac2:/tmp" Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /tmp rac2 /tmp 41.7568GB 1GB passed Result: Free disk space check passed for "rac2:/tmp" Check: Free disk space for "rac1:/tmp" Path Node Name Mount 
point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /tmp rac1 /tmp 38.4229GB 1GB passed Result: Free disk space check passed for "rac1:/tmp" Check: User existence for "grid" Node Name Status Comment ------------ ------------------------ ------------------------ rac2 passed exists(54323) rac1 passed exists(54323) Checking for multiple users with UID value 54323 Result: Check for multiple users with UID value 54323 passed Result: User existence check passed for "grid" Check: Group existence for "oinstall" Node Name Status Comment ------------ ------------------------ ------------------------ rac2 passed exists rac1 passed exists Result: Group existence check passed for "oinstall" Check: Group existence for "dba" Node Name Status Comment ------------ ------------------------ ------------------------ rac2 passed exists rac1 passed exists Result: Group existence check passed for "dba" Check: Membership of user "grid" in group "oinstall" [as Primary] Node Name User Exists Group Exists User in Group Primary Status ---------------- ------------ ------------ ------------ ------------ ------------ rac2 yes yes yes yes passed rac1 yes yes yes yes passed Result: Membership check for user "grid" in group "oinstall" [as Primary] passed Check: Membership of user "grid" in group "dba" Node Name User Exists Group Exists User in Group Status ---------------- ------------ ------------ ------------ ---------------- rac2 yes yes yes passed rac1 yes yes yes passed Result: Membership check for user "grid" in group "dba" passed Check: Run level Node Name run level Required Status ------------ ------------------------ ------------------------ ---------- rac2 5 3,5 passed rac1 5 3,5 passed Result: Run level check passed Check: Hard limits for "maximum open file descriptors" Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac2 hard 65536 65536 passed rac1 hard 65536 
65536 passed Result: Hard limits check passed for "maximum open file descriptors" Check: Soft limits for "maximum open file descriptors" Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac2 soft 1024 1024 passed rac1 soft 1024 1024 passed Result: Soft limits check passed for "maximum open file descriptors" Check: Hard limits for "maximum user processes" Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac2 hard 16384 16384 passed rac1 hard 16384 16384 passed Result: Hard limits check passed for "maximum user processes" Check: Soft limits for "maximum user processes" Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac2 soft 2047 2047 passed rac1 soft 2047 2047 passed Result: Soft limits check passed for "maximum user processes" Check: System architecture Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 x86_64 x86_64 passed rac1 x86_64 x86_64 passed Result: System architecture check passed Check: Kernel version Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 2.6.39-200.24.1.el6uek.x86_64 2.6.39 passed rac1 2.6.39-200.24.1.el6uek.x86_64 2.6.39 passed Result: Kernel version check passed Check: Kernel parameter for "semmsl" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 250 250 250 passed rac2 250 250 250 passed Result: Kernel parameter check passed for "semmsl" Check: Kernel parameter for "semmns" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 32000 32000 32000 passed rac2 32000 32000 32000 passed Result: Kernel parameter check passed for "semmns" Check: Kernel 
parameter for "semopm" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 100 100 100 passed rac2 100 100 100 passed Result: Kernel parameter check passed for "semopm" Check: Kernel parameter for "semmni" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 128 128 128 passed rac2 128 128 128 passed Result: Kernel parameter check passed for "semmni" Check: Kernel parameter for "shmmax" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 4398046511104 4398046511104 2009235456 passed rac2 4398046511104 4398046511104 2009235456 passed Result: Kernel parameter check passed for "shmmax" Check: Kernel parameter for "shmmni" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 4096 4096 4096 passed rac2 4096 4096 4096 passed Result: Kernel parameter check passed for "shmmni" Check: Kernel parameter for "shmall" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 1073741824 1073741824 392428 passed rac2 1073741824 1073741824 392428 passed Result: Kernel parameter check passed for "shmall" Check: Kernel parameter for "file-max" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 6815744 6815744 6815744 passed rac2 6815744 6815744 6815744 passed Result: Kernel parameter check passed for "file-max" Check: Kernel parameter for "ip_local_port_range" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 between 9000 & 65500 between 9000 & 65500 between 9000 & 65535 passed rac2 
between 9000 & 65500 between 9000 & 65500 between 9000 & 65535 passed Result: Kernel parameter check passed for "ip_local_port_range" Check: Kernel parameter for "rmem_default" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 262144 262144 262144 passed rac2 262144 262144 262144 passed Result: Kernel parameter check passed for "rmem_default" Check: Kernel parameter for "rmem_max" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 4194304 4194304 4194304 passed rac2 4194304 4194304 4194304 passed Result: Kernel parameter check passed for "rmem_max" Check: Kernel parameter for "wmem_default" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 262144 262144 262144 passed rac2 262144 262144 262144 passed Result: Kernel parameter check passed for "wmem_default" Check: Kernel parameter for "wmem_max" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 1048576 1048576 1048576 passed rac2 1048576 1048576 1048576 passed Result: Kernel parameter check passed for "wmem_max" Check: Kernel parameter for "aio-max-nr" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 1048576 1048576 1048576 passed rac2 1048576 1048576 1048576 passed Result: Kernel parameter check passed for "aio-max-nr" Check: Kernel parameter for "panic_on_oops" Node Name Current Configured Required Status Comment ---------------- ------------ ------------ ------------ ------------ ------------ rac1 1 unknown 1 failed (ignorable) Configured value incorrect. rac2 1 unknown 1 failed (ignorable) Configured value incorrect. 
PRVG-1206 : Check cannot be performed for configured value of kernel parameter "panic_on_oops" on node "rac1" PRVG-1206 : Check cannot be performed for configured value of kernel parameter "panic_on_oops" on node "rac2" Result: Kernel parameter check passed for "panic_on_oops" Check: Package existence for "binutils" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 binutils-2.20.51.0.2-5.34.el6 binutils-2.20.51.0.2 passed rac1 binutils-2.20.51.0.2-5.34.el6 binutils-2.20.51.0.2 passed Result: Package existence check passed for "binutils" Check: Package existence for "compat-libcap1" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 compat-libcap1-1.10-1 compat-libcap1-1.10 passed rac1 compat-libcap1-1.10-1 compat-libcap1-1.10 passed Result: Package existence check passed for "compat-libcap1" Check: Package existence for "compat-libstdc++-33(x86_64)" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 compat-libstdc++-33(x86_64)-3.2.3-69.el6 compat-libstdc++-33(x86_64)-3.2.3 passed rac1 compat-libstdc++-33(x86_64)-3.2.3-69.el6 compat-libstdc++-33(x86_64)-3.2.3 passed Result: Package existence check passed for "compat-libstdc++-33(x86_64)" Check: Package existence for "libgcc(x86_64)" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 libgcc(x86_64)-4.4.6-4.el6 libgcc(x86_64)-4.4.4 passed rac1 libgcc(x86_64)-4.4.6-4.el6 libgcc(x86_64)-4.4.4 passed Result: Package existence check passed for "libgcc(x86_64)" Check: Package existence for "libstdc++(x86_64)" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 libstdc++(x86_64)-4.4.6-4.el6 libstdc++(x86_64)-4.4.4 passed rac1 libstdc++(x86_64)-4.4.6-4.el6 libstdc++(x86_64)-4.4.4 passed Result: Package 
existence check passed for "libstdc++(x86_64)" Check: Package existence for "libstdc++-devel(x86_64)" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 libstdc++-devel(x86_64)-4.4.6-4.el6 libstdc++-devel(x86_64)-4.4.4 passed rac1 libstdc++-devel(x86_64)-4.4.6-4.el6 libstdc++-devel(x86_64)-4.4.4 passed Result: Package existence check passed for "libstdc++-devel(x86_64)" Check: Package existence for "sysstat" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 sysstat-9.0.4-20.el6 sysstat-9.0.4 passed rac1 sysstat-9.0.4-20.el6 sysstat-9.0.4 passed Result: Package existence check passed for "sysstat" Check: Package existence for "gcc" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 gcc-4.4.6-4.el6 gcc-4.4.4 passed rac1 gcc-4.4.6-4.el6 gcc-4.4.4 passed Result: Package existence check passed for "gcc" Check: Package existence for "gcc-c++" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 gcc-c++-4.4.6-4.el6 gcc-c++-4.4.4 passed rac1 gcc-c++-4.4.6-4.el6 gcc-c++-4.4.4 passed Result: Package existence check passed for "gcc-c++" Check: Package existence for "ksh" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 ksh ksh passed rac1 ksh ksh passed Result: Package existence check passed for "ksh" Check: Package existence for "make" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 make-3.81-20.el6 make-3.81 passed rac1 make-3.81-20.el6 make-3.81 passed Result: Package existence check passed for "make" Check: Package existence for "glibc(x86_64)" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 glibc(x86_64)-2.12-1.80.el6 
glibc(x86_64)-2.12 passed rac1 glibc(x86_64)-2.12-1.80.el6 glibc(x86_64)-2.12 passed Result: Package existence check passed for "glibc(x86_64)" Check: Package existence for "glibc-devel(x86_64)" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 glibc-devel(x86_64)-2.12-1.80.el6 glibc-devel(x86_64)-2.12 passed rac1 glibc-devel(x86_64)-2.12-1.80.el6 glibc-devel(x86_64)-2.12 passed Result: Package existence check passed for "glibc-devel(x86_64)" Check: Package existence for "libaio(x86_64)" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 libaio(x86_64)-0.3.107-10.el6 libaio(x86_64)-0.3.107 passed rac1 libaio(x86_64)-0.3.107-10.el6 libaio(x86_64)-0.3.107 passed Result: Package existence check passed for "libaio(x86_64)" Check: Package existence for "libaio-devel(x86_64)" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 libaio-devel(x86_64)-0.3.107-10.el6 libaio-devel(x86_64)-0.3.107 passed rac1 libaio-devel(x86_64)-0.3.107-10.el6 libaio-devel(x86_64)-0.3.107 passed Result: Package existence check passed for "libaio-devel(x86_64)" Check: Package existence for "nfs-utils" Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac2 nfs-utils-1.2.3-26.el6 nfs-utils-1.2.3-15 passed rac1 nfs-utils-1.2.3-26.el6 nfs-utils-1.2.3-15 passed Result: Package existence check passed for "nfs-utils" Checking availability of ports "6200,6100" required for component "Oracle Notification Service (ONS)" Node Name Port Number Protocol Available Status ---------------- ------------ ------------ ------------ ---------------- rac2 6200 TCP yes successful rac1 6200 TCP yes successful rac2 6100 TCP yes successful rac1 6100 TCP yes successful Result: Port availability check passed for ports "6200,6100" Checking availability of ports "42424" 
required for component "Oracle Cluster Synchronization Services (CSSD)" Node Name Port Number Protocol Available Status ---------------- ------------ ------------ ------------ ---------------- rac2 42424 TCP yes successful rac1 42424 TCP yes successful Result: Port availability check passed for ports "42424" Checking for multiple users with UID value 0 Result: Check for multiple users with UID value 0 passed Check: Current group ID Result: Current group ID check passed Starting check for consistency of primary group of root user Node Name Status ------------------------------------ ------------------------ rac2 passed rac1 passed Check for consistency of root user's primary group passed Starting Clock synchronization checks using Network Time Protocol(NTP)... Checking existence of NTP configuration file "/etc/ntp.conf" across nodes Node Name File exists? ------------------------------------ ------------------------ rac2 no rac1 no Network Time Protocol(NTP) configuration file not found on any of the nodes. Oracle Cluster Time Synchronization Service(CTSS) can be used instead of NTP for time synchronization on the cluster nodes No NTP Daemons or Services were found to be running Result: Clock synchronization check using Network Time Protocol(NTP) passed Checking Core file name pattern consistency... Core file name pattern consistency check passed. Checking to make sure user "grid" is not in "root" group Node Name Status Comment ------------ ------------------------ ------------------------ rac2 passed does not exist rac1 passed does not exist Result: User "grid" is not part of "root" group. 
Check passed Check default user file creation mask Node Name Available Required Comment ------------ ------------------------ ------------------------ ---------- rac2 0022 0022 passed rac1 0022 0022 passed Result: Default user file creation mask check passed Checking integrity of file "/etc/resolv.conf" across nodes File "/etc/resolv.conf" does not exist on any node of the cluster. Skipping further checks Check for integrity of file "/etc/resolv.conf" passed Check: Time zone consistency Result: Time zone consistency check passed Checking integrity of name service switch configuration file "/etc/nsswitch.conf" ... Checking if "hosts" entry in file "/etc/nsswitch.conf" is consistent across nodes... Checking file "/etc/nsswitch.conf" to make sure that only one "hosts" entry is defined More than one "hosts" entry does not exist in any "/etc/nsswitch.conf" file All nodes have same "hosts" entry defined in file "/etc/nsswitch.conf" Check for integrity of name service switch configuration file "/etc/nsswitch.conf" passed Checking daemon "avahi-daemon" is not configured and running Check: Daemon "avahi-daemon" not configured Node Name Configured Status ------------ ------------------------ ------------------------ rac2 no passed rac1 no passed Daemon not configured check passed for process "avahi-daemon" Check: Daemon "avahi-daemon" not running Node Name Running? Status ------------ ------------------------ ------------------------ rac2 no passed rac1 no passed Daemon not running check passed for process "avahi-daemon" Starting check for /dev/shm mounted as temporary file system ... Check for /dev/shm mounted as temporary file system passed Starting check for /boot mount ... Check for /boot mount passed Starting check for zeroconf check ... Check for zeroconf check passed Pre-check for cluster services setup was unsuccessful on all the nodes. In the above runcluvfy output, below checks are failed. 
1) PRVG-1172 : The IP address "192.168.122.1" is on multiple interfaces "virbr0" on nodes "rac1,rac2"

This error concerns virbr0, a virtualization bridge interface that is unrelated to our RAC IPs, so I am ignoring it.

2) Result: Total memory check failed

Since this is my test server, I am ignoring this, but do not ignore it on an actual production server.

3) PRVG-1206 : Check cannot be performed for configured value of kernel parameter "panic_on_oops" on node "rac1"

PRVG-1206 : Check cannot be performed for configured value of kernel parameter "panic_on_oops" on node "rac2"

The parameter "panic_on_oops" is already set to 1, but the check still reports an error. It is ignorable, and the status is also shown as "failed (ignorable)".
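Because the verbose runcluvfy output is long, it helps to save it to a file and show only the problem lines. The sketch below assumes the output was redirected to a log file; the path /tmp/cluvfy_pre.log, the variable `CLUVFY_LOG`, and the function name `cluvfy_failures` are illustrative choices, not part of the Oracle tooling.

```shell
# cluvfy_failures: reads saved runcluvfy output on stdin and prints only
# lines that report a failure or carry a PRVG-/PRVF- message code.
cluvfy_failures() {
    grep -E 'failed|PRV[GF]-[0-9]+'
}

# Assumed workflow (as the grid user, from the unzipped grid directory):
#   ./runcluvfy.sh stage -pre crsinst -n rac1,rac2 -verbose > /tmp/cluvfy_pre.log 2>&1
log=${CLUVFY_LOG:-/tmp/cluvfy_pre.log}   # hypothetical log location
if [ -r "$log" ]; then
    cluvfy_failures < "$log"
fi
```

The filter also matches lines marked "failed (ignorable)", so each hit still needs the kind of manual judgement described above.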

Log in as the grid user and run "./runInstaller" to start the Grid Infrastructure installation.
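The installer is used here in its graphical mode, so the grid user's session needs an X display before "./runInstaller" can open its windows. The check below is a small sketch; `gui_ready` is a hypothetical helper name, and X forwarding over ssh is only one assumed way of providing a display.

```shell
# gui_ready: succeeds when an X display is configured for this session.
# The graphical installer cannot open its windows without one.
gui_ready() {
    [ -n "${DISPLAY:-}" ]
}

if gui_ready; then
    echo "DISPLAY=$DISPLAY; start the installer with: ./runInstaller"
else
    echo "DISPLAY is not set; log in with X forwarding (for example ssh -X) first"
fi
```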

Select the first option, "Install and Configure Oracle Grid Infrastructure for a Cluster". Each option is described below.

1) Install and Configure Oracle Grid Infrastructure for a Cluster:

Select this option to install and configure a fresh Oracle RAC setup.

2) Install and Configure Oracle Grid Infrastructure for a Standalone Server:

Select this option to install Oracle Grid Infrastructure for a standalone server, that is, a single-instance database with Oracle Restart.

3) Upgrade Oracle Grid Infrastructure for a Cluster:

Select this option to upgrade an existing Oracle Clusterware and ASM installation.

4) Install Oracle Grid Infrastructure Software Only:

Select this option to install only the software binaries for Oracle Clusterware or Oracle Restart and Automatic Storage Management on the local node, without configuring them during installation.

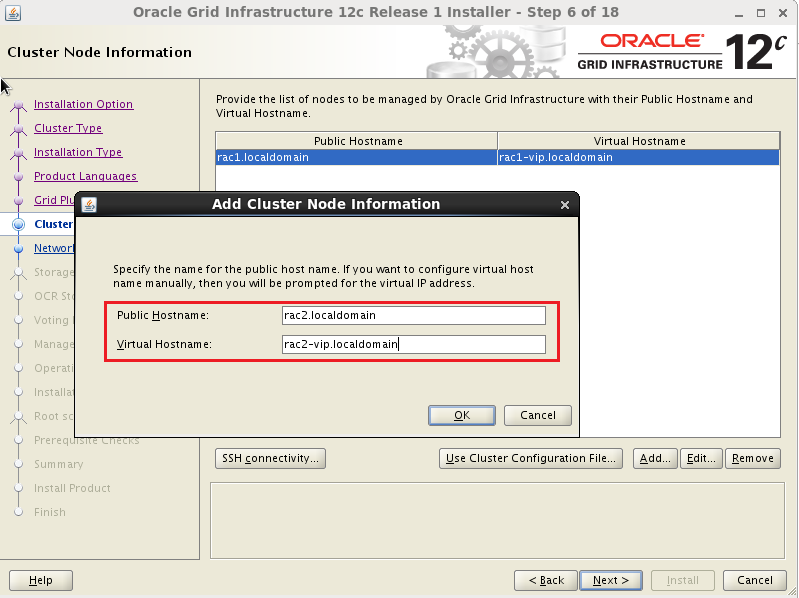

In the screenshot below, change the SCAN name and port. You can look up the SCAN name in your /etc/hosts file.

By default, the installer captures only the local node's details. Add the details of the remaining cluster members, referring to your /etc/hosts file.
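Since the installer screens take their names from /etc/hosts, it can be worth checking that every name you plan to type is actually defined there. The sketch below is one way to do that; `hosts_missing` is a hypothetical helper, and only rac1 and rac2 come from this article (VIP and SCAN names are site-specific and must be added by you).

```shell
# hosts_missing FILE NAME...
# Prints each NAME that does not appear as a host name or alias in FILE
# (comment lines and trailing comments are ignored).
# Prints nothing when every name is present.
hosts_missing() {
    file=$1; shift
    for name in "$@"; do
        awk -v n="$name" '
            /^[ \t]*#/ { next }
            {
                for (i = 2; i <= NF; i++) {
                    if ($i ~ /^#/) break
                    if ($i == n) found = 1
                }
            }
            END { exit !found }
        ' "$file" || echo "$name"
    done
}

# rac1 and rac2 are the node names used in this article; append your own
# VIP and SCAN names to the list.
hosts_missing /etc/hosts rac1 rac2
```

Run it on every node: an empty result means all listed names are defined in that node's /etc/hosts.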

On the screen below, locate your logical OCR disks. We created three OCR disks for NORMAL redundancy. The disks are located under "/dev/oracleasm/disks/", so you have to change the discovery path accordingly.

As the screenshot below shows, all OCR disks are visible after changing the discovery path.
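Before changing the discovery path in the installer, you can confirm from a terminal that the ASMLib disks really exist under that directory. This is a minimal sketch; `count_asm_disks` is a hypothetical helper, and the `/dev/oracleasm/disks/*` pattern in the message is the commonly used form of the discovery string, stated here as an assumption.

```shell
# count_asm_disks [DIR]
# Prints how many entries exist in the ASMLib disk directory.
# DIR defaults to the discovery location used in this article.
count_asm_disks() {
    dir=${1:-/dev/oracleasm/disks}
    [ -d "$dir" ] || { echo 0; return 0; }
    ls -1 "$dir" | wc -l | tr -d ' '
}

found=$(count_asm_disks)
if [ "$found" -ge 3 ]; then
    echo "$found disks under /dev/oracleasm/disks; try /dev/oracleasm/disks/* as the discovery path"
else
    echo "only $found disk(s) found; three were created for the NORMAL redundancy disk group"
fi
```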

Here, specify the correct GRID_HOME path.

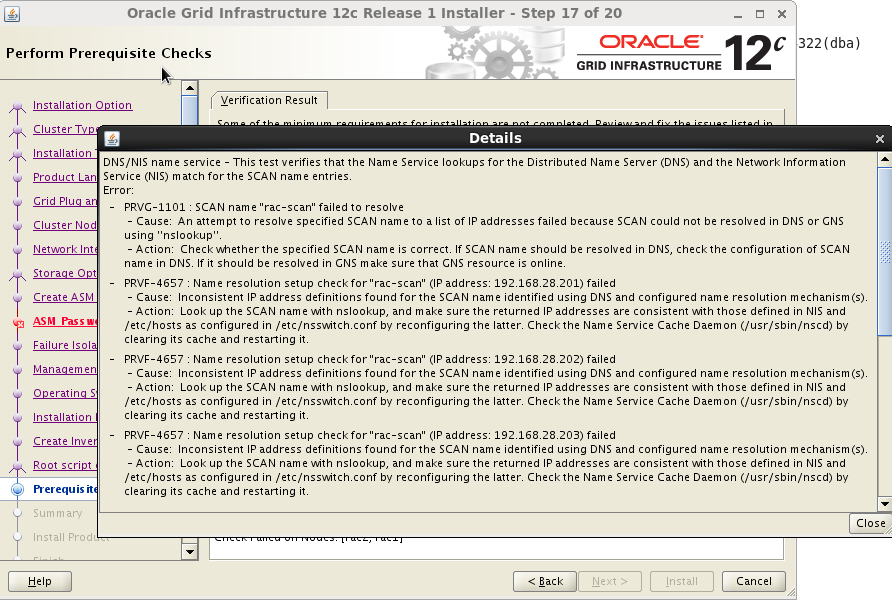

On the prerequisite check screen, you can see that the checks below have failed.

- Physical Memory

I am ignoring this since this is my test server, but do not ignore it on an actual production server.

- panic_on_oops

This parameter is already set to 1, so the failure is ignorable.

- Device checks for ASM

I am ignoring this since the disk checks are fine.

- DNS/NIS name service

I am ignoring this since we are not using DNS and all IPs are defined statically in the /etc/hosts file.
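For the panic_on_oops item in the list above, runcluvfy reported a current value of 1 but a configured value of "unknown". One plausible reading, which is an assumption and not something confirmed here, is that the value is active in the running kernel but not written to /etc/sysctl.conf. The sketch below compares the two; `sysctl_configured` is a hypothetical helper name.

```shell
# sysctl_configured KEY [FILE]
# Prints the value assigned to KEY in FILE (default /etc/sysctl.conf),
# or nothing if the key is not set there. Comment lines are ignored.
sysctl_configured() {
    key=$1
    file=${2:-/etc/sysctl.conf}
    [ -r "$file" ] || return 0
    awk -F= -v k="$key" '
        /^[ \t]*[#;]/ { next }
        {
            name = $1; value = $2
            gsub(/[ \t]/, "", name)
            gsub(/^[ \t]+|[ \t]+$/, "", value)
            if (name == k) last = value
        }
        END { if (last != "") print last }
    ' "$file"
}

# Value in the running kernel versus the value persisted in the file.
running=$(cat /proc/sys/kernel/panic_on_oops 2>/dev/null)
saved=$(sysctl_configured kernel.panic_on_oops)
echo "running=${running:-unknown} configured=${saved:-unknown}"
```

If the second value comes back as "unknown", adding the setting to /etc/sysctl.conf may clear the warning, but treat that as something to verify on your own system.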

Click "Ignore All", then click NEXT to continue.

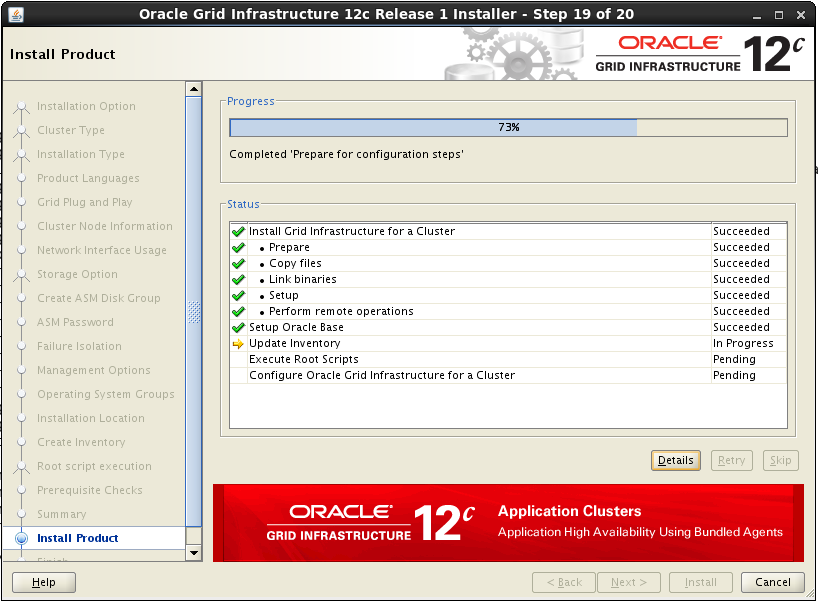

During installation, a pop-up box appears. Open a new terminal as the root user and execute the scripts "orainstRoot.sh" and "root.sh" on all the nodes, one node at a time.
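The order of the root scripts can be written down as a simple plan before you start. The sketch below only prints the commands in sequence and runs nothing; `root_script_plan` is a hypothetical helper, and the two paths are the ones used in this article, so adjust them to your own inventory and Grid home.

```shell
# root_script_plan NODE...
# Prints, in order, the commands to run as root: orainstRoot.sh on every
# node first, then root.sh on every node, one node at a time.
# This function only prints the plan; it does not execute anything.
root_script_plan() {
    for script in /u01/app/oraInventory/orainstRoot.sh /u01/app/12.1.0.2/grid/root.sh; do
        for node in "$@"; do
            echo "[root@$node] $script"
        done
    done
}

root_script_plan rac1 rac2
```

Let each script finish on one node before starting it on the next; root.sh in particular should complete on the first node before it is run anywhere else.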

#Execute /u01/app/oraInventory/orainstRoot.sh

Node1:

    [root@rac1 ~]# id
    uid=0(root) gid=0(root) groups=0(root),492(sfcb)
    [root@rac1 ~]# /u01/app/oraInventory/orainstRoot.sh
    Changing permissions of /u01/app/oraInventory.
    Adding read,write permissions for group.
    Removing read,write,execute permissions for world.
    Changing groupname of /u01/app/oraInventory to oinstall.
    The execution of the script is complete.

Node2:

    [root@rac2 ~]# id
    uid=0(root) gid=0(root) groups=0(root),492(sfcb)
    [root@rac2 ~]# /u01/app/oraInventory/orainstRoot.sh
    Changing permissions of /u01/app/oraInventory.
    Adding read,write permissions for group.
    Removing read,write,execute permissions for world.
    Changing groupname of /u01/app/oraInventory to oinstall.
    The execution of the script is complete.

Execute /u01/app/12.1.0.2/grid/root.sh

Node1:

    [root@rac1 ~]# id
    uid=0(root) gid=0(root) groups=0(root),492(sfcb)
    [root@rac1 ~]# /u01/app/12.1.0.2/grid/root.sh
    Performing root user operation.
    The following environment variables are set as:
        ORACLE_OWNER= grid
        ORACLE_HOME= /u01/app/12.1.0.2/grid
    Enter the full pathname of the local bin directory: [/usr/local/bin]:
       Copying dbhome to /usr/local/bin ...
       Copying oraenv to /usr/local/bin ...
       Copying coraenv to /usr/local/bin ...
    Creating /etc/oratab file...
    Entries will be added to the /etc/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root script.
    Now product-specific root actions will be performed.
    Using configuration parameter file: /u01/app/12.1.0.2/grid/crs/install/crsconfig_params
    2024/02/01 16:36:16 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.
    2024/02/01 16:36:44 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.
    2024/02/01 16:36:45 CLSRSC-363: User ignored prerequisites during installation
    OLR initialization - successful
      root wallet
      root wallet cert
      root cert export
      peer wallet
      profile reader wallet
      pa wallet
      peer wallet keys
      pa wallet keys
      peer cert request
      pa cert request
      peer cert
      pa cert
      peer root cert TP
      profile reader root cert TP
      pa root cert TP
      peer pa cert TP
      pa peer cert TP
      profile reader pa cert TP
      profile reader peer cert TP
      peer user cert
      pa user cert
    2024/02/01 16:37:24 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.conf'
    CRS-4133: Oracle High Availability Services has been stopped.
    CRS-4123: Oracle High Availability Services has been started.
    CRS-4133: Oracle High Availability Services has been stopped.
    CRS-4123: Oracle High Availability Services has been started.
    CRS-2672: Attempting to start 'ora.evmd' on 'rac1'
    CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'
    CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded
    CRS-2676: Start of 'ora.evmd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'
    CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'
    CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'
    CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded
    CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.cssd' on 'rac1'
    CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'
    CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded
    CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded
    ASM created and started successfully.
    Disk Group DG_OCR created successfully.
    CRS-2672: Attempting to start 'ora.crf' on 'rac1'
    CRS-2672: Attempting to start 'ora.storage' on 'rac1'
    CRS-2676: Start of 'ora.storage' on 'rac1' succeeded
    CRS-2676: Start of 'ora.crf' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.crsd' on 'rac1'
    CRS-2676: Start of 'ora.crsd' on 'rac1' succeeded
    CRS-4256: Updating the profile
    Successful addition of voting disk 974f0d4a4a574f4cbf5885d0e9832530.
    Successful addition of voting disk db02b518d4a34fdfbfcb3dda0738656b.
    Successful addition of voting disk a62c79972e2a4febbf13a3957e515bfc.
    Successfully replaced voting disk group with +DG_OCR.
    CRS-4256: Updating the profile
    CRS-4266: Voting file(s) successfully replaced
    ##  STATE    File Universal Id                File Name Disk group
    --  -----    -----------------                --------- ---------
     1. ONLINE   974f0d4a4a574f4cbf5885d0e9832530 (/dev/oracleasm/disks/OCRDISK1) [DG_OCR]
     2. ONLINE   db02b518d4a34fdfbfcb3dda0738656b (/dev/oracleasm/disks/OCRDISK2) [DG_OCR]
     3. ONLINE   a62c79972e2a4febbf13a3957e515bfc (/dev/oracleasm/disks/OCRDISK3) [DG_OCR]
    Located 3 voting disk(s).
    CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac1'
    CRS-2673: Attempting to stop 'ora.crsd' on 'rac1'
    CRS-2677: Stop of 'ora.crsd' on 'rac1' succeeded
    CRS-2673: Attempting to stop 'ora.storage' on 'rac1'
    CRS-2673: Attempting to stop 'ora.mdnsd' on 'rac1'
    CRS-2673: Attempting to stop 'ora.gpnpd' on 'rac1'
    CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rac1'
    CRS-2677: Stop of 'ora.storage' on 'rac1' succeeded
    CRS-2677: Stop of 'ora.drivers.acfs' on 'rac1' succeeded
    CRS-2673: Attempting to stop 'ora.crf' on 'rac1'
    CRS-2673: Attempting to stop 'ora.ctssd' on 'rac1'
    CRS-2673: Attempting to stop 'ora.evmd' on 'rac1'
    CRS-2673: Attempting to stop 'ora.asm' on 'rac1'
    CRS-2677: Stop of 'ora.crf' on 'rac1' succeeded
    CRS-2677: Stop of 'ora.ctssd' on 'rac1' succeeded
    CRS-2677: Stop of 'ora.evmd' on 'rac1' succeeded
    CRS-2677: Stop of 'ora.gpnpd' on 'rac1' succeeded
    CRS-2677: Stop of 'ora.mdnsd' on 'rac1' succeeded
    CRS-2677: Stop of 'ora.asm' on 'rac1' succeeded
    CRS-2673: Attempting to stop 'ora.cluster_interconnect.haip' on 'rac1'
    CRS-2677: Stop of 'ora.cluster_interconnect.haip' on 'rac1' succeeded
    CRS-2673: Attempting to stop 'ora.cssd' on 'rac1'
    CRS-2677: Stop of 'ora.cssd' on 'rac1' succeeded
    CRS-2673: Attempting to stop 'ora.gipcd' on 'rac1'
    CRS-2677: Stop of 'ora.gipcd' on 'rac1' succeeded
    CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac1' has completed
    CRS-4133: Oracle High Availability Services has been stopped.
    CRS-4123: Starting Oracle High Availability Services-managed resources
    CRS-2672: Attempting to start 'ora.mdnsd' on 'rac1'
    CRS-2672: Attempting to start 'ora.evmd' on 'rac1'
    CRS-2676: Start of 'ora.mdnsd' on 'rac1' succeeded
    CRS-2676: Start of 'ora.evmd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.gpnpd' on 'rac1'
    CRS-2676: Start of 'ora.gpnpd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.gipcd' on 'rac1'
    CRS-2676: Start of 'ora.gipcd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac1'
    CRS-2676: Start of 'ora.cssdmonitor' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.cssd' on 'rac1'
    CRS-2672: Attempting to start 'ora.diskmon' on 'rac1'
    CRS-2676: Start of 'ora.diskmon' on 'rac1' succeeded
    CRS-2676: Start of 'ora.cssd' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac1'
    CRS-2672: Attempting to start 'ora.ctssd' on 'rac1'
    CRS-2676: Start of 'ora.ctssd' on 'rac1' succeeded
    CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'rac1'
    CRS-2676: Start of 'ora.asm' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.storage' on 'rac1'
    CRS-2676: Start of 'ora.storage' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.crf' on 'rac1'
    CRS-2676: Start of 'ora.crf' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.crsd' on 'rac1'
    CRS-2676: Start of 'ora.crsd' on 'rac1' succeeded
    CRS-6023: Starting Oracle Cluster Ready Services-managed resources
    CRS-6017: Processing resource auto-start for servers: rac1
    CRS-6016: Resource auto-start has completed for server rac1
    CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources
    CRS-4123: Oracle High Availability Services has been started.
    2024/02/01 16:44:22 CLSRSC-343: Successfully started Oracle Clusterware stack
    CRS-2672: Attempting to start 'ora.asm' on 'rac1'
    CRS-2676: Start of 'ora.asm' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.DG_OCR.dg' on 'rac1'
    CRS-2676: Start of 'ora.DG_OCR.dg' on 'rac1' succeeded
    2024/02/01 16:46:15 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Node2:

    [root@rac2 ~]# id
    uid=0(root) gid=0(root) groups=0(root),492(sfcb)
    [root@rac2 ~]# /u01/app/12.1.0.2/grid/root.sh
    Performing root user operation.
    The following environment variables are set as:
        ORACLE_OWNER= grid
        ORACLE_HOME= /u01/app/12.1.0.2/grid
    Enter the full pathname of the local bin directory: [/usr/local/bin]:
       Copying dbhome to /usr/local/bin ...
       Copying oraenv to /usr/local/bin ...
       Copying coraenv to /usr/local/bin ...
    Creating /etc/oratab file...
    Entries will be added to the /etc/oratab file as needed by
    Database Configuration Assistant when a database is created
    Finished running generic part of root script.
    Now product-specific root actions will be performed.
    Using configuration parameter file: /u01/app/12.1.0.2/grid/crs/install/crsconfig_params
    2024/02/01 16:47:07 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.
    2024/02/01 16:47:33 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.
    2024/02/01 16:47:36 CLSRSC-363: User ignored prerequisites during installation
    OLR initialization - successful
    2024/02/01 16:48:57 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.conf'
    CRS-4133: Oracle High Availability Services has been stopped.
    CRS-4123: Oracle High Availability Services has been started.
    CRS-4133: Oracle High Availability Services has been stopped.
    CRS-4123: Oracle High Availability Services has been started.
    CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'rac2'
    CRS-2673: Attempting to stop 'ora.drivers.acfs' on 'rac2'
    CRS-2677: Stop of 'ora.drivers.acfs' on 'rac2' succeeded
    CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'rac2' has completed
    CRS-4133: Oracle High Availability Services has been stopped.
    CRS-4123: Starting Oracle High Availability Services-managed resources
    CRS-2672: Attempting to start 'ora.mdnsd' on 'rac2'
    CRS-2672: Attempting to start 'ora.evmd' on 'rac2'
    CRS-2676: Start of 'ora.mdnsd' on 'rac2' succeeded
    CRS-2676: Start of 'ora.evmd' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.gpnpd' on 'rac2'
    CRS-2676: Start of 'ora.gpnpd' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.gipcd' on 'rac2'
    CRS-2676: Start of 'ora.gipcd' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.cssdmonitor' on 'rac2'
    CRS-2676: Start of 'ora.cssdmonitor' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.cssd' on 'rac2'
    CRS-2672: Attempting to start 'ora.diskmon' on 'rac2'
    CRS-2676: Start of 'ora.diskmon' on 'rac2' succeeded
    CRS-2676: Start of 'ora.cssd' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.cluster_interconnect.haip' on 'rac2'
    CRS-2672: Attempting to start 'ora.ctssd' on 'rac2'
    CRS-2676: Start of 'ora.ctssd' on 'rac2' succeeded
    CRS-2676: Start of 'ora.cluster_interconnect.haip' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'rac2'
    CRS-2676: Start of 'ora.asm' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.storage' on 'rac2'
    CRS-2676: Start of 'ora.storage' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.crf' on 'rac2'
    CRS-2676: Start of 'ora.crf' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.crsd' on 'rac2'
    CRS-2676: Start of 'ora.crsd' on 'rac2' succeeded
    CRS-6017: Processing resource auto-start for servers: rac2
    CRS-2673: Attempting to stop 'ora.LISTENER_SCAN1.lsnr' on 'rac1'
    CRS-2672: Attempting to start 'ora.net1.network' on 'rac2'
    CRS-2677: Stop of 'ora.LISTENER_SCAN1.lsnr' on 'rac1' succeeded
    CRS-2676: Start of 'ora.net1.network' on 'rac2' succeeded
    CRS-2673: Attempting to stop 'ora.scan1.vip' on 'rac1'
    CRS-2672: Attempting to start 'ora.ons' on 'rac2'
    CRS-2677: Stop of 'ora.scan1.vip' on 'rac1' succeeded
    CRS-2672: Attempting to start 'ora.scan1.vip' on 'rac2'
    CRS-2676: Start of 'ora.scan1.vip' on 'rac2' succeeded
    CRS-2672: Attempting to start 'ora.LISTENER_SCAN1.lsnr' on 'rac2'
    CRS-2676: Start of 'ora.ons' on 'rac2' succeeded
    CRS-2676: Start of 'ora.LISTENER_SCAN1.lsnr' on 'rac2' succeeded
    CRS-6016: Resource auto-start has completed for server rac2
    CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources
    CRS-4123: Oracle High Availability Services has been started.
    2024/02/01 16:53:24 CLSRSC-343: Successfully started Oracle Clusterware stack
    2024/02/01 16:53:39 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
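The root.sh output is long, and the line that matters most is the final CLSRSC-325 message on each node. As a rough sketch, the check below looks for that message in a saved copy of the output. It assumes you captured the output yourself, for example by piping root.sh through `tee` into a file of your choosing; the function name `root_sh_succeeded` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if a saved copy of the root.sh output
# contains the final CLSRSC-325 "... succeeded" message.
root_sh_succeeded() {
    local captured_output="$1"
    grep -q 'CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster \.\.\. succeeded' "$captured_output"
}
```

The function returns a zero exit status on success, so it can be used as `root_sh_succeeded saved_output.txt && echo OK`. Once both nodes report success, commands such as `crsctl check cluster -all` and `olsnodes -n`, run from the Grid home's bin directory, are a common way to confirm that the stack is up on every node.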

At this stage, the installer takes some time to create the GIMR (Grid Infrastructure Management Repository). Meanwhile, you can check the installation log file for failures and ORA- messages.

[root@rac1 cfgtoollogs]# cat /u01/app/oraInventory/logs/installActions2024-02-01_03-49-42PM.log | grep -i fail

INFO: PRVG-1101 : SCAN name "rac-scan" failed to resolve

INFO: PRVF-4657 : Name resolution setup check for "rac-scan" (IP address: 192.168.28.201) failed

INFO: PRVF-4657 : Name resolution setup check for "rac-scan" (IP address: 192.168.28.202) failed

INFO: PRVF-4657 : Name resolution setup check for "rac-scan" (IP address: 192.168.28.203) failed

INFO: Verification of SCAN VIP and listener setup failed
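Grepping for "fail" shows the raw lines. When a log is long, a per-code count can be easier to read. The following is a minimal sketch under that assumption; `summarize_log_codes` is a hypothetical name, and the three prefixes it searches for (ORA, PRVF, PRVG) are simply the ones mentioned in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count occurrences of each ORA-/PRVF-/PRVG- code
# in an installer log and print "CODE COUNT", most frequent first.
summarize_log_codes() {
    local log_file="$1"
    grep -oE '(ORA|PRVF|PRVG)-[0-9]+' "$log_file" \
        | sort | uniq -c | sort -rn \
        | awk '{ print $2, $1 }'
}
```

It takes the path of the installActions log shown above as its only argument. A log with no matching codes produces no output.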

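These SCAN name-resolution failures are expected in this setup because "rac-scan" is defined in /etc/hosts rather than in DNS. Click on Skip to continue and finish the installation.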