Environment Details:

Note: The two cluster nodes are referred to as rac1 and rac2 throughout; the actual hostnames have been removed for security reasons.

An Oracle Grid Infrastructure upgrade does not require downtime when you perform it in a rolling fashion. Oracle Grid Infrastructure can be upgraded in the following ways:

1) Rolling Upgrade: This involves upgrading individual nodes without stopping Oracle Grid Infrastructure on the other nodes in the cluster.
2) Non-rolling Upgrade: This involves bringing down all the nodes except one. A complete cluster outage occurs while the root script stops the old Oracle Clusterware stack and starts the new Oracle Clusterware stack on the node where you initiate the upgrade. After the upgrade is completed, the new Oracle Clusterware is started on all the nodes.
3) Upgrade the DR Server first: If a DR server is configured, you can upgrade Grid Infrastructure there first. Once the DR cluster is upgraded, perform a switchover so that the DR site acts as primary and serves the application, then upgrade the cluster on the old primary DB server, which is now acting as DR.

Note that some services are disabled when one or more nodes are in the process of being upgraded. All upgrades are out-of-place upgrades, meaning that the software binaries are placed in a different Grid home from the Grid home used for the prior release.

What is an in-place upgrade? The existing home itself is upgraded.

What is an out-of-place upgrade? Separate binaries are installed in a separate location, and that new home is the one that gets upgraded.

Below are the supported paths for Oracle Grid Infrastructure 19c Upgrade:

- Oracle Grid Infrastructure upgrade from 11.2.0.4 to Oracle Grid Infrastructure 19c
- Oracle Grid Infrastructure upgrade from 12.1.0.2 to Oracle Grid Infrastructure 19c
- Oracle Grid Infrastructure upgrade from 12.2.0.1 to Oracle Grid Infrastructure 19c
- Oracle Grid Infrastructure upgrade from 18c to Oracle Grid Infrastructure 19c
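The supported-path list above can be turned into a quick guard for a pre-upgrade script. This is only a minimal sketch: the function name `is_supported_source` is hypothetical, and the version patterns simply mirror the four source releases listed above rather than any official Oracle check.

```shell
#!/bin/sh
# Hypothetical helper: returns 0 when the given Clusterware version matches one
# of the direct-upgrade source releases listed above, 1 otherwise.
is_supported_source() {
    case "$1" in
        11.2.0.4*|12.1.0.2*|12.2.0.1*|18.*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example: the source cluster in this walkthrough reports 12.2.0.1.0.
if is_supported_source "12.2.0.1.0"; then
    echo "12.2.0.1.0: listed as a supported source for a 19c upgrade"
else
    echo "12.2.0.1.0: not in the supported list"
fi
```

In practice the argument would come from `crsctl query crs activeversion` on the source cluster.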

Points to be checked before starting Oracle GRID Infrastructure upgrade:


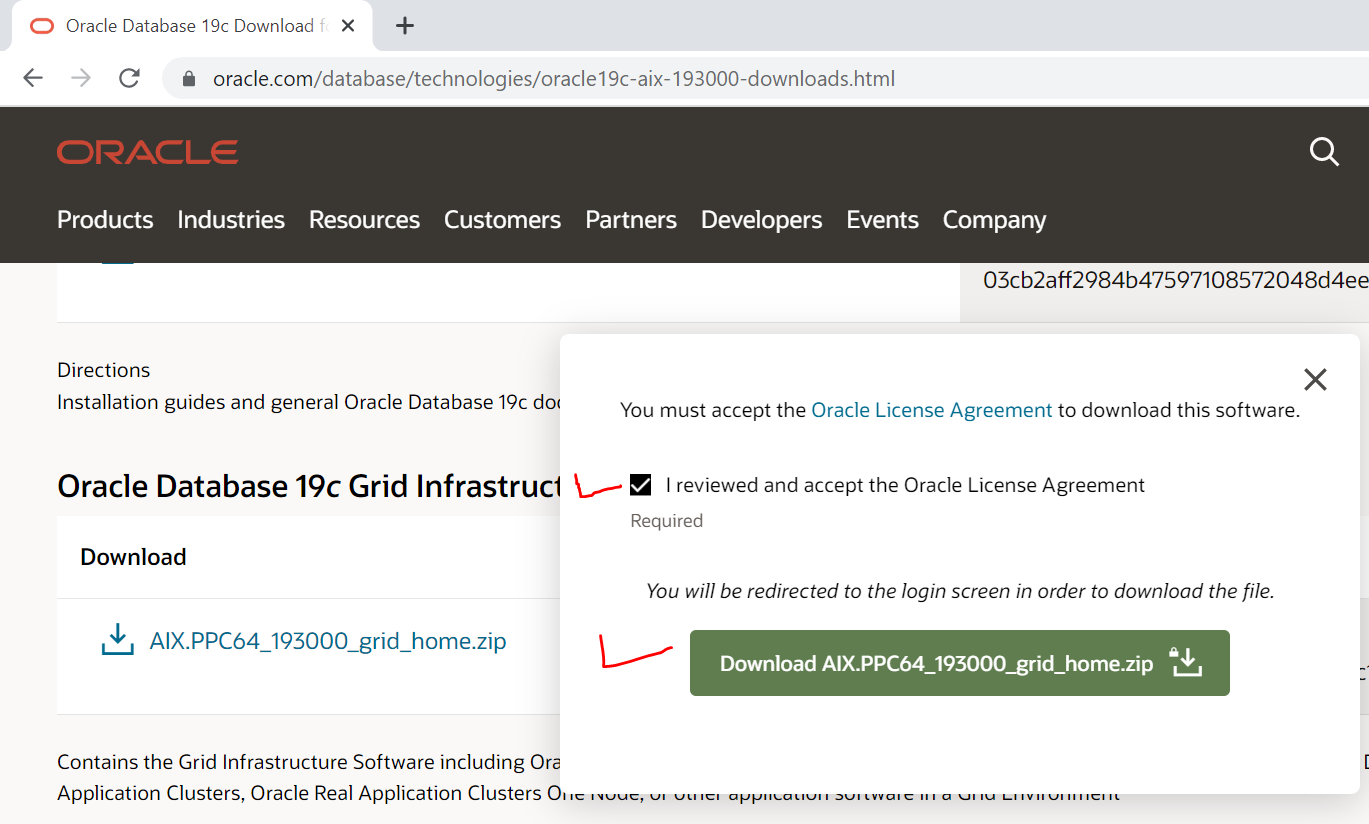

Step 1: Download the Oracle Grid Infrastructure 19c software from the Oracle site, along with the latest recommended patches and the latest OPatch release. Transfer the software and patches to the target server.

Step 2: Create the directories below for the Oracle Grid 19c home, then unzip the Grid software, the patches, and the OPatch archive.

    #mkdir -p /grid19c/app/19.3.0.0/grid
    #chmod -R 775 /grid19c/app/19.3.0.0/grid
    #chown -R grid:oinstall /grid19c/app/19.3.0.0/grid

Patch location: /grid19c/patch_19cRU/32545008

    #chmod -R 775 /grid19c/patch_19cRU/32545008
    #chown -R grid:oinstall /grid19c/patch_19cRU/32545008

Check the OPatch version shipped with the new home, move the old OPatch directory aside, and unzip the newer OPatch in its place:

    {rac1} /grid19c/app/19.3.0.0/grid/OPatch $ ./opatch version
    OPatch Version: 12.2.0.1.17
    OPatch succeeded.
    {rac1} /grid19c/app/19.3.0.0/grid $ mv OPatch OPatch_150921
    {rac1} /grid19c/app/19.3.0.0/grid $ cp /grid19c/patch_19cRU/p6880880_190000_AIX64-5L.zip .
    {rac1} /grid19c/app/19.3.0.0/grid $ unzip p6880880_190000_AIX64-5L.zip
    Archive: p6880880_190000_AIX64-5L.zip
    creating: OPatch/
    inflating: OPatch/README.txt
    inflating: OPatch/datapatch
    inflating: OPatch/emdpatch.pl
    ...
    inflating: OPatch/modules/com.oracle.glcm.patch.opatch-common-api-schema_13.9.5.0.jar
    inflating: OPatch/modules/com.sun.xml.bind.jaxb-xjc.jar
    inflating: OPatch/modules/com.oracle.glcm.patch.opatch-common-api-interfaces_13.9.5.0.jar
    {rac1} /grid19c/app/19.3.0.0/grid $ cd OPatch
    {rac1} /grid19c/app/19.3.0.0/grid/OPatch $ ./opatch version
    OPatch Version: 12.2.0.1.27
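The OPatch replacement in Step 2 exists because a Release Update needs a sufficiently recent OPatch. As a rough sketch, a dotted-version comparison like the one below could confirm that before starting. The function `version_ge` and the `required` value are assumptions made for illustration; the real minimum OPatch version should be taken from the README of the patch being applied.

```shell
#!/bin/sh
# version_ge A B: succeeds when dotted version A is greater than or equal to B.
# Fields are compared numerically from left to right; missing fields count as 0.
version_ge() {
    a=$1 b=$2
    while [ -n "$a" ] || [ -n "$b" ]; do
        fa=${a%%.*}; fb=${b%%.*}
        [ "${fa:-0}" -gt "${fb:-0}" ] && return 0
        [ "${fa:-0}" -lt "${fb:-0}" ] && return 1
        # Drop the field just compared; with no dot left the string is exhausted.
        case "$a" in *.*) a=${a#*.} ;; *) a= ;; esac
        case "$b" in *.*) b=${b#*.} ;; *) b= ;; esac
    done
    return 0
}

required="12.2.0.1.27"    # placeholder: read the real minimum from the RU README
installed="12.2.0.1.17"   # version reported by the OPatch bundled with the base home
if version_ge "$installed" "$required"; then
    echo "OPatch $installed is new enough"
else
    echo "OPatch $installed is older than $required; replace it before patching"
fi
```

With the two versions seen in this walkthrough, the bundled 12.2.0.1.17 compares as older than 12.2.0.1.27, which is why the directory was swapped.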

Step 3: Check the existing CRS details from the current (source) Grid home as shown below.

Node1:

    {rac1} /grid/app/12.2.0.1/grid_home $ kfod op=patches
    ---------------
    List of Patches
    ===============
    26839277
    31802727
    32231681
    32253903
    {rac1} /grid/app/12.2.0.1/grid_home $ crsctl query crs softwareversion
    Oracle Clusterware version on node [rac1] is [12.2.0.1.0]
    {rac1} /grid/app/12.2.0.1/grid_home $ crsctl query crs activeversion -f
    Oracle Clusterware active version on the cluster is [12.2.0.1.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [1419328588].
    {rac1} /grid/app/12.2.0.1/grid_home $ crsctl query crs releaseversion
    Oracle High Availability Services release version on the local node is [12.2.0.1.0]
    {rac1} /grid/app/12.2.0.1/grid_home $ crsctl query crs softwarepatch
    Oracle Clusterware patch level on node rac1 is [1419328588].
    OPatch succeeded.

Node2:

    {rac2} /grid/app/12.2.0.1/grid_home $ kfod op=patches
    ---------------
    List of Patches
    ===============
    26839277
    31802727
    32231681
    32253903
    32507738
    {rac2} /grid/app/12.2.0.1/grid_home $ crsctl query crs softwareversion
    Oracle Clusterware version on node [rac2] is [12.2.0.1.0]
    {rac2} /grid/app/12.2.0.1/grid_home $ crsctl query crs activeversion -f
    Oracle Clusterware active version on the cluster is [12.2.0.1.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [1419328588].
    {rac2} /grid/app/12.2.0.1/grid_home $ crsctl query crs releaseversion
    Oracle High Availability Services release version on the local node is [12.2.0.1.0]
    {rac2} /grid/app/12.2.0.1/grid_home $ crsctl query crs softwarepatch
    Oracle Clusterware patch level on node rac2 is [1419328588].
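The two `kfod op=patches` listings in Step 3 are easy to compare by eye on a two-node cluster, but a small diff helps on larger ones. The sketch below is illustrative only: `patch_diff` is a hypothetical helper, and the patch numbers are pasted in from the listings above rather than collected live from each node.

```shell
#!/bin/sh
# patch_diff "LIST1" "LIST2": print patch numbers that appear in only one of
# two space-separated lists, labelled by the node they were found on.
patch_diff() {
    tmp1=$(mktemp); tmp2=$(mktemp)
    # Unquoted on purpose: split each list into one patch number per line.
    printf '%s\n' $1 | sort > "$tmp1"
    printf '%s\n' $2 | sort > "$tmp2"
    comm -23 "$tmp1" "$tmp2" | sed 's/^/only on node 1: /'
    comm -13 "$tmp1" "$tmp2" | sed 's/^/only on node 2: /'
    rm -f "$tmp1" "$tmp2"
}

# Patch numbers copied from the kfod output for rac1 and rac2.
node1="26839277 31802727 32231681 32253903"
node2="26839277 31802727 32231681 32253903 32507738"
patch_diff "$node1" "$node2"
```

For the listings in this post it prints `only on node 2: 32507738`, a difference worth reviewing before the upgrade even though both nodes report the same patch level.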

Step 4: Run runcluvfy.sh to check whether the current (source) CRS can be upgraded to 19c. The /etc/hosts entries for this cluster are:

    $cat /etc/hosts
    #public IP
    10.20.30.101 rac1.localdomain rac1
    10.20.30.102 rac2.localdomain rac2
    #Virtual IP
    10.20.30.103 rac1-vip.localdomain rac1-vip
    10.20.30.104 rac2-vip.localdomain rac2-vip
    #private ip
    10.1.2.201 rac1-priv.localdomain rac1-priv
    10.1.2.202 rac2-priv.localdomain rac2-priv
    #scan ip
    10.20.30.105 rac-scan.localdomain rac-scan
    10.20.30.106 rac-scan.localdomain rac-scan
    10.20.30.107 rac-scan.localdomain rac-scan

Execute the cluvfy command below to check the upgrade prerequisites.

    {rac1} /grid19c/app/19.3.0.0/grid $ ./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /grid/app/12.2.0.1/grid_home -dest_crshome /grid19c/app/19.3.0.0/grid -dest_version 19.0.0.0.0 -fixup -verbose

Verifying Physical Memory ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 256GB (2.68435456E8KB) 8GB (8388608.0KB) passed rac2 256GB (2.68435456E8KB) 8GB (8388608.0KB) passed Verifying Physical Memory ...PASSED Verifying Available Physical Memory ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 191.0375GB (2.00317388E8KB) 250MB (256000.0KB) passed rac2 197.478GB (2.0707072E8KB) 250MB (256000.0KB) passed Verifying Available Physical Memory ...PASSED Verifying Swap Size ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 32GB (3.3554432E7KB) 16GB (1.6777216E7KB) passed rac2 32GB (3.3554432E7KB) 16GB (1.6777216E7KB) passed Verifying Swap Size ...PASSED Verifying Free Space: rac1:/usr ... Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /usr rac1 /usr 11.297GB 25MB passed Verifying Free Space: rac1:/usr ...PASSED Verifying Free Space: rac1:/var ... 
Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /var rac1 /var 14.3679GB 5MB passed Verifying Free Space: rac1:/var ...PASSED Verifying Free Space: rac1:/etc,rac1:/sbin ... Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /etc rac1 / 14.6253GB 25MB passed /sbin rac1 / 14.6253GB 10MB passed Verifying Free Space: rac1:/etc,rac1:/sbin ...PASSED Verifying Free Space: rac1:/tmp ... Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /tmp rac1 /tmp 9.3977GB 5GB passed Verifying Free Space: rac1:/tmp ...PASSED Verifying Free Space: rac2:/usr ... Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /usr rac2 /usr 11.297GB 25MB passed Verifying Free Space: rac2:/usr ...PASSED Verifying Free Space: rac2:/var ... Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /var rac2 /var 14.3861GB 5MB passed Verifying Free Space: rac2:/var ...PASSED Verifying Free Space: rac2:/etc,rac2:/sbin ... Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /etc rac2 / 645.082MB 25MB passed /sbin rac2 / 645.082MB 10MB passed Verifying Free Space: rac2:/etc,rac2:/sbin ...PASSED Verifying Free Space: rac2:/tmp ... Path Node Name Mount point Available Required Status ---------------- ------------ ------------ ------------ ------------ ------------ /tmp rac2 /tmp 9.6817GB 5GB passed Verifying Free Space: rac2:/tmp ...PASSED Verifying User Existence: grid ... 
Node Name Status Comment ------------ ------------------------ ------------------------ rac1 passed exists(1100) rac2 passed exists(1100) Verifying Users With Same UID: 1100 ...PASSED Verifying User Existence: grid ...PASSED Verifying Group Existence: asmadmin ... Node Name Status Comment ------------ ------------------------ ------------------------ rac1 passed exists rac2 passed exists Verifying Group Existence: asmadmin ...PASSED Verifying Group Existence: asmoper ... Node Name Status Comment ------------ ------------------------ ------------------------ rac1 passed exists rac2 passed exists Verifying Group Existence: asmoper ...PASSED Verifying Group Existence: asmdba ... Node Name Status Comment ------------ ------------------------ ------------------------ rac1 passed exists rac2 passed exists Verifying Group Existence: asmdba ...PASSED Verifying Group Existence: oinstall ... Node Name Status Comment ------------ ------------------------ ------------------------ rac1 passed exists rac2 passed exists Verifying Group Existence: oinstall ...PASSED Verifying Group Membership: asmdba ... Node Name User Exists Group Exists User in Group Status ---------------- ------------ ------------ ------------ ---------------- rac1 yes yes yes passed rac2 yes yes yes passed Verifying Group Membership: asmdba ...PASSED Verifying Group Membership: asmadmin ... Node Name User Exists Group Exists User in Group Status ---------------- ------------ ------------ ------------ ---------------- rac1 yes yes yes passed rac2 yes yes yes passed Verifying Group Membership: asmadmin ...PASSED Verifying Group Membership: oinstall(Primary) ... Node Name User Exists Group Exists User in Group Primary Status ---------------- ------------ ------------ ------------ ------------ ------------ rac1 yes yes yes yes passed rac2 yes yes yes yes passed Verifying Group Membership: oinstall(Primary) ...PASSED Verifying Group Membership: asmoper ... 
Node Name User Exists Group Exists User in Group Status ---------------- ------------ ------------ ------------ ---------------- rac1 yes yes yes passed rac2 yes yes yes passed Verifying Group Membership: asmoper ...PASSED Verifying Run Level ... Node Name run level Required Status ------------ ------------------------ ------------------------ ---------- rac1 2 2 passed rac2 2 2 passed Verifying Run Level ...PASSED Verifying Hard Limit: maximum open file descriptors ... Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac1 hard 9223372036854776000 65536 passed rac2 hard 9223372036854776000 65536 passed Verifying Hard Limit: maximum open file descriptors ...PASSED Verifying Soft Limit: maximum open file descriptors ... Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac1 soft 9223372036854776000 1024 passed rac2 soft 9223372036854776000 1024 passed Verifying Soft Limit: maximum open file descriptors ...PASSED Verifying Hard Limit: maximum user processes ... Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac1 hard 16384 16384 passed rac2 hard 16384 16384 passed Verifying Hard Limit: maximum user processes ...PASSED Verifying Soft Limit: maximum user processes ... Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac1 soft 16384 2047 passed rac2 soft 16384 2047 passed Verifying Soft Limit: maximum user processes ...PASSED Verifying Hard Limit: maximum stack size ... Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac1 hard 9007199254740991 32768 passed rac2 hard 9007199254740991 32768 passed Verifying Hard Limit: maximum stack size ...PASSED Verifying Soft Limit: maximum stack size ... 
Node Name Type Available Required Status ---------------- ------------ ------------ ------------ ---------------- rac1 soft 9007199254740991 10240 passed rac2 soft 9007199254740991 10240 passed Verifying Soft Limit: maximum stack size ...PASSED Verifying Oracle patch:27006180 ... Node Name Applied Required Comment ------------ ------------------------ ------------------------ ---------- rac1 27006180 27006180 passed rac2 27006180 27006180 passed Verifying Oracle patch:27006180 ...PASSED Verifying This test checks that the source home "/grid/app/12.2.0.1/grid_home" is suitable for upgrading to version "19.0.0.0.0". ...PASSED Verifying Architecture ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 powerpc powerpc passed rac2 powerpc powerpc passed Verifying Architecture ...PASSED Verifying OS Kernel Version ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 7.2-7200.04.04.2114 7.2-7200-02-01-1731 passed rac2 7.2-7200.04.04.2114 7.2-7200-02-01-1731 passed Verifying OS Kernel Version ...PASSED Verifying OS Kernel Parameter: ncargs ... Node Name Current Required Status Comment ---------------- ------------ ------------ ------------ ---------------- rac1 1024 128 passed rac2 1024 128 passed Verifying OS Kernel Parameter: ncargs ...PASSED Verifying OS Kernel Parameter: maxuproc ... Node Name Current Required Status Comment ---------------- ------------ ------------ ------------ ---------------- rac1 16384 16384 passed rac2 16384 16384 passed Verifying OS Kernel Parameter: maxuproc ...PASSED Verifying OS Kernel Parameter: tcp_ephemeral_low ... Node Name Current Required Status Comment ---------------- ------------ ------------ ------------ ---------------- rac1 32768 at least 9000 passed rac2 32768 at least 9000 passed Verifying OS Kernel Parameter: tcp_ephemeral_low ...PASSED Verifying OS Kernel Parameter: tcp_ephemeral_high ... 
Node Name Current Required Status Comment ---------------- ------------ ------------ ------------ ---------------- rac1 65535 at most 65535 passed rac2 65535 at most 65535 passed Verifying OS Kernel Parameter: tcp_ephemeral_high ...PASSED Verifying OS Kernel Parameter: udp_ephemeral_low ... Node Name Current Required Status Comment ---------------- ------------ ------------ ------------ ---------------- rac1 32768 at least 9000 passed rac2 32768 at least 9000 passed Verifying OS Kernel Parameter: udp_ephemeral_low ...PASSED Verifying OS Kernel Parameter: udp_ephemeral_high ... Node Name Current Required Status Comment ---------------- ------------ ------------ ------------ ---------------- rac1 65535 at most 65535 passed rac2 65535 at most 65535 passed Verifying OS Kernel Parameter: udp_ephemeral_high ...PASSED Verifying Package: bos.adt.base ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 bos.adt.base bos.adt.base passed rac2 bos.adt.base bos.adt.base passed Verifying Package: bos.adt.base ...PASSED Verifying Package: xlfrte.aix61-15.1.0.9 ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 xlfrte.aix61-16.1.0.0-0 xlfrte.aix61-15.1.0.9 passed rac2 xlfrte.aix61-16.1.0.0-0 xlfrte.aix61-15.1.0.9 passed Verifying Package: xlfrte.aix61-15.1.0.9 ...PASSED Verifying Package: bos.adt.lib ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 bos.adt.lib bos.adt.lib passed rac2 bos.adt.lib bos.adt.lib passed Verifying Package: bos.adt.lib ...PASSED Verifying Package: bos.adt.libm ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 bos.adt.libm bos.adt.libm passed rac2 bos.adt.libm bos.adt.libm passed Verifying Package: bos.adt.libm ...PASSED Verifying Package: bos.perf.libperfstat ... 
Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 bos.perf.libperfstat bos.perf.libperfstat passed rac2 bos.perf.libperfstat bos.perf.libperfstat passed Verifying Package: bos.perf.libperfstat ...PASSED Verifying Package: bos.perf.perfstat ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 bos.perf.perfstat bos.perf.perfstat passed rac2 bos.perf.perfstat bos.perf.perfstat passed Verifying Package: bos.perf.perfstat ...PASSED Verifying Package: bos.perf.proctools ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 bos.perf.proctools bos.perf.proctools passed rac2 bos.perf.proctools bos.perf.proctools passed Verifying Package: bos.perf.proctools ...PASSED Verifying Package: xlC.aix61.rte-13.1.3.1 ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 xlC.aix61.rte-16.1.0.3-0 xlC.aix61.rte-13.1.3.1 passed rac2 xlC.aix61.rte-16.1.0.3-0 xlC.aix61.rte-13.1.3.1 passed Verifying Package: xlC.aix61.rte-13.1.3.1 ...PASSED Verifying Package: xlC.rte-13.1.3.1 ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 xlC.rte-16.1.0.3-0 xlC.rte-13.1.3.1 passed rac2 xlC.rte-16.1.0.3-0 xlC.rte-13.1.3.1 passed Verifying Package: xlC.rte-13.1.3.1 ...PASSED Verifying Package: unzip-6.00 ... Node Name Available Required Status ------------ ------------------------ ------------------------ ---------- rac1 unzip-6.0-3-6.0-3-0 unzip-6.00 passed rac2 unzip-6.0-3-6.0-3-0 unzip-6.00 passed Verifying Package: unzip-6.00 ...PASSED Verifying Users With Same UID: 0 ...PASSED Verifying Current Group ID ...PASSED Verifying Root user consistency ... 
Node Name Status ------------------------------------ ------------------------ rac1 passed rac2 passed Verifying Root user consistency ...PASSED Verifying correctness of ASM disk group files ownership ...PASSED Verifying selectivity of ASM discovery string ...PASSED Verifying ASM spare parameters ...PASSED Verifying Disk group ASM compatibility setting ...PASSED Verifying Host name ...PASSED Verifying Node Connectivity ... Verifying Hosts File ... Node Name Status ------------------------------------ ------------------------ rac1 passed rac2 passed Verifying Hosts File ...PASSED Interface information for node "rac1" Name IP Address Subnet Gateway Def. Gateway HW Address MTU ------ --------------- --------------- --------------- --------------- ----------------- ------ en0 10.20.30.101 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500 en0 10.20.30.107 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500 en0 10.20.30.103 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500 en0 10.20.30.106 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:6C:9A:FB 1500 en1 10.1.2.201 10.1.2.0 0.0.0.0 UNKNOWN 08:00:27:7E:D7:1A 1500 Interface information for node "rac2" Name IP Address Subnet Gateway Def. Gateway HW Address MTU ------ --------------- --------------- --------------- --------------- ----------------- ------ en0 10.20.30.102 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:79:B4:29 1500 en0 10.20.30.104 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:79:B4:29 1500 en0 10.20.30.105 10.20.30.0 0.0.0.0 UNKNOWN 08:00:27:79:B4:29 1500 en1 10.1.2.202 10.1.2.0 0.0.0.0 UNKNOWN 08:00:27:73:FE:D9 1500 Check: MTU consistency on the private interfaces of subnet "10.1.2.0" Node Name IP Address Subnet MTU ---------------- ------------ ------------ ------------ ---------------- rac1 en1 10.1.2.201 10.1.2.0 1500 rac2 en1 10.1.2.202 10.1.2.0 1500 Check: MTU consistency of the subnet "10.20.30.0". 
Node Name IP Address Subnet MTU ---------------- ------------ ------------ ------------ ---------------- rac1 en0 10.20.30.101 10.20.30.0 1500 rac1 en0 10.20.30.107 10.20.30.0 1500 rac1 en0 10.20.30.103 10.20.30.0 1500 rac1 en0 10.20.30.106 10.20.30.0 1500 rac2 en0 10.20.30.102 10.20.30.0 1500 rac2 en0 10.20.30.104 10.20.30.0 1500 rac2 en0 10.20.30.105 10.20.30.0 1500 Source Destination Connected? ------------------------------ ------------------------------ ---------------- rac1[en0:10.20.30.101] rac1[en0:10.20.30.107] yes rac1[en0:10.20.30.101] rac1[en0:10.20.30.103] yes rac1[en0:10.20.30.101] rac1[en0:10.20.30.106] yes rac1[en0:10.20.30.101] rac2[en0:10.20.30.102] yes rac1[en0:10.20.30.101] rac2[en0:10.20.30.104] yes rac1[en0:10.20.30.101] rac2[en0:10.20.30.105] yes rac1[en0:10.20.30.107] rac1[en0:10.20.30.103] yes rac1[en0:10.20.30.107] rac1[en0:10.20.30.106] yes rac1[en0:10.20.30.107] rac2[en0:10.20.30.102] yes rac1[en0:10.20.30.107] rac2[en0:10.20.30.104] yes rac1[en0:10.20.30.107] rac2[en0:10.20.30.105] yes rac1[en0:10.20.30.103] rac1[en0:10.20.30.106] yes rac1[en0:10.20.30.103] rac2[en0:10.20.30.102] yes rac1[en0:10.20.30.103] rac2[en0:10.20.30.104] yes rac1[en0:10.20.30.103] rac2[en0:10.20.30.105] yes rac1[en0:10.20.30.106] rac2[en0:10.20.30.102] yes rac1[en0:10.20.30.106] rac2[en0:10.20.30.104] yes rac1[en0:10.20.30.106] rac2[en0:10.20.30.105] yes rac2[en0:10.20.30.102] rac2[en0:10.20.30.104] yes rac2[en0:10.20.30.102] rac2[en0:10.20.30.105] yes rac2[en0:10.20.30.104] rac2[en0:10.20.30.105] yes Source Destination Connected? ------------------------------ ------------------------------ ---------------- rac1[en1:10.1.2.201] rac2[en1:10.1.2.202] yes Verifying subnet mask consistency for subnet "10.1.2.0" ...PASSED Verifying subnet mask consistency for subnet "10.20.30.0" ...PASSED Verifying Node Connectivity ...PASSED Verifying Multicast or broadcast check ... 
Checking subnet "10.20.30.0" for multicast communication with multicast group "224.0.0.251" Verifying Multicast or broadcast check ...PASSED Verifying ACFS Driver Checks ...PASSED Verifying OCR Integrity ...PASSED Verifying Network Time Protocol (NTP) ... Verifying '/etc/ntp.conf' ... Node Name File exists? ------------------------------------ ------------------------ rac1 yes rac2 yes Verifying '/etc/ntp.conf' ...PASSED Verifying Daemon 'xntpd' ... Node Name Running? ------------------------------------ ------------------------ rac1 yes rac2 yes Verifying Daemon 'xntpd' ...PASSED Verifying NTP daemon or service using UDP port 123 ... Node Name Port Open? ------------------------------------ ------------------------ rac1 yes rac2 yes Verifying NTP daemon or service using UDP port 123 ...PASSED Verifying Network Time Protocol (NTP) ...PASSED Verifying Same core file name pattern ...PASSED Verifying User Mask ... Node Name Available Required Comment ------------ ------------------------ ------------------------ ---------- rac1 022 0022 passed rac2 022 0022 passed Verifying User Mask ...PASSED Verifying User Not In Group "root": grid ... 
Node Name Status Comment ------------ ------------------------ ------------------------ rac1 passed does not exist rac2 passed does not exist Verifying User Not In Group "root": grid ...PASSED Verifying Time zone consistency ...PASSED Verifying Time offset between nodes ...PASSED Verifying VIP Subnet configuration check ...PASSED Verifying Voting Disk ...PASSED Verifying resolv.conf Integrity ...WARNING (PRVG-2016) Verifying DNS/NIS name service ...PASSED Verifying OS Kernel 64-Bit ...PASSED Verifying Virtual memory manager parameter: minperm% ...INFORMATION (PRVG-11250) Verifying Virtual memory manager parameter: maxperm% ...INFORMATION (PRVG-11250) Verifying Virtual memory manager parameter: maxclient% ...INFORMATION (PRVG-11250) Verifying Virtual memory manager parameter: page_steal_method ...INFORMATION (PRVG-11250) Verifying Virtual memory manager parameter: vmm_klock_mode ...INFORMATION (PRVG-11250) Verifying Processor scheduler manager parameter: vpm_xvcpus ...PASSED Verifying Privileged group consistency for upgrade ...PASSED Verifying CRS user Consistency for upgrade ...PASSED Verifying Clusterware Version Consistency ... Verifying cluster upgrade state ...PASSED Verifying Clusterware Version Consistency ...PASSED Verifying Check incorrectly sized ASM Disks ...PASSED Verifying Network configuration consistency checks ...PASSED Verifying File system mount options for path GI_HOME ...PASSED Verifying OLR Integrity ...PASSED Verifying Verify that the ASM instance was configured using an existing ASM parameter file. 
...PASSED Verifying User Equivalence ...PASSED Verifying Network parameter - ipqmaxlen ...PASSED Verifying Network parameter - rfc1323 ...PASSED Verifying Network parameter - sb_max ...PASSED Verifying Network parameter - tcp_sendspace ...PASSED Verifying Network parameter - tcp_recvspace ...PASSED Verifying Network parameter - udp_sendspace ...PASSED Verifying Network parameter - udp_recvspace ...PASSED Verifying File system mount options for path /var ...PASSED Verifying I/O Completion Ports (IOCP) device status ...PASSED Verifying Berkeley Packet Filter devices /dev/bpf* existence and validation check ...PASSED Pre-check for cluster services setup was successful. Warnings were encountered during execution of CVU verification request "stage -pre crsinst". Verifying resolv.conf Integrity ...WARNING rac1: PRVG-2016 : File "/etc/resolv.conf" on node "rac1" has both 'search' and 'domain' entries. rac2: PRVG-2016 : File "/etc/resolv.conf" on node "rac2" has both 'search' and 'domain' entries. Verifying Virtual memory manager parameter: minperm% ...INFORMATION PRVG-11250 : The check "Virtual memory manager parameter: minperm% " was not performed because it needs 'root' user privileges. Verifying Virtual memory manager parameter: maxperm% ...INFORMATION PRVG-11250 : The check "Virtual memory manager parameter: maxperm% " was not performed because it needs 'root' user privileges. Verifying Virtual memory manager parameter: maxclient% ...INFORMATION PRVG-11250 : The check "Virtual memory manager parameter: maxclient% " was not performed because it needs 'root' user privileges. Verifying Virtual memory manager parameter: page_steal_method ...INFORMATION PRVG-11250 : The check "Virtual memory manager parameter: page_steal_method " was not performed because it needs 'root' user privileges. 
Verifying Virtual memory manager parameter: vmm_klock_mode ...INFORMATION PRVG-11250 : The check "Virtual memory manager parameter: vmm_klock_mode " was not performed because it needs 'root' user privileges. CVU operation performed: stage -pre crsinst Date: Sep 15, 2021 6:56:58 PM CVU home: /grid19c/app/19.3.0.0/grid/ User: grid
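The verbose CVU output is long, and the lines that matter are the ones that did not end in PASSED. A minimal sketch for pulling those out of a saved log follows. The function name `cvu_findings` is hypothetical, the sample lines are copied from the run above, and the assumption that failed checks end in `...FAILED` in the same way is mine, since this run produced none.

```shell
#!/bin/sh
# cvu_findings: read CVU output on stdin and print only the "Verifying ..."
# summary lines whose result is WARNING, FAILED, or INFORMATION.
cvu_findings() {
    grep -E 'Verifying .*\.\.\. ?(WARNING|FAILED|INFORMATION)'
}

# A few summary lines taken from the output above.
sample="Verifying Voting Disk ...PASSED
Verifying resolv.conf Integrity ...WARNING (PRVG-2016)
Verifying DNS/NIS name service ...PASSED
Verifying Virtual memory manager parameter: minperm% ...INFORMATION (PRVG-11250)"

printf '%s\n' "$sample" | cvu_findings
```

Against a real run, the output of runcluvfy.sh could be saved to a file and passed in with `cvu_findings < cluvfy.log`, where the file name is only an example.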


Step 5: Log in as the grid user, go to the new GRID_HOME, and execute the commands below to start the installation. The -applyRU option applies a Release Update to the new home before the installer starts, so you can download the latest patch and apply it in the same run. Here, I applied patch 32545008.

    $unset ORACLE_SID
    $unset ORACLE_HOME
    $cd /grid19c/app/19.3.0.0/grid
    $./gridSetup.sh -applyRU /grid19c/patch_19cRU/32545008
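Before launching the installer it can help to confirm that both inputs to `-applyRU` are actually in place. The following is a small sketch under the assumption that a plain file and directory check is enough. `preflight` is a hypothetical helper, not part of the Oracle tooling, and the paths are the ones used in this walkthrough.

```shell
#!/bin/sh
# preflight GRID_HOME RU_DIR: succeed only when gridSetup.sh is executable in
# the new home and the Release Update directory exists.
preflight() {
    grid_home=$1 ru_dir=$2
    [ -x "$grid_home/gridSetup.sh" ] || {
        echo "missing or non-executable: $grid_home/gridSetup.sh"; return 1; }
    [ -d "$ru_dir" ] || {
        echo "patch directory not found: $ru_dir"; return 1; }
    echo "ready: $grid_home/gridSetup.sh -applyRU $ru_dir"
}

# Paths from this walkthrough; adjust them for your environment.
preflight /grid19c/app/19.3.0.0/grid /grid19c/patch_19cRU/32545008 ||
    echo "fix the problem above before starting the installer"
```

The check only looks at the filesystem; it does not validate the patch contents or the OPatch version.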

The installer asked for rootpre.sh to be run as root before the GUI would start. I opened a second session as root, executed rootpre.sh, and then started the installation again.

Once the patch has been applied successfully on the local node, the GUI console starts. Select the option "Upgrade Oracle Grid Infrastructure". A Grid upgrade is not like an Oracle database upgrade: for a database, we first install the RDBMS software and then upgrade the database as a separate step, whereas for Grid there is no separate software installation, and the upgrade is performed directly as part of the installation.

The next screen asks you to select the cluster nodes to be upgraded.

Select the correct GRID_BASE location.

The following screen shows the informational, warning, and failed checks that need to be corrected manually.

I ignored these messages because the parameters in question are already set to the expected values.

Once all the steps are done, the final step is to execute the rootupgrade.sh script manually. A pop-up box prompts you to do so. Log in as root and run rootupgrade.sh on the nodes one at a time. If the script fails on the first node, resolve the issue there before running it on the second node; the script will not run on the second node until it has completed on the first.
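Because the second node must wait for the first, it is useful to confirm from the rootupgrade.sh log that the first run really finished. The sketch below assumes the message format seen in the transcripts in this post: `CLSRSC-595` step lines, and a final `CLSRSC-325` line ending in `succeeded`. The helper names `last_step` and `upgrade_succeeded` are hypothetical.

```shell
#!/bin/sh
# last_step: read a rootupgrade.sh log on stdin and print the most recent
# "Executing upgrade step N of M" entry as "N/M".
last_step() {
    sed -n 's|.*CLSRSC-595: Executing upgrade step \([0-9][0-9]*\) of \([0-9][0-9]*\).*|\1/\2|p' |
        tail -n 1
}

# upgrade_succeeded: succeed when the log contains the closing CLSRSC-325
# message reporting success.
upgrade_succeeded() {
    grep -q 'CLSRSC-325: .*succeeded'
}

# Sample lines in the format written by rootupgrade.sh on rac1.
sample="2021/09/15 20:54:57 CLSRSC-595: Executing upgrade step 17 of 18: 'UpgradeNode'.
2021/09/15 20:57:35 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.
2021/09/15 20:57:59 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded"

echo "last step reached: $(printf '%s\n' "$sample" | last_step)"
if printf '%s\n' "$sample" | upgrade_succeeded; then
    echo "node 1 finished; safe to continue with node 2"
else
    echo "node 1 did not report success; do not start node 2"
fi
```

On a real system the input would be the rootcrs log under the crsconfig directory that rootupgrade.sh names at startup, instead of the inline sample.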

Node1:

root@rac1:/#id
uid=0(root) gid=0(system) groups=2(bin),3(sys),7(security),8(cron),10(audit),11(lp),214(sftpgrp)
root@rac1:/#ps -ef | grep pmon
oracle 5964788 1 0 Jul 09 - 3:38 ora_pmon_ORCL
grid 6751028 1 0 Jul 09 - 0:52 asm_pmon_+ASM1
root 26936170 27001412 0 20:35:04 pts/0 0:00 grep pmon
grid 4785324 1 0 Jul 09 - 0:51 mdb_pmon_-MGMTDB
root@rac1:/#sh /grid19c/app/19.3.0.0/grid/rootupgrade.sh
Performing root user operation.
The following environment variables are set as:
ORACLE_OWNER= grid
ORACLE_HOME= /grid19c/app/19.3.0.0/grid
Enter the full pathname of the local bin directory: [/usr/local/bin]:
The contents of "dbhome" have not changed. No need to overwrite.
The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
Copying oraenv to /usr/local/bin ...
The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
Copying coraenv to /usr/local/bin ...
Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Relinking oracle with rac_on option
Using configuration parameter file: /grid19c/app/19.3.0.0/grid/crs/install/crsconfig_params
The log of current session can be found at:
/grid19c/app/grid/crsdata/rac1/crsconfig/rootcrs_rac1_2021-09-15_08-36-04PM.log
2021/09/15 20:36:58 CLSRSC-595: Executing upgrade step 1 of 18: 'UpgradeTFA'.
2021/09/15 20:36:59 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.
2021/09/15 20:37:00 CLSRSC-595: Executing upgrade step 2 of 18: 'ValidateEnv'.
2021/09/15 20:37:16 CLSRSC-595: Executing upgrade step 3 of 18: 'GetOldConfig'.
2021/09/15 20:37:16 CLSRSC-464: Starting retrieval of the cluster configuration data
2021/09/15 20:37:40 CLSRSC-692: Checking whether CRS entities are ready for upgrade. This operation may take a few minutes.
2021/09/15 20:40:43 CLSRSC-693: CRS entities validation completed successfully.
2021/09/15 20:41:04 CLSRSC-515: Starting OCR manual backup.
2021/09/15 20:41:25 CLSRSC-516: OCR manual backup successful.
2021/09/15 20:41:42 CLSRSC-486: At this stage of upgrade, the OCR has changed. Any attempt to downgrade the cluster after this point will require a complete cluster outage to restore the OCR.
2021/09/15 20:41:42 CLSRSC-541: To downgrade the cluster: 1. All nodes that have been upgraded must be downgraded.
2021/09/15 20:41:43 CLSRSC-542: 2. Before downgrading the last node, the Grid Infrastructure stack on all other cluster nodes must be down.
2021/09/15 20:41:59 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.
2021/09/15 20:41:59 CLSRSC-595: Executing upgrade step 4 of 18: 'GenSiteGUIDs'.
2021/09/15 20:42:02 CLSRSC-595: Executing upgrade step 5 of 18: 'UpgPrechecks'.
2021/09/15 20:42:13 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.
2021/09/15 20:42:43 CLSRSC-595: Executing upgrade step 6 of 18: 'SetupOSD'.
0513-095 The request for subsystem refresh was completed successfully.
2021/09/15 20:42:44 CLSRSC-595: Executing upgrade step 7 of 18: 'PreUpgrade'.
2021/09/15 20:45:01 CLSRSC-468: Setting Oracle Clusterware and ASM to rolling migration mode
2021/09/15 20:45:02 CLSRSC-482: Running command: '/grid/app/12.2.0.1/grid_home/bin/crsctl start rollingupgrade 19.0.0.0.0'
CRS-1131: The cluster was successfully set to rolling upgrade mode.
2021/09/15 20:45:07 CLSRSC-482: Running command: '/grid19c/app/19.3.0.0/grid/bin/asmca -silent -upgradeNodeASM -nonRolling false -oldCRSHome /grid/app/12.2.0.1/grid_home -oldCRSVersion 12.2.0.1.0 -firstNode true -startRolling false '
ASM configuration upgraded in local node successfully.
2021/09/15 20:45:22 CLSRSC-469: Successfully set Oracle Clusterware and ASM to rolling migration mode
2021/09/15 20:45:36 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack
2021/09/15 20:46:13 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.
2021/09/15 20:46:21 CLSRSC-595: Executing upgrade step 8 of 18: 'CheckCRSConfig'.
2021/09/15 20:46:26 CLSRSC-595: Executing upgrade step 9 of 18: 'UpgradeOLR'.
User grid has the required capabilities to run CSSD in realtime mode
2021/09/15 20:46:50 CLSRSC-595: Executing upgrade step 10 of 18: 'ConfigCHMOS'.
2021/09/15 20:46:51 CLSRSC-595: Executing upgrade step 11 of 18: 'UpgradeAFD'.
2021/09/15 20:47:11 CLSRSC-595: Executing upgrade step 12 of 18: 'createOHASD'.
2021/09/15 20:47:31 CLSRSC-595: Executing upgrade step 13 of 18: 'ConfigOHASD'.
2021/09/15 20:47:32 CLSRSC-329: Replacing Clusterware entries in file '/etc/inittab'
2021/09/15 20:48:47 CLSRSC-595: Executing upgrade step 14 of 18: 'InstallACFS'.
2021/09/15 20:50:27 CLSRSC-595: Executing upgrade step 15 of 18: 'InstallKA'.
2021/09/15 20:50:45 CLSRSC-595: Executing upgrade step 16 of 18: 'UpgradeCluster'.
2021/09/15 20:53:55 CLSRSC-343: Successfully started Oracle Clusterware stack
clscfg: EXISTING configuration version 5 detected.
Successfully taken the backup of node specific configuration in OCR.
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'system'..
Operation successful.
2021/09/15 20:54:57 CLSRSC-595: Executing upgrade step 17 of 18: 'UpgradeNode'.
2021/09/15 20:55:10 CLSRSC-474: Initiating upgrade of resource types
2021/09/15 20:56:58 CLSRSC-475: Upgrade of resource types successfully initiated.
2021/09/15 20:57:35 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.
2021/09/15 20:57:59 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
root@rac1:/grid/app/12.2.0.1#

Node2:

root@rac2:/#sh /grid19c/app/19.3.0.0/grid/rootupgrade.sh
Performing root user operation.
The following environment variables are set as:
ORACLE_OWNER= grid
ORACLE_HOME= /grid19c/app/19.3.0.0/grid
Enter the full pathname of the local bin directory: [/usr/local/bin]:
The contents of "dbhome" have not changed. No need to overwrite.
The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
Copying oraenv to /usr/local/bin ...
The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
Copying coraenv to /usr/local/bin ...
Entries will be added to the /etc/oratab file as needed by
Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Relinking oracle with rac_on option
Using configuration parameter file: /grid19c/app/19.3.0.0/grid/crs/install/crsconfig_params
The log of current session can be found at:
/grid19c/app/grid/crsdata/rac2/crsconfig/rootcrs_rac2_2021-09-15_09-01-07PM.log
2021/09/15 21:01:36 CLSRSC-595: Executing upgrade step 1 of 18: 'UpgradeTFA'.
2021/09/15 21:01:37 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.
2021/09/15 21:01:37 CLSRSC-595: Executing upgrade step 2 of 18: 'ValidateEnv'.
2021/09/15 21:01:40 CLSRSC-595: Executing upgrade step 3 of 18: 'GetOldConfig'.
2021/09/15 21:01:40 CLSRSC-464: Starting retrieval of the cluster configuration data
2021/09/15 21:02:13 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.
2021/09/15 21:02:14 CLSRSC-595: Executing upgrade step 4 of 18: 'GenSiteGUIDs'.
2021/09/15 21:02:14 CLSRSC-595: Executing upgrade step 5 of 18: 'UpgPrechecks'.
2021/09/15 21:02:21 CLSRSC-595: Executing upgrade step 6 of 18: 'SetupOSD'.
0513-095 The request for subsystem refresh was completed successfully.
2021/09/15 21:02:21 CLSRSC-595: Executing upgrade step 7 of 18: 'PreUpgrade'.
ASM configuration upgraded in local node successfully.
2021/09/15 21:02:46 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack
2021/09/15 21:03:31 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.
2021/09/15 21:03:41 CLSRSC-595: Executing upgrade step 8 of 18: 'CheckCRSConfig'.
2021/09/15 21:03:43 CLSRSC-595: Executing upgrade step 9 of 18: 'UpgradeOLR'.
User grid has the required capabilities to run CSSD in realtime mode
2021/09/15 21:03:54 CLSRSC-595: Executing upgrade step 10 of 18: 'ConfigCHMOS'.
2021/09/15 21:03:54 CLSRSC-595: Executing upgrade step 11 of 18: 'UpgradeAFD'.
2021/09/15 21:03:58 CLSRSC-595: Executing upgrade step 12 of 18: 'createOHASD'.
2021/09/15 21:04:01 CLSRSC-595: Executing upgrade step 13 of 18: 'ConfigOHASD'.
2021/09/15 21:04:02 CLSRSC-329: Replacing Clusterware entries in file '/etc/inittab'
2021/09/15 21:04:44 CLSRSC-595: Executing upgrade step 14 of 18: 'InstallACFS'.
2021/09/15 21:05:57 CLSRSC-595: Executing upgrade step 15 of 18: 'InstallKA'.
2021/09/15 21:06:01 CLSRSC-595: Executing upgrade step 16 of 18: 'UpgradeCluster'.
2021/09/15 21:06:23 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.
2021/09/15 21:08:57 CLSRSC-343: Successfully started Oracle Clusterware stack
clscfg: EXISTING configuration version 19 detected.
Successfully taken the backup of node specific configuration in OCR.
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'system'..
Operation successful.
2021/09/15 21:09:32 CLSRSC-595: Executing upgrade step 17 of 18: 'UpgradeNode'.
Start upgrade invoked..
2021/09/15 21:09:48 CLSRSC-478: Setting Oracle Clusterware active version on the last node to be upgraded
2021/09/15 21:09:49 CLSRSC-482: Running command: '/grid19c/app/19.3.0.0/grid/bin/crsctl set crs activeversion'
Started to upgrade the active version of Oracle Clusterware. This operation may take a few minutes.
Started to upgrade CSS.
CSS was successfully upgraded.
Started to upgrade Oracle ASM.
Started to upgrade CRS.
CRS was successfully upgraded.
Started to upgrade Oracle ACFS.
Oracle ACFS was successfully upgraded.
Successfully upgraded the active version of Oracle Clusterware.
Oracle Clusterware active version was successfully set to 19.0.0.0.0.
2021/09/15 21:10:59 CLSRSC-479: Successfully set Oracle Clusterware active version
2021/09/15 21:11:00 CLSRSC-476: Finishing upgrade of resource types
2021/09/15 21:11:19 CLSRSC-477: Successfully completed upgrade of resource types
2021/09/15 21:12:46 CLSRSC-595: Executing upgrade step 18 of 18: 'PostUpgrade'.
Successfully updated XAG resources.
2021/09/15 21:13:42 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Once the script has run successfully on both nodes, click OK in the installer to continue.
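Before clicking OK, it can help to confirm that rootupgrade.sh really reached the end on each node. In the transcripts above, the run finishes with the CLSRSC-325 message ending in "succeeded", and progress is reported through the CLSRSC-595 "step N of 18" messages. The following is a minimal sketch, assuming the console output was captured to a file (for example with tee). The function names and the capture file path are hypothetical, and the message layout inside the rootcrs_*.log file itself may differ from the console output shown here.

```shell
#!/bin/sh
# Hypothetical helpers: both read captured rootupgrade.sh console output on stdin.

# Succeeds (exit 0) only if the final CLSRSC-325 success message is present.
root_script_succeeded() {
    grep -q 'CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster \.\.\. succeeded'
}

# Prints "<step> <total> <name>" for the last CLSRSC-595 step message seen,
# which shows how far the script got if it stopped early.
last_upgrade_step() {
    sed -n "s/.*CLSRSC-595: Executing upgrade step \([0-9]*\) of \([0-9]*\): '\([^']*\)'.*/\1 \2 \3/p" |
        tail -n 1
}

# Example usage (the capture file name is an assumption, not from the post):
#   sh /grid19c/app/19.3.0.0/grid/rootupgrade.sh 2>&1 | tee /tmp/rootupgrade_rac1.out
#   root_script_succeeded < /tmp/rootupgrade_rac1.out && echo "rac1: root script completed"
#   last_upgrade_step < /tmp/rootupgrade_rac1.out
```

If root_script_succeeded fails, the output of last_upgrade_step points to the step to look for in the rootcrs log named at the top of the transcript.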

The installer then displays the final screen, confirming that the Grid Infrastructure upgrade completed successfully.

You can run the following post-upgrade checks to verify the upgrade.

Node1:

root@rac1:/grid19c/app/19.3.0.0/grid/bin#./crsctl query crs softwareversion
Oracle Clusterware version on node [rac1] is [19.0.0.0.0]
root@rac1:/grid19c/app/19.3.0.0/grid/bin#./crsctl query crs activeversion -f
Oracle Clusterware active version on the cluster is [19.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [2367005043].
root@rac1:/grid19c/app/19.3.0.0/grid/bin#./crsctl query crs releaseversion
Oracle High Availability Services release version on the local node is [19.0.0.0.0]
root@rac1:/grid19c/app/19.3.0.0/grid/bin#./crsctl query crs softwarepatch
Oracle Clusterware patch level on node rac1 is [2367005043].
root@rac1:/grid19c/app/19.3.0.0/grid/bin#./kfod op=patches
---------------
List of Patches
===============
32545013
32576499
32579761
32584670
32585572
root@rac1:/grid19c/app/19.3.0.0/grid/bin#./kfod op=patchlvl
-------------------
Current Patch level
===================
2367005043
root@rac1:/grid19c/app/19.3.0.0/grid/bin#./crsctl check crs
CRS-4638: Oracle High Availability Services is online
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
root@rac1:/grid19c/app/19.3.0.0/grid/bin#./crsctl stat res -t -init
--------------------------------------------------------------------------------
Name           Target  State        Server                   State details
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.asm
      1        ONLINE  ONLINE       rac1                     Started,STABLE
ora.cluster_interconnect.haip
      1        ONLINE  ONLINE       rac1                     STABLE
ora.crf
      1        ONLINE  ONLINE       rac1                     STABLE
ora.crsd
      1        ONLINE  ONLINE       rac1                     STABLE
ora.cssd
      1        ONLINE  ONLINE       rac1                     STABLE
ora.cssdmonitor
      1        ONLINE  ONLINE       rac1                     STABLE
ora.ctssd
      1        ONLINE  ONLINE       rac1                     OBSERVER,STABLE
ora.diskmon
      1        OFFLINE OFFLINE                               STABLE
ora.drivers.acfs
      1        ONLINE  ONLINE       rac1                     STABLE
ora.evmd
      1        ONLINE  ONLINE       rac1                     STABLE
ora.gipcd
      1        ONLINE  ONLINE       rac1                     STABLE
ora.gpnpd
      1        ONLINE  ONLINE       rac1                     STABLE
ora.mdnsd
      1        ONLINE  ONLINE       rac1                     STABLE
ora.storage
      1        ONLINE  ONLINE       rac1                     STABLE

Node2:

root@rac2:/grid19c/app/19.3.0.0/grid/bin#./kfod op=patches
---------------
List of Patches
===============
32545013
32576499
32579761
32584670
32585572
root@rac2:/grid19c/app/19.3.0.0/grid/bin#./kfod op=patchlvl
-------------------
Current Patch level
===================
2367005043
root@rac2:/grid19c/app/19.3.0.0/grid/bin#./crsctl query crs softwareversion
Oracle Clusterware version on node [rac2] is [19.0.0.0.0]
root@rac2:/grid19c/app/19.3.0.0/grid/bin#./crsctl query crs activeversion -f
Oracle Clusterware active version on the cluster is [19.0.0.0.0]. The cluster upgrade state is [NORMAL]. The cluster active patch level is [2367005043].
root@rac2:/grid19c/app/19.3.0.0/grid/bin#./crsctl query crs releaseversion
Oracle High Availability Services release version on the local node is [19.0.0.0.0]
root@rac2:/grid19c/app/19.3.0.0/grid/bin#./crsctl query crs softwarepatch
Oracle Clusterware patch level on node rac2 is [2367005043].
root@rac2:/grid19c/app/19.3.0.0/grid/bin#./crsctl check crs
CRS-4638: Oracle High Availability Services is online
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
root@rac2:/grid19c/app/19.3.0.0/grid/bin#./crsctl stat res -t -init
--------------------------------------------------------------------------------
Name           Target  State        Server                   State details
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.asm
      1        ONLINE  ONLINE       rac2                     STABLE
ora.cluster_interconnect.haip
      1        ONLINE  ONLINE       rac2                     STABLE
ora.crf
      1        ONLINE  ONLINE       rac2                     STABLE
ora.crsd
      1        ONLINE  ONLINE       rac2                     STABLE
ora.cssd
      1        ONLINE  ONLINE       rac2                     STABLE
ora.cssdmonitor
      1        ONLINE  ONLINE       rac2                     STABLE
ora.ctssd
      1        ONLINE  ONLINE       rac2                     OBSERVER,STABLE
ora.diskmon
      1        OFFLINE OFFLINE                               STABLE
ora.drivers.acfs
      1        ONLINE  ONLINE       rac2                     STABLE
ora.evmd
      1        ONLINE  ONLINE       rac2                     STABLE
ora.gipcd
      1        ONLINE  ONLINE       rac2                     STABLE
ora.gpnpd
      1        ONLINE  ONLINE       rac2                     STABLE
ora.mdnsd
      1        ONLINE  ONLINE       rac2                     STABLE
ora.storage
      1        ONLINE  ONLINE       rac2                     STABLE
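The two values that matter most in these checks are the active version and the cluster upgrade state reported by crsctl query crs activeversion -f: after a finished upgrade they should read 19.0.0.0.0 and NORMAL, as in the output above. A minimal sketch of scripting that comparison follows. The function names are hypothetical, and the parsing assumes the single-line message format shown in this post.

```shell
#!/bin/sh
# Hypothetical helpers: each reads `crsctl query crs activeversion -f` output on stdin.

# Prints the bracketed active version, e.g. 19.0.0.0.0
active_version() {
    sed -n 's/.*active version on the cluster is \[\([^]]*\)].*/\1/p'
}

# Prints the bracketed cluster upgrade state, e.g. NORMAL
upgrade_state() {
    sed -n 's/.*cluster upgrade state is \[\([^]]*\)].*/\1/p'
}

# Succeeds only if the version equals $1 and the upgrade state is NORMAL.
upgrade_complete() {
    expected_version=$1
    out=$(cat)
    [ "$(printf '%s\n' "$out" | active_version)" = "$expected_version" ] &&
        [ "$(printf '%s\n' "$out" | upgrade_state)" = "NORMAL" ]
}

# Example usage on a cluster node, run from the new Grid home's bin directory:
#   ./crsctl query crs activeversion -f | upgrade_complete 19.0.0.0.0 \
#       && echo "cluster upgrade finished"
```

A state other than NORMAL typically means the root script has not yet completed on every node, so the check is worth repeating after the last node is done.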

Thanks for reading this post! Please leave a comment if you liked it.
