Environment Configuration Details:

Operating System: Oracle Linux 8.7 64 Bit

Oracle and Grid Software version: 19.0.0.0

RAC: YES

DNS: No

Note: I have observed that oracleasm fails to load on the current kernel version "5.15.0-3.60.5.1.el8uek.x86_64" on Linux 8.7, even though the oracleasmlib, oracleasm-support, and kmod RPM packages are properly installed.

    [root@rac1 ~]# oracleasm init
    Creating /dev/oracleasm mount point: /dev/oracleasm
    Loading module "oracleasm": failed
    Unable to load module "oracleasm"
    Mounting ASMlib driver filesystem: failed
    Unable to mount ASMlib driver filesystem

Instead of downgrading the kernel version, I decided to configure the ASM devices using udev rules rather than oracleasm. Let's configure Oracle Grid Infrastructure on Linux 8.7 with the storage devices set up by the udev rules method.

Points to be checked before starting RAC installation prerequisites:

1) Am I downloading GRID and RDBMS software of the correct version for the correct Operating System?
2) Are my GRID and database certified on the installed Operating System?
3) Is my GRID and database software architecture 32 bit or 64 bit?
4) Is the Operating System architecture 32 bit or 64 bit?
5) Is the Operating System kernel version compatible with the Oracle software to be installed?
6) Is the server runlevel 3 or 5?
7) The Oracle Clusterware version must be equal to or greater than the Oracle RAC version you plan to install.
8) To install Oracle 19c RAC, you must install Oracle Grid Infrastructure (Oracle Clusterware and ASM) 19c on your cluster.
9) Oracle Clusterware requires the same timezone environment variable settings on all cluster nodes.
10) Use identical server hardware on each cluster node to simplify server maintenance activities.

High Level Steps to configure Oracle RAC on VirtualBox Linux:

1) VirtualBox Configuration Step by Step
2) Linux Operating System RHEL 8.7 Installation Step by Step
3) GRID 19c Software Installation Step by Step
- Create Logical Partitions
- Configure ASM devices by udev.rules method
- SSH Configuration manually
- Download Softwares - GRID | RDBMS | Patches | OPatch
- Applying Patch before installation

Oracle 19c 2-Node RAC Software Installation Requirement - For Test Environment

RAM and CPU

- RAM - At least 8 GB RAM on each cluster node. Later, it can be increased as per business requirement.
- Swap Memory
  - If RAM is between 4 GB and 16 GB - equal to RAM
  - If RAM is more than 16 GB - 16 GB
- CPU - Minimum 2 CPUs for installation. Later, it can be increased as per business requirement.

Storage

| Disk Group/File System | Size (GB) | Description |
|---|---|---|
| OCR | 30 | 3 disks of 10 GB each (ASM Disk) |
| DATA | 10 | 2 disks of 5 GB each (ASM Disk) |
| REDO1 | 5 | 1 disk of 5 GB (ASM Disk) |
| REDO2 | 5 | 1 disk of 5 GB (ASM Disk) |
| ARCH | 5 | 1 disk of 5 GB (ASM Disk) - Optional |
| /oracle | 50 | 1 disk of 50 GB (Local FS on each Node) |
| /oswatcher | 50 | 1 disk of 50 GB (Local FS on each Node) - Optional |
| /tmp | 1 | At least 1 GB of space in the /tmp directory. |

Network

- 1 Physical Network Card (at least 1 GbE) - Public (Local)
- 1 Physical Network Card (at least 1 GbE) - Private (Local)

Note: Please note that the above requirements are for my testing environment only. You can refer to the requirements below for your actual production server. The DATA and ARCH disk group space requirement is based on your production requirements.
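The swap sizing rule listed above can be written as a small shell function. This is only a sketch of the two rules stated in this article; the function name `recommended_swap_gb` is hypothetical, and because no rule is given here for less than 4 GB of RAM, the function refuses to guess in that case.

```shell
# Recommended swap size in GB for a given RAM size in GB,
# following only the two rules listed in this article.
recommended_swap_gb() {
    swap_ram_gb=$1
    if [ "$swap_ram_gb" -lt 4 ]; then
        # The article states no rule below 4 GB, so do not guess.
        echo "no rule stated for less than 4 GB RAM" >&2
        return 1
    elif [ "$swap_ram_gb" -le 16 ]; then
        # Between 4 GB and 16 GB: swap equals RAM.
        echo "$swap_ram_gb"
    else
        # More than 16 GB: swap is capped at 16 GB.
        echo 16
    fi
}

# Example: the 8 GB test nodes used here need 8 GB of swap.
recommended_swap_gb 8
```

On a running server, the actual values can be compared against this with `free -g`.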

Oracle 19c 2-Node RAC Software Installation Requirement - For Actual Production

RAM and CPU

- RAM - At least 8 GB RAM on each cluster node. Later, it can be increased as per business requirement.
- Swap Memory
  - If RAM is between 4 GB and 16 GB - equal to RAM
  - If RAM is more than 16 GB - 16 GB
- CPU - Minimum 2 CPUs for installation. Later, it can be increased as per business requirement.

Storage

| Disk Group/File System | Size | Description |
|---|---|---|
| OCR | 150 GB | 3 disks of 50 GB each (ASM Disk) |
| DATA | 2 TB | 4 disks of 500 GB each (ASM Disk) |
| REDO1 | 50 GB | 1 disk of 50 GB (ASM Disk) |
| REDO2 | 50 GB | 1 disk of 50 GB (ASM Disk) |
| ARCH | 500 GB | 1 disk of 500 GB (ASM Disk) |
| /oracle | 200 GB | 1 disk of 200 GB (Local FS on each Node) |
| /oswatcher | 100 GB | 1 disk of 100 GB (Local FS on each Node) - Optional |
| /tmp | 1 GB | At least 1 GB of space in the /tmp directory. |

Network

- 1 Physical Network Card (at least 1 GbE) - Public (Local)
- 1 Physical Network Card (at least 1 GbE) - Private (Local)

Important Tips before installing any Oracle RAC software:

1) Cluster IPs (VIPs, SCAN IPs) should not be in use or respond to ping before installation, except the Public and Private IPs.

2) Stop any third-party antivirus tools on all cluster nodes before installation. Ask the third-party vendor to exclude the Private IPs from scanning to avoid RAC node evictions and resource utilisation issues.

3) Public and Private Ethernet card names must be the same across all cluster nodes, i.e. if the public Ethernet card name on Node1 is eth1 and the private Ethernet card name on Node1 is eth2, then the public Ethernet card name on Node2 must be eth1 and the private Ethernet card name on Node2 must be eth2.

4) Ensure that the /dev/shm mount area is of type tmpfs and is mounted with the below options:
- rw and exec permissions
- Without noexec or nosuid

5) Oracle strongly recommends disabling THP (Transparent HugePages) and using standard HugePages to avoid memory allocation delays and performance issues.

6) For a 2-Node RAC configuration, you need:
- 2 public IPs (one for each node)
- 2 private IPs (one for each node)
- 2 virtual IPs (one for each node)
- 1 or 3 SCAN IPs (if it is 1, define it in the /etc/hosts file; if it is 3, use DNS for round-robin resolution).

7) The Public, VIP, and SCAN IP series should be the same, and the private IP series should be different from the Public, VIP, and SCAN series. Please note that the public, VIP, and SCAN series can be different if the same IP range is not available, but the VIP and SCAN IPs must be on the same subnet as the public IP address.
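Tips 4 and 5 can be checked from the command line. The sketch below assumes the mount options are available as a comma-separated string (for example from `findmnt`); the function name `shm_options_ok` is hypothetical and it implements only the rule as stated in tip 4.

```shell
# Return 0 if a comma-separated mount option string satisfies tip 4:
# it must contain rw and must not contain noexec or nosuid.
shm_options_ok() {
    case ",$1," in
        *,noexec,*|*,nosuid,*) return 1 ;;
    esac
    case ",$1," in
        *,rw,*) return 0 ;;
    esac
    return 1
}

# Live checks, left commented out so the sketch can be sourced safely:
# shm_options_ok "$(findmnt -n -o OPTIONS /dev/shm)" && echo "/dev/shm options OK"
# cat /sys/kernel/mm/transparent_hugepage/enabled   # "[never]" means THP is disabled
```

A failing check only points at the option to review in /etc/fstab; it does not change anything on the server.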

Step 1: Downloading Software

- VirtualBox

- Linux Operating System

- Oracle GRID and RDBMS Software

- Patches and OPatch

1) For downloading VirtualBox, refer to the screens below. You can download the latest VirtualBox.

2) For downloading the Linux Operating System image setup, refer to the screens below:

3) For downloading the Oracle software (GRID and RDBMS), refer to the screens below:

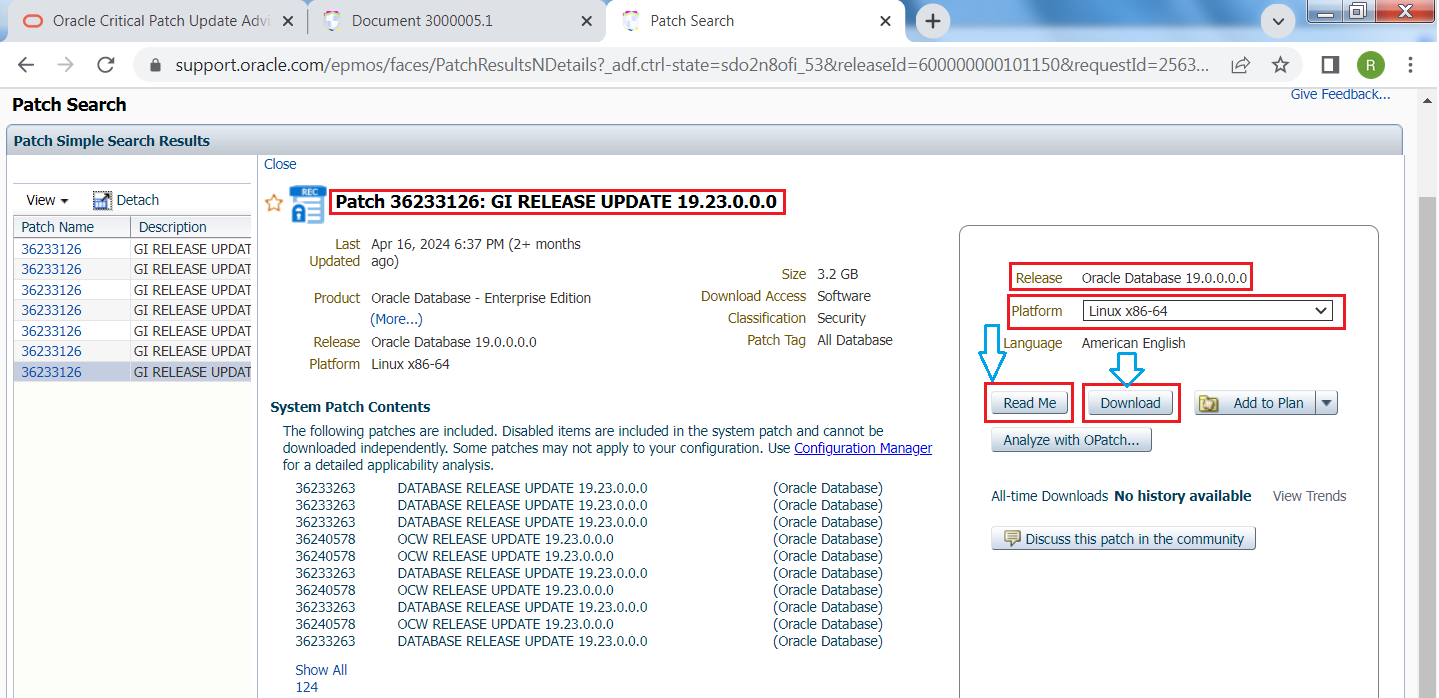

4) For downloading the Oracle GRID patches and OPatch, refer to the screens below:

Step 2: Virtual Box Configuration Step by Step

Open VirtualBox and click the "NEW" option. A new pop-up box will appear on the screen.

Give a name to the new VirtualBox machine. Set "Machine Folder" to a custom location to save the VirtualBox machine.

You can define the memory for the Operating System. You can also increase or decrease the memory later.

You can see that your VDI machine is ready. Now it's time to change the default settings for configuring Oracle RAC. Click the new machine and go to the "Settings" option.

Make the below changes to the newly created VDI machine and click OK to save the changes.

- Disable audio
- Change CPU count if required.
- Define public and private Ethernet cards (both as internal network).
- Locate your "Linux Operating System" image setup.

You can verify the settings as below.

Now it's time to start the Linux Operating System installation step by step.

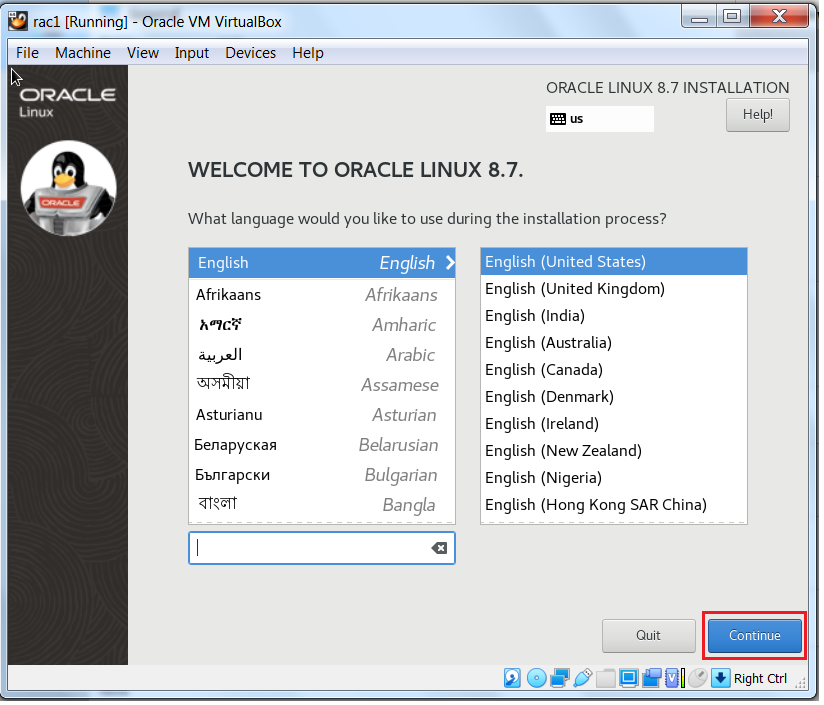

Step 3 : Linux Operating System Installation Step by Step

Click the VirtualBox machine "rac1" and click the "Start" option. Please note that during the entire Linux installation, you can use the "Right Ctrl" key on your keyboard to move the mouse cursor from VirtualBox back to your desktop.

Here, you can customize your storage options, i.e. you can manually create each Linux partition (/u01, /tmp, /opt, /var, etc.) with a customized size. I have used the "Automatic" option to create all partitions automatically to avoid manual intervention. (Defined storage space: 40 GB).

Here, select the "Server with GUI" option to manage the server with a graphical interface. Select additional required options from the right side box.

Add IP addresses for your defined network Ethernet cards.

Give a hostname to your virtual machine and save the network settings.

Turn off the security policy since this is a testing environment.

Set the password for the root user and click the "Begin Installation" option.

Your Linux Operating System is successfully installed now. Click the "Reboot System" option.

Accept the "License Information" and click the "Finish Configuration" option.

Enter your name as the username and set a password for it.

Now locate the "Devices" option and click the "Insert Guest Additions CD image" option. The below pop-up box will appear on your screen. Click "Run" to continue. It will ask you to enter the password for the user. Give the password to continue.

Once the VirtualBox Guest Additions installation is done, press "Enter" to close the window.

Again locate the "Devices" option and set "Shared Clipboard" and "Drag and Drop" to Bidirectional. This will help you to copy/paste any file/content from your desktop to the VDI machine and vice versa.

Step 4 : Operating System Configuration

Now it's time to make changes in the OS. Perform the below changes in the OS after installation.

- Update /etc/hosts file
- Stop and disable Firewall
- Disable SELINUX
- Create directory structure
- User and group creation with permissions
- Add limits and kernel parameters in configuration files
- Install required RPM packages.

Make the above changes on the server and then clone the machine; otherwise you have to make the changes on the cloned server as well.

1) Updating /etc/hosts (Applies to both Nodes).

    #vi /etc/hosts

    #Public IP
    10.20.30.101 rac1.localdomain rac1
    10.20.30.102 rac2.localdomain rac2

    #Private IP
    10.1.2.201 rac1-priv.localdomain rac1-priv
    10.1.2.202 rac2-priv.localdomain rac2-priv

    #VIP IP
    10.20.30.103 rac1-vip.localdomain rac1-vip
    10.20.30.104 rac2-vip.localdomain rac2-vip

    #scan IP
    10.20.30.105 rac-scan.localdomain rac-scan
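The addresses above follow the earlier tip that the public, VIP, and SCAN IPs share one subnet while the private IPs use a different one. A minimal sketch of that check is shown here; the helper names are hypothetical, and a /24 netmask is an assumption, since the netmask is not stated in this article.

```shell
# First three octets of a dotted IPv4 address (a /24 prefix; the /24 mask is an assumption).
prefix24() {
    echo "$1" | cut -d. -f1-3
}

# Return 0 when both addresses share the same /24 prefix.
same_subnet24() {
    [ "$(prefix24 "$1")" = "$(prefix24 "$2")" ]
}

# Public IP of rac1 against its VIP, the SCAN IP, and its private IP.
same_subnet24 10.20.30.101 10.20.30.103 && echo "VIP shares the public subnet"
same_subnet24 10.20.30.101 10.20.30.105 && echo "SCAN shares the public subnet"
same_subnet24 10.20.30.101 10.1.2.201 || echo "private network is separate"
```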

2) Commands to view/stop/disable firewall (Applies to both Nodes).

    #systemctl status firewalld
    #systemctl stop firewalld
    #systemctl disable firewalld
    #systemctl status firewalld

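3) Disable SELINUX configuration (Applies to both Nodes).

    #cat /etc/selinux/config

Check that the value is "SELINUX=disabled". If it is not, edit the file to set it, and then verify again. The change in this file takes effect after a reboot.

    #cat /etc/selinux/config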
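Instead of reading the whole file by eye, the configured mode can be extracted with `sed`. This is a small sketch; the function name `selinux_config_mode` is hypothetical, and it reads only the configuration file, not the currently running mode.

```shell
# Print the SELINUX= value from a config file (default: /etc/selinux/config).
# The SELINUXTYPE= line and commented lines are not matched by this pattern.
selinux_config_mode() {
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}

# On the server (commented out so the sketch can be sourced anywhere):
# selinux_config_mode      # expected to print "disabled" after the change
# getenforce               # shows the mode that is active right now
```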

4) User and group creation with permissions (Applies to both Nodes).

#Create groups with customized group ID.

    [root@rac1 ~]# groupadd -g 2000 oinstall
    [root@rac1 ~]# groupadd -g 2100 asmadmin
    [root@rac1 ~]# groupadd -g 2200 dba
    [root@rac1 ~]# groupadd -g 2300 oper
    [root@rac1 ~]# groupadd -g 2400 asmdba
    [root@rac1 ~]# groupadd -g 2500 asmoper

#Create users for both GRID and RDBMS owners and change password.

    [root@rac1 /]# useradd grid
    [root@rac1 /]# useradd oracle
    [root@rac1 /]# passwd grid
    [root@rac1 /]# passwd oracle

#Assigning primary and secondary groups to users.

    [root@rac1 /]# usermod -g oinstall -G asmadmin,dba,oper,asmdba,asmoper grid
    [root@rac1 /]# usermod -g oinstall -G asmadmin,dba,oper,asmdba,asmoper oracle
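After the usermod commands above, it can be useful to confirm that each user really belongs to every group. The sketch below compares two space-separated lists; the function name `missing_groups` is hypothetical, and the first argument is assumed to come from `id -Gn <user>`.

```shell
# Print every group from the space-separated list $2 that is absent from
# the space-separated list $1 (for example the output of `id -Gn grid`).
missing_groups() {
    mg_have=" $1 "
    mg_missing=""
    for mg_group in $2; do
        case "$mg_have" in
            *" $mg_group "*) ;;                       # group is present
            *) mg_missing="$mg_missing $mg_group" ;;  # group is absent
        esac
    done
    echo "${mg_missing# }"
}

# On the server (commented out because the users exist only on the RAC nodes):
# missing_groups "$(id -Gn grid)" "oinstall asmadmin dba oper asmdba asmoper"
```

An empty result means that no group is missing for that user.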

5) Create directory structure (Applies to both Nodes).

grid:
ORACLE_BASE (GRID_BASE) : /u01/app/grid
ORACLE_HOME (GRID_HOME) : /u01/app/19.0.0/grid

oracle:
ORACLE_BASE : /u01/app/oracle
ORACLE_HOME : /u01/app/oracle/product/19.0.0/dbhome_1

Note: The ORACLE_HOME for the oracle user does not need to be created manually, since it will be created automatically under ORACLE_BASE; for the RDBMS user (oracle), ORACLE_HOME is always located under ORACLE_BASE. For the grid user, the case is different: the ORACLE_HOME (GRID_HOME) is always located outside the ORACLE_BASE (GRID_BASE). This is because some cluster files under ORACLE_HOME (GRID_HOME) are owned by the root user, while the files located under ORACLE_BASE (GRID_BASE) are owned by the grid user. Hence ORACLE_BASE (GRID_BASE) and ORACLE_HOME (GRID_HOME) must be located in separate directories.

#Create GRID_BASE, GRID_HOME, ORACLE_BASE, ORACLE_HOME directories.

    [root@rac1 /]# mkdir -p /u01/app/grid
    [root@rac1 /]# mkdir -p /u01/app/19.0.0/grid
    [root@rac1 /]# mkdir -p /u01/app/oraInventory
    [root@rac1 /]# mkdir -p /u01/app/oracle

#Grant user and group permissions on above directories.

    [root@rac1 /]# chown -R grid:oinstall /u01/app/grid
    [root@rac1 /]# chown -R grid:oinstall /u01/app/19.0.0/grid
    [root@rac1 /]# chown -R grid:oinstall /u01/app/oraInventory
    [root@rac1 /]# chown -R oracle:oinstall /u01/app/oracle

#Grant read, write, execute permissions to the above directories.

    [root@rac1 /]# chmod -R 755 /u01/app/grid
    [root@rac1 /]# chmod -R 755 /u01/app/19.0.0/grid
    [root@rac1 /]# chmod -R 755 /u01/app/oraInventory
    [root@rac1 /]# chmod -R 755 /u01/app/oracle

#Verify the directory permissions.

    [root@rac1 /]# ls -ld /u01/app/grid
    [root@rac1 /]# ls -ld /u01/app/19.0.0/grid
    [root@rac1 /]# ls -ld /u01/app/oraInventory
    [root@rac1 /]# ls -ld /u01/app/oracle
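The placement rule from the note in this step (GRID_HOME outside GRID_BASE, the database ORACLE_HOME under its ORACLE_BASE) can be verified with a simple path comparison. This is only a sketch; the function name `path_is_under` is hypothetical, and it compares path strings without resolving symbolic links.

```shell
# Return 0 if path $1 is the directory $2 itself or lies underneath it.
path_is_under() {
    case "${1%/}/" in
        "${2%/}/"*) return 0 ;;
    esac
    return 1
}

# Paths used in this article.
path_is_under /u01/app/19.0.0/grid /u01/app/grid \
    || echo "GRID_HOME is outside GRID_BASE"
path_is_under /u01/app/oracle/product/19.0.0/dbhome_1 /u01/app/oracle \
    && echo "ORACLE_HOME is under ORACLE_BASE"
```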

6) Adding kernel and limits configuration parameters (Applies to both Nodes).

    [root@rac1 /]# cat /etc/sysctl.conf | grep -v "#"
    [root@rac1 /]# vi /etc/sysctl.conf
    fs.file-max = 6815744
    kernel.sem = 250 32000 100 128
    kernel.shmmni = 4096
    kernel.shmall = 1073741824
    kernel.shmmax = 4398046511104
    kernel.panic_on_oops = 1
    net.core.rmem_default = 262144
    net.core.rmem_max = 4194304
    net.core.wmem_default = 262144
    net.core.wmem_max = 1048576
    net.ipv4.conf.all.rp_filter = 2
    net.ipv4.conf.default.rp_filter = 2
    fs.aio-max-nr = 1048576
    net.ipv4.ip_local_port_range = 9000 65500

    [root@rac1 /]# sysctl -p

    [root@rac1 /]# cat /etc/security/limits.conf | grep -v "#"
    [root@rac1 /]# vi /etc/security/limits.conf
    oracle soft nofile 1024
    oracle hard nofile 65536
    oracle soft nproc 16384
    oracle hard nproc 16384
    oracle soft stack 10240
    oracle hard stack 32768
    oracle hard memlock 134217728
    oracle soft memlock 134217728
    oracle soft data unlimited
    oracle hard data unlimited
    grid soft nofile 1024
    grid hard nofile 65536
    grid soft nproc 16384
    grid hard nproc 16384
    grid soft stack 10240
    grid hard stack 32768
    grid hard memlock 134217728
    grid soft memlock 134217728
    grid soft data unlimited
    grid hard data unlimited
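The kernel.shmall value above is counted in memory pages, while kernel.shmmax is in bytes, so the two settings can be cross-checked with simple arithmetic. The sketch below assumes a 4096-byte page, which is the usual x86_64 page size; the function name `shmall_bytes` is hypothetical.

```shell
# Bytes of shared memory covered by a kernel.shmall value:
# $1 = kernel.shmall (in pages), $2 = page size in bytes.
shmall_bytes() {
    echo $(( $1 * $2 ))
}

# Values from this article with an assumed 4096-byte page
# (`getconf PAGE_SIZE` reports the real page size of a server).
shmall_bytes 1073741824 4096   # prints 4398046511104
```

The result, 4398046511104 bytes (4 TiB), is the same as the kernel.shmmax value used above, so the two parameters are consistent with each other.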

7) Install required RPM packages.

- ksh
- sysstat
- cvu

Download required RPM packages for Oracle Linux 8:

1) ksh - This will be part of your Yum Repository.
2) sysstat - This will be part of your Yum Repository.
3) libnsl-2.28-211.0.1.el8.x86_64.rpm - This will be part of your Yum Repository. (To avoid the installation error "error while loading shared libraries: libnsl.so.1: cannot open shared object file: No such file or directory")
4) cvu - This will be part of Grid Infrastructure Software.

Note: Please note that I am not using oracleasm here to configure logical disk partitions; instead, I am using udev rules to configure ASM devices, and hence I am not going to install the oracleasmlib and oracleasm-support packages.

Node1:

    [root@rac1 Packages]# ls -ltr *ksh*
    -rwxrwx--- 1 root vboxsf 951068 Oct 4 2022 ksh-20120801-257.0.1.el8.x86_64.rpm
    [root@rac1 Packages]# rpm -ivh ksh-20120801-257.0.1.el8.x86_64.rpm
    warning: ksh-20120801-257.0.1.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ad986da3: NOKEY
    Verifying...                          ################################# [100%]
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:ksh-20120801-257.0.1.el8         ################################# [100%]

    [root@rac1 Packages]# pwd
    /media/sf_Setups/V1032420-01/AppStream/Packages
    [root@rac1 Packages]# ls -ltr *sysstat*
    -rwxrwx--- 1 root vboxsf 435944 Oct 18 2022 sysstat-11.7.3-7.0.1.el8.x86_64.rpm
    [root@rac1 Packages]# rpm -ivh sysstat-11.7.3-7.0.1.el8.x86_64.rpm
    warning: sysstat-11.7.3-7.0.1.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ad986da3: NOKEY
    Verifying...                          ################################# [100%]
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:sysstat-11.7.3-7.0.1.el8         ################################# [100%]

    [root@rac1 Packages]# pwd
    /media/sf_Setups/V1032420-01/BaseOS/Packages
    [root@rac1 Packages]# rpm -ivh libnsl-2.28-211.0.1.el8.x86_64.rpm
    warning: libnsl-2.28-211.0.1.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ad986da3: NOKEY
    Verifying...                          ################################# [100%]
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:libnsl-2.28-211.0.1.el8          ################################# [100%]

    [root@rac1 Packages]# scp *ksh* *sysstat* root@rac2:/tmp
    root@rac2's password:
    ksh-20120801-257.0.1.el8.x86_64.rpm      100%  929KB  24.5MB/s   00:00
    sysstat-11.7.3-7.0.1.el8.x86_64.rpm      100%  426KB 127.3MB/s   00:00
    [root@rac1 Packages]# scp libnsl-2.28-211.0.1.el8.x86_64.rpm root@rac2:/tmp
    root@rac2's password:
    libnsl-2.28-211.0.1.el8.x86_64.rpm       100%  105KB  41.6MB/s   00:00

Node2:

    [root@rac1 Packages]# ssh rac2
    root@rac2's password:
    Activate the web console with: systemctl enable --now cockpit.socket

    Last login: Tue Jul 2 08:19:15 2024 from 10.20.30.101
    [root@rac2 ~]# cd /tmp
    [root@rac2 tmp]# ls -ltr *ksh* *sysstat*
    -rwxr-x--- 1 root root 951068 Jul 2 08:19 ksh-20120801-257.0.1.el8.x86_64.rpm
    -rwxr-x--- 1 root root 435944 Jul 2 08:19 sysstat-11.7.3-7.0.1.el8.x86_64.rpm
    [root@rac2 tmp]# rpm -ivh ksh-20120801-257.0.1.el8.x86_64.rpm
    warning: ksh-20120801-257.0.1.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ad986da3: NOKEY
    Verifying...                          ################################# [100%]
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:ksh-20120801-257.0.1.el8         ################################# [100%]
    [root@rac2 tmp]# rpm -ivh sysstat-11.7.3-7.0.1.el8.x86_64.rpm
    warning: sysstat-11.7.3-7.0.1.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ad986da3: NOKEY
    Verifying...                          ################################# [100%]
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:sysstat-11.7.3-7.0.1.el8         ################################# [100%]
    [root@rac2 tmp]# ls -ltr libnsl-2.28-211.0.1.el8.x86_64.rpm
    -rwxr-x--- 1 root root 107496 Jul 2 08:22 libnsl-2.28-211.0.1.el8.x86_64.rpm
    [root@rac2 tmp]# rpm -ivh libnsl-2.28-211.0.1.el8.x86_64.rpm
    warning: libnsl-2.28-211.0.1.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID ad986da3: NOKEY
    Verifying...                          ################################# [100%]
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:libnsl-2.28-211.0.1.el8          ################################# [100%]
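Once the packages are installed on both nodes, a quick loop can confirm that none is missing. In this sketch the function name `report_missing` is hypothetical, and the checker command is passed in as the first argument so that the same function works with `rpm -q` on the server.

```shell
# Print the packages for which the checker command fails.
# $1 = checker command (for example "rpm -q"), remaining args = package names.
report_missing() {
    rm_checker=$1
    shift
    rm_missing=""
    for rm_pkg in "$@"; do
        # $rm_checker is intentionally unquoted so "rpm -q" splits into two words.
        if ! $rm_checker "$rm_pkg" >/dev/null 2>&1; then
            rm_missing="$rm_missing $rm_pkg"
        fi
    done
    echo "${rm_missing# }"
}

# On each RAC node (commented out because rpm may not exist elsewhere):
# report_missing "rpm -q" ksh sysstat libnsl
```

An empty line as output means that every listed package is installed.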

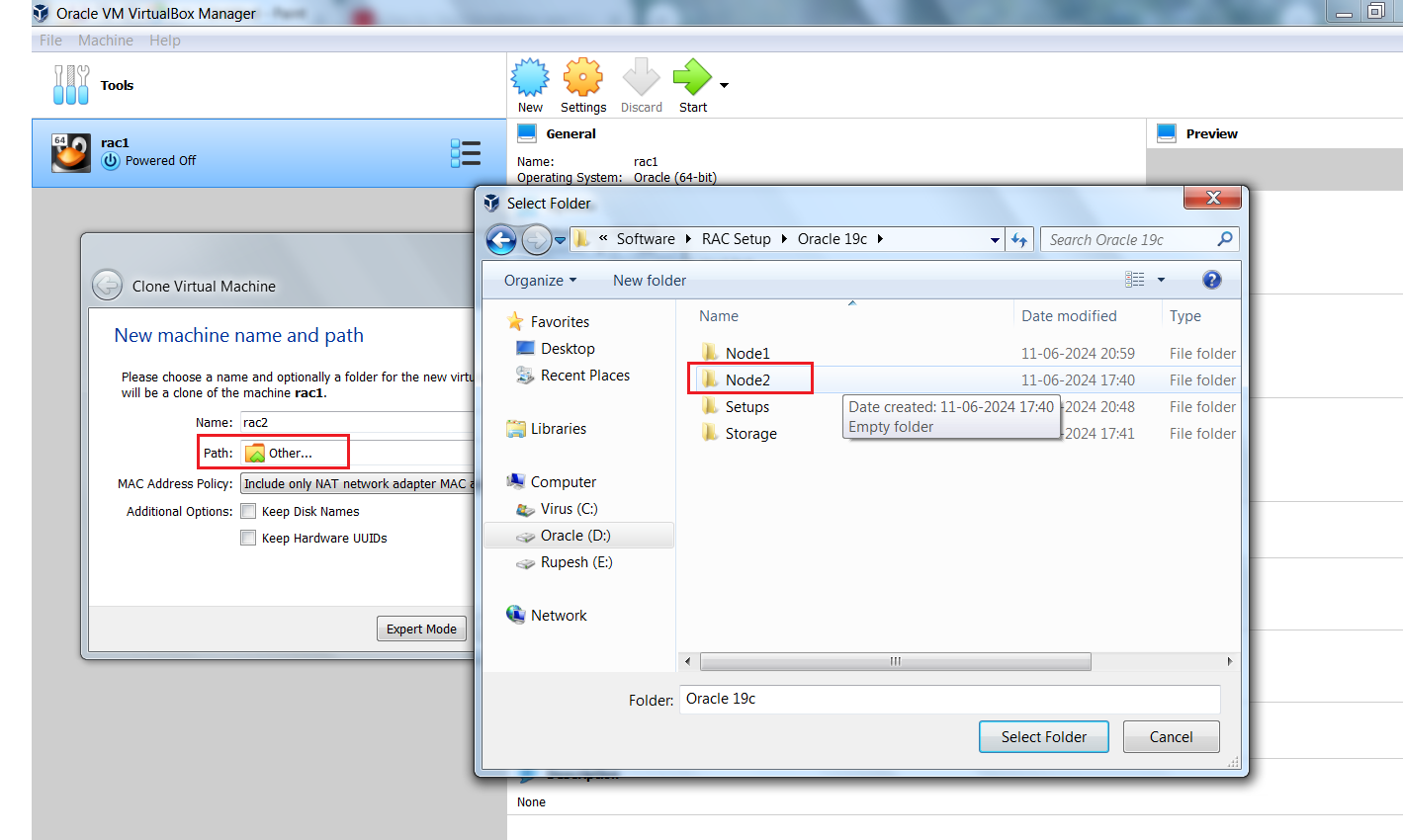

Step 5 : Clone VDI machine to create 2nd RAC Node.

Now it's time to clone the virtual machine. Right-click the existing virtual machine and click the "Clone" option.

In the "MAC Address Policy" field, select the option "Generate new MAC addresses for all network adapters". This removes the need to add MAC addresses manually after cloning. In previous VirtualBox releases, we had to manually change the MAC addresses of the cloned server, but now new MAC addresses can be generated for the cloned machine during the clone itself.

Here, you have two options to clone the machine.

1) Full Clone

2) Linked Clone

If you choose the "Full Clone" option, an exact copy of the original virtual machine, including all of its virtual hard disks, will be created. This takes some time to complete. With this type, you can move the cloned machine to a different computer without moving the original virtual machine.

If you choose the "Linked Clone" option, the cloned machine will be created within seconds, but its virtual hard disk files will be tied to the virtual hard disk files of the original virtual machine.

You cannot move such a linked clone to a different computer without moving the original machine as well. For example, if you want to share the cloned machine with your friends, you will not be able to do so without also sharing the original machine.

You can see that your new clone, i.e. rac2, is ready. Go to the settings of the rac2 machine and verify the new MAC addresses assigned to both the public and private Ethernet cards. You can also verify the RAM, CPU, and storage settings.

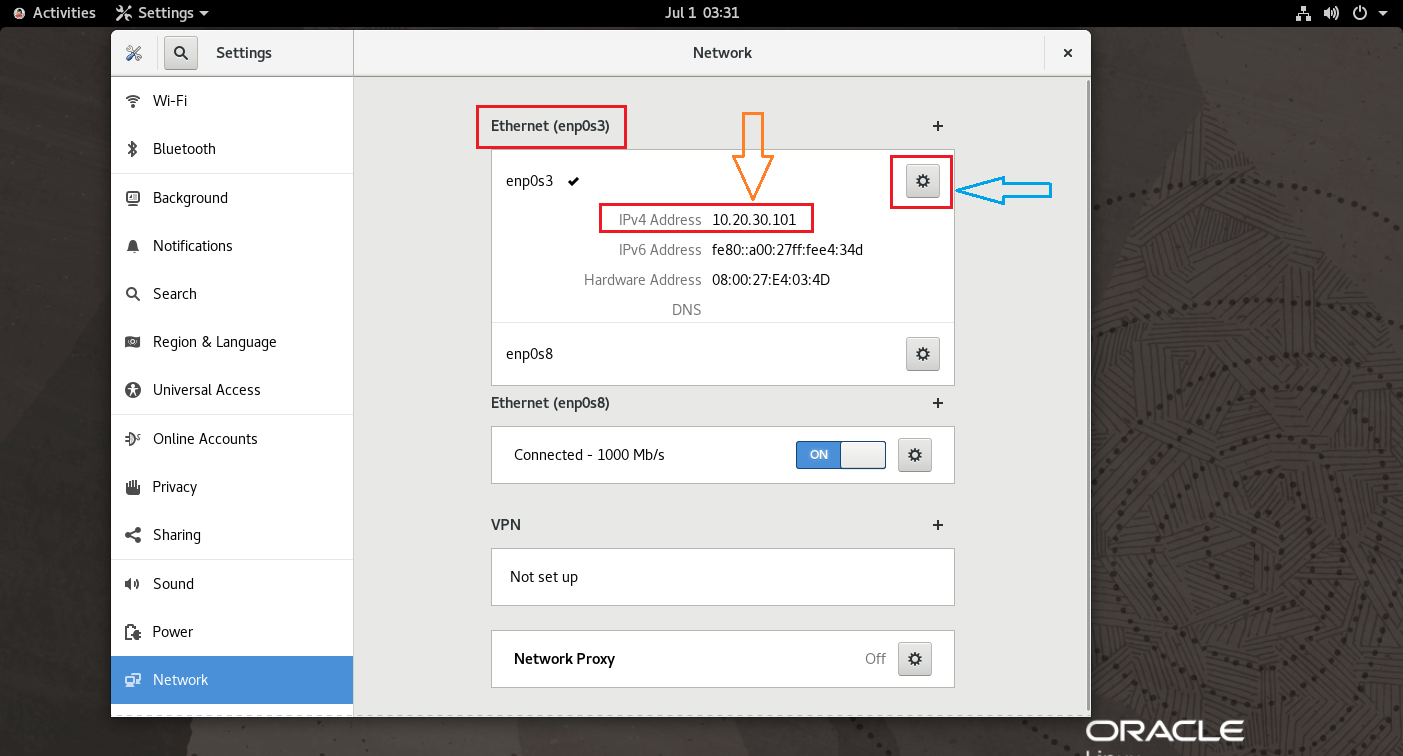

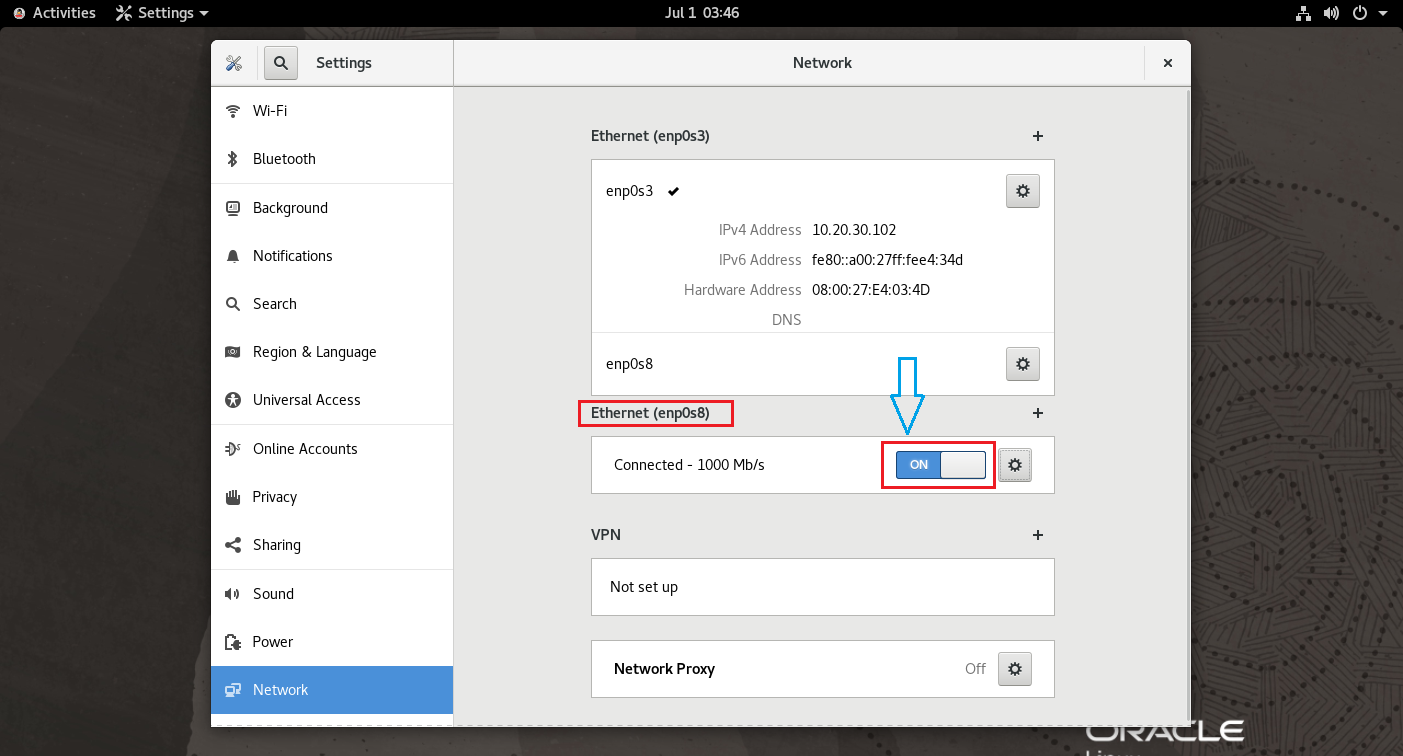

Start the rac2 machine and make below changes.

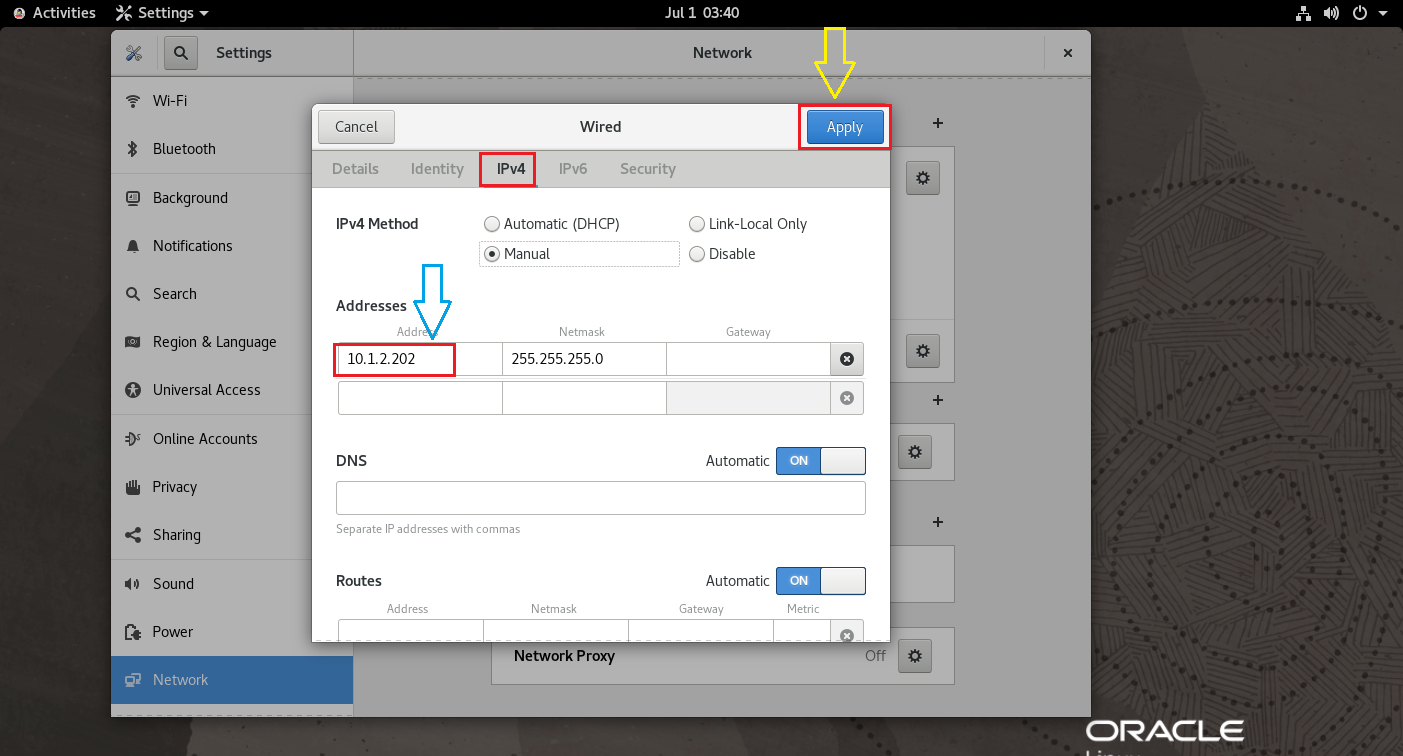

- Change the IP addresses of the public and private Ethernet cards. Since this is a cloned virtual machine, it still has the IP addresses of the original machine. You have to change them manually.

- Change hostname from rac1 to rac2.

- Restart the network settings (Off/On).

- Reboot the rac2 machine.

Here, you can see that although you changed the IP address, it is still showing the old IP address. This is because you have not yet restarted the network.

Now you can see that the new IPs are assigned to both the public and private Ethernet cards after restarting the network.

Now open the terminal and change the hostname. Since this is a cloned machine, you have to change the hostname manually and restart the machine.

Now stop both machines, start them again, and check that ping works from both nodes for the public IPs.

Step 6 : Configure shared storage for RAC database.

Click the "Create" option to create 3 OCR disks for the installation, using normal redundancy. Please note that normal redundancy by itself requires a minimum of 2 disks, but because the number of voting files must be odd, Oracle does not allow an even number of disks here, i.e. you can configure 1, 3, or 5 disks.

Minimum number of disks in different redundancy levels:

- External Redundancy - 1 OCR disk

- Normal Redundancy - 3 OCR disks

- High Redundancy - 5 OCR disks

Here, I have used Normal Redundancy i.e. 3 disks.

- ocr1 - 10 GB

- ocr2 - 10 GB

- ocr3 - 10 GB

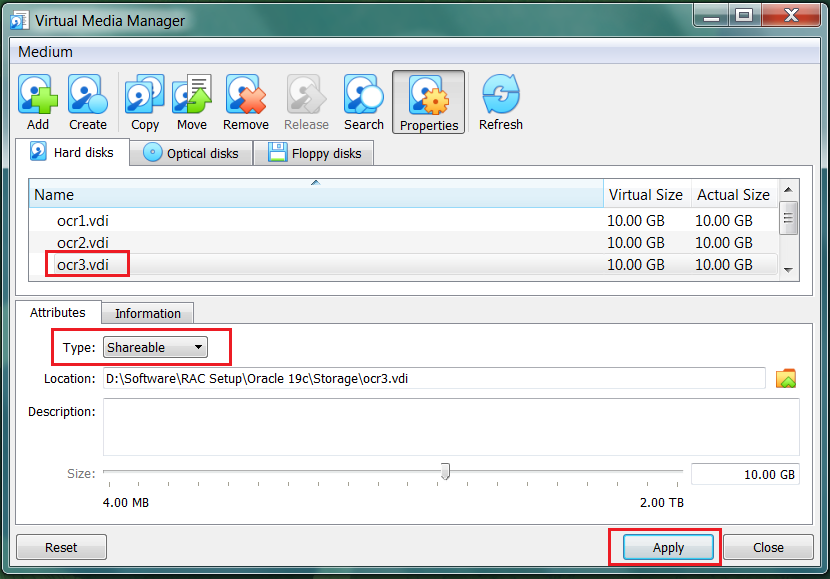

Click "File" and then "Virtual Media Manager" to create virtual disks.

Here, choose whether the new virtual hard disk should grow as it is used (dynamically allocated) or be created at its maximum size (fixed size). A fixed size disk takes some time to create, but it is faster to use.

You can customise your storage location.

Your 1st disk ocr1 is ready to use. Follow the same steps for the remaining two disks as well.

All three disks are ready to use now.

Now it's time to mark these disks as Shareable. Select one disk, set its type to Shareable, and click "Apply" to save the changes. Follow the same steps for the other virtual disks as well.

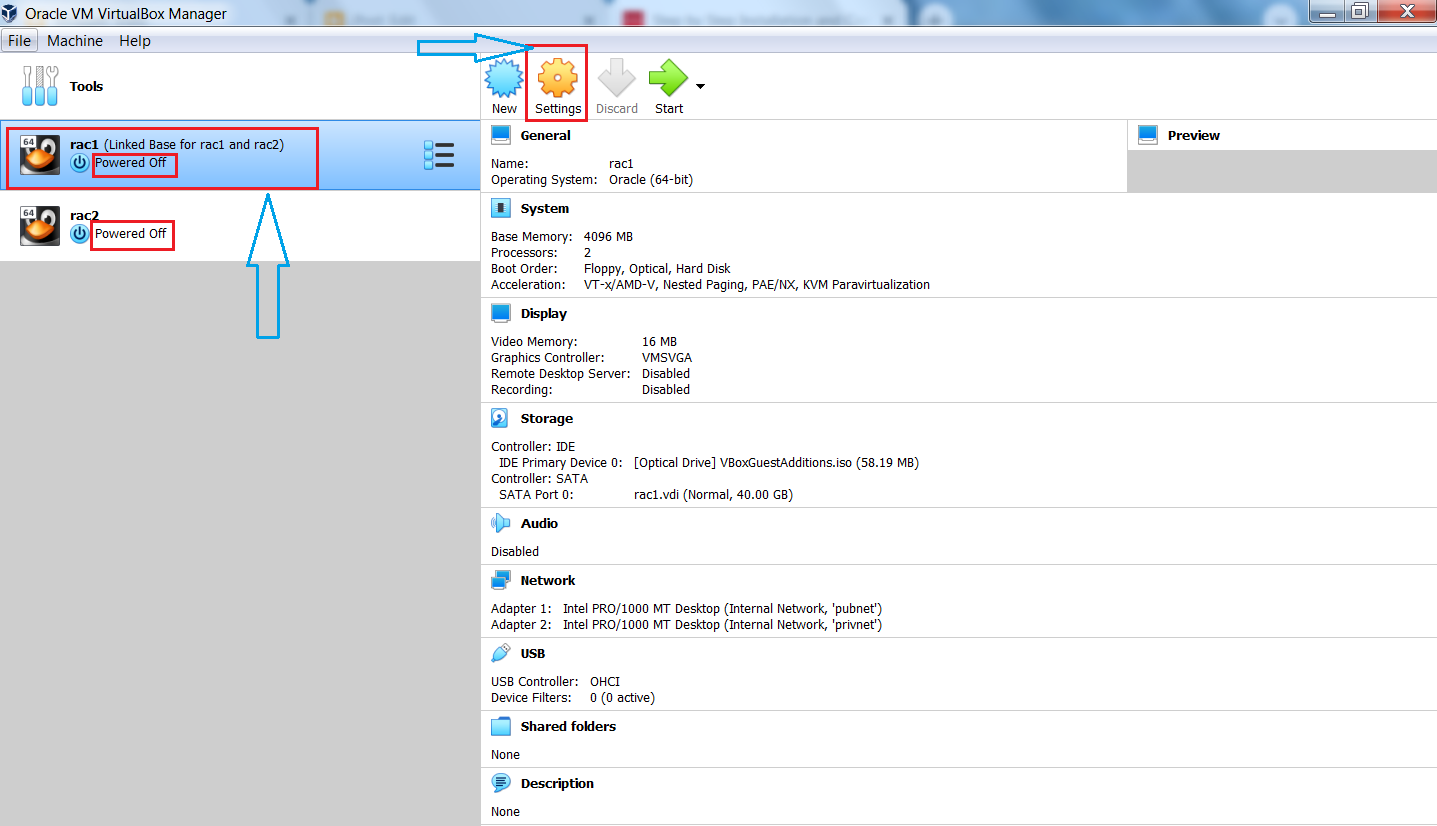

Once the disks are marked as shareable, you have to attach all of them to both Node1 and Node2 as shown in the below screenshots.

Select Node1 and attach all the OCR disks as shown in the below screenshots. Follow the same steps for Node2 as well.

You can see all OCR disks are attached to both the nodes.
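The same disk creation, sharing, and attachment steps can also be done with the VBoxManage command line tool instead of the GUI. The sketch below only prints the commands rather than running them. The function name `print_shared_disk_cmds`, the controller name "SATA", and the port numbers are assumptions that must match your own VM; the VirtualBox manual describes the shareable type as applying to fixed-size images, which is why the Fixed variant is used.

```shell
# Print (not run) the VBoxManage commands for one shared, fixed-size disk.
# $1 = disk file, $2 = size in MB, $3 = controller port, remaining args = VM names.
print_shared_disk_cmds() {
    sd_file=$1
    sd_size_mb=$2
    sd_port=$3
    shift 3
    echo "VBoxManage createmedium disk --filename $sd_file --size $sd_size_mb --format VDI --variant Fixed"
    echo "VBoxManage modifymedium disk $sd_file --type shareable"
    for sd_vm in "$@"; do
        # "SATA" is an assumed controller name; check it in the VM settings.
        echo "VBoxManage storageattach $sd_vm --storagectl SATA --port $sd_port --device 0 --type hdd --medium $sd_file --mtype shareable"
    done
}

# The first 10 GB OCR disk, attached to both nodes on port 1.
print_shared_disk_cmds ocr1.vdi 10240 1 rac1 rac2
```

Review the printed commands first, and run them on the host machine while both virtual machines are powered off.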

Now it's time to create logical partitions on the given storage devices.

Node1:

Execute below commands to partition the disks. Please execute fdisk commands on Node1 only or on any one node of the cluster. There is no need to execute these commands on all the nodes of the cluster, since these are shared disks and the partitions are visible to all the nodes of the cluster once you create them from any node.

    fdisk </dev/device_name>
    n - To create new partition
    p - To create Primary partition so that it can not be further extended.
    ENTER - Press ENTER button from your keyboard so that it will consider default value.
    ENTER - Press ENTER button from your keyboard so that it will consider default value.
    ENTER - Press ENTER button from your keyboard so that it will consider default value.
    w - Save the created partition

    [root@rac1 ~]# fdisk -l
    Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/sdd: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/sda: 40 GiB, 42949672960 bytes, 83886080 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x924f9c86

    Device     Boot   Start      End  Sectors Size Id Type
    /dev/sda1  *       2048  2099199  2097152   1G 83 Linux
    /dev/sda2       2099200 83886079 81786880  39G 8e Linux LVM

    Disk /dev/mapper/ol-root: 35 GiB, 37622906880 bytes, 73482240 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/mapper/ol-swap: 4 GiB, 4248829952 bytes, 8298496 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    [root@rac1 ~]# lsblk
    NAME        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda           8:0    0   40G  0 disk
    ├─sda1        8:1    0    1G  0 part /boot
    └─sda2        8:2    0   39G  0 part
      ├─ol-root 252:0    0   35G  0 lvm  /
      └─ol-swap 252:1    0    4G  0 lvm  [SWAP]
    sdb           8:16   0   10G  0 disk
    sdc           8:32   0   10G  0 disk
    sdd           8:48   0   10G  0 disk
    sr0          11:0    1 1024M  0 rom

    [root@rac1 ~]# fdisk -l /dev/sdb
    Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    [root@rac1 ~]# fdisk /dev/sdb

    Welcome to fdisk (util-linux 2.32.1).
    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.

    Device does not contain a recognized partition table.
    Created a new DOS disklabel with disk identifier 0x137a23e2.

    Command (m for help): p
    Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x137a23e2

    Command (m for help): n
    Partition type
       p   primary (0 primary, 0 extended, 4 free)
       e   extended (container for logical partitions)
    Select (default p): p
    Partition number (1-4, default 1):
    First sector (2048-20971519, default 2048):
    Last sector, +sectors or +size{K,M,G,T,P} (2048-20971519, default 20971519):

    Created a new partition 1 of type 'Linux' and of size 10 GiB.

    Command (m for help): w
    The partition table has been altered.
    Calling ioctl() to re-read partition table.
    Syncing disks.
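The answers typed in the interactive session above are the same for every disk, so they can be captured once and piped into fdisk. This is a sketch, not the method used in this article: the function name `fdisk_answers` is hypothetical, and the pipe into fdisk is left commented out because it writes a new partition table to whichever disk is named.

```shell
# Emit the answers typed in the interactive session:
# n, p, then three ENTERs (partition number, first sector, last sector), then w.
fdisk_answers() {
    printf 'n\np\n\n\n\nw\n'
}

# Destructive, so left commented out; run as root against the intended disk only:
# fdisk_answers | fdisk /dev/sdc
```

This assumes an empty disk, as in this setup, where fdisk creates the DOS disklabel on its own before reading the answers.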
    [root@rac1 ~]# fdisk -l /dev/sdb
    Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x137a23e2

    Device     Boot Start      End  Sectors Size Id Type
    /dev/sdb1        2048 20971519 20969472  10G 83 Linux

    [root@rac1 ~]# fdisk -l /dev/sdc
    Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    [root@rac1 ~]# fdisk /dev/sdc

    Welcome to fdisk (util-linux 2.32.1).
    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.

    Device does not contain a recognized partition table.
    Created a new DOS disklabel with disk identifier 0xbcded622.

    Command (m for help): p
    Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xbcded622

    Command (m for help): n
    Partition type
       p   primary (0 primary, 0 extended, 4 free)
       e   extended (container for logical partitions)
    Select (default p): p
    Partition number (1-4, default 1):
    First sector (2048-20971519, default 2048):
    Last sector, +sectors or +size{K,M,G,T,P} (2048-20971519, default 20971519):

    Created a new partition 1 of type 'Linux' and of size 10 GiB.

    Command (m for help): w
    The partition table has been altered.
    Calling ioctl() to re-read partition table.
    Syncing disks.
    [root@rac1 ~]# fdisk -l /dev/sdc
    Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xbcded622

    Device     Boot Start      End  Sectors Size Id Type
    /dev/sdc1        2048 20971519 20969472  10G 83 Linux

    [root@rac1 ~]# fdisk -l /dev/sdd
    Disk /dev/sdd: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    [root@rac1 ~]# fdisk /dev/sdd

    Welcome to fdisk (util-linux 2.32.1).
    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.

    Device does not contain a recognized partition table.
    Created a new DOS disklabel with disk identifier 0x646b0a4d.

    Command (m for help): p
    Disk /dev/sdd: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x646b0a4d

    Command (m for help): n
    Partition type
       p   primary (0 primary, 0 extended, 4 free)
       e   extended (container for logical partitions)
    Select (default p): p
    Partition number (1-4, default 1):
    First sector (2048-20971519, default 2048):
    Last sector, +sectors or +size{K,M,G,T,P} (2048-20971519, default 20971519):

    Created a new partition 1 of type 'Linux' and of size 10 GiB.

    Command (m for help): w
    The partition table has been altered.
    Calling ioctl() to re-read partition table.
    Syncing disks.
    [root@rac1 ~]# fdisk -l /dev/sdd
    Disk /dev/sdd: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x646b0a4d

    Device     Boot Start      End  Sectors Size Id Type
    /dev/sdd1        2048 20971519 20969472  10G 83 Linux

You can see the original device names are:

    /dev/sdb
    /dev/sdc
    /dev/sdd

and the logical partition names are:

    /dev/sdb1
    /dev/sdc1
    /dev/sdd1

    [root@rac1 ~]# lsblk
    NAME        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sda           8:0    0   40G  0 disk
    ├─sda1        8:1    0    1G  0 part /boot
    └─sda2        8:2    0   39G  0 part
      ├─ol-root 252:0    0   35G  0 lvm  /
      └─ol-swap 252:1    0    4G  0 lvm  [SWAP]
    sdb           8:16   0   10G  0 disk
    └─sdb1        8:17   0   10G  0 part
    sdc           8:32   0   10G  0 disk
    └─sdc1        8:33   0   10G  0 part
    sdd           8:48   0   10G  0 disk
    └─sdd1        8:49   0   10G  0 part
    sr0          11:0    1 1024M  0 rom

#Execute below commands to load updated block device partition tables.

    [root@rac1 ~]# partx -u /dev/sdb1
    [root@rac1 ~]# partx -u /dev/sdc1
    [root@rac1 ~]# partx -u /dev/sdd1

#Find SCSI ID

    [root@rac1 ~]# /usr/lib/udev/scsi_id -g -u -d /dev/sdb
    1ATA_VBOX_HARDDISK_VBe8e80ce7-20d1c609
    [root@rac1 ~]# /usr/lib/udev/scsi_id -g -u -d /dev/sdc
    1ATA_VBOX_HARDDISK_VB48d1861f-805b331f
    [root@rac1 ~]# /usr/lib/udev/scsi_id -g -u -d /dev/sdd
    1ATA_VBOX_HARDDISK_VBe7171eaa-a7dcddcd

#Create below file and add below entries.

    [root@rac1 ~]# ls -ltr /etc/udev/rules.d
    -rw-r--r--. 1 root root 628 May 30 2022 70-persistent-ipoib.rules
    -rw-r--r--. 1 root root 67 Oct 2 2022 69-vdo-start-by-dev.rules
    -rw-r--r--. 1 root root 148 Nov 9 2022 99-vmware-scsi-timeout.rules
    -rw-r--r--. 1 root root 134 Jul 8 17:47 60-vboxadd.rules
    [root@rac1 ~]# vi /etc/udev/rules.d/asm_devices.rules
    [root@rac1 ~]# cat /etc/udev/rules.d/asm_devices.rules
    KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBe8e80ce7-20d1c609", SYMLINK+="oracleasm/disks/OCR1", OWNER="grid", GROUP="oinstall", MODE="0660"
    KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VB48d1861f-805b331f", SYMLINK+="oracleasm/disks/OCR2", OWNER="grid", GROUP="oinstall", MODE="0660"
    KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBe7171eaa-a7dcddcd", SYMLINK+="oracleasm/disks/OCR3", OWNER="grid", GROUP="oinstall", MODE="0660"
    [root@rac1 ~]# ls -ltr /etc/udev/rules.d
    -rw-r--r--. 1 root root 628 May 30 2022 70-persistent-ipoib.rules
    -rw-r--r--. 1 root root 67 Oct 2 2022 69-vdo-start-by-dev.rules
    -rw-r--r--. 1 root root 148 Nov 9 2022 99-vmware-scsi-timeout.rules
    -rw-r--r--. 1 root root 134 Jul 8 17:47 60-vboxadd.rules
    -rw-r--r-- 1 root root 657 Jul 12 01:52 asm_devices.rules

#Below commands will reload the complete udev configuration and will trigger all the udev rules. On a critical production server, this can interrupt ongoing operations and can impact business applications. Please use the below commands during downtime window only.
[root@rac1 ~]# udevadm control --reload-rules [root@rac1 ~]# ls -ld /dev/sd*1 brw-rw---- 1 root disk 8, 1 Jul 8 17:47 /dev/sda1 brw-rw---- 1 root disk 8, 17 Jul 12 01:46 /dev/sdb1 brw-rw---- 1 root disk 8, 33 Jul 12 01:47 /dev/sdc1 brw-rw---- 1 root disk 8, 49 Jul 12 01:47 /dev/sdd1 [root@rac1 ~]# udevadm trigger [root@rac1 ~]# ls -ld /dev/sd*1 brw-rw---- 1 root disk 8, 1 Jul 12 01:55 /dev/sda1 brw-rw---- 1 grid oinstall 8, 17 Jul 12 01:55 /dev/sdb1 brw-rw---- 1 grid oinstall 8, 33 Jul 12 01:55 /dev/sdc1 brw-rw---- 1 grid oinstall 8, 49 Jul 12 01:55 /dev/sdd1 #Execute below commands to list the oracleasm disks. You can see that the symbolic links are owned by root:root, but the logical partition devices are owned by grid:oinstall. [root@rac1 ~]# ls -ltra /dev/oracleasm/disks/* lrwxrwxrwx 1 root root 10 Jul 12 01:55 /dev/oracleasm/disks/OCR3 -> ../../sdd1 lrwxrwxrwx 1 root root 10 Jul 12 01:55 /dev/oracleasm/disks/OCR2 -> ../../sdc1 lrwxrwxrwx 1 root root 10 Jul 12 01:55 /dev/oracleasm/disks/OCR1 -> ../../sdb1 [root@rac1 ~]# ls -ld /dev/sd*1 brw-rw---- 1 root disk 8, 1 Jul 12 01:55 /dev/sda1 brw-rw---- 1 grid oinstall 8, 17 Jul 12 01:55 /dev/sdb1 brw-rw---- 1 grid oinstall 8, 33 Jul 12 01:55 /dev/sdc1 brw-rw---- 1 grid oinstall 8, 49 Jul 12 01:55 /dev/sdd1 on Node2: #Execute below commands to load updated block device partition tables. [root@rac2 ~]# partx -u /dev/sdb [root@rac2 ~]# partx -u /dev/sdc [root@rac2 ~]# partx -u /dev/sdd [root@rac2 ~]# lsblk NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT sda 8:0 0 40G 0 disk ├─sda1 8:1 0 1G 0 part /boot └─sda2 8:2 0 39G 0 part ├─ol-root 252:0 0 35G 0 lvm / └─ol-swap 252:1 0 4G 0 lvm [SWAP] sdb 8:16 0 10G 0 disk └─sdb1 8:17 0 10G 0 part sdc 8:32 0 10G 0 disk └─sdc1 8:33 0 10G 0 part sdd 8:48 0 10G 0 disk └─sdd1 8:49 0 10G 0 part sr0 11:0 1 58.2M 0 rom /run/media/grid/VBox_GAs_6.1.22 #Create below file and add below entries. [root@rac2 ~]# ls -ltr /etc/udev/rules.d -rw-r--r--. 
1 root root 628 May 30 2022 70-persistent-ipoib.rules -rw-r--r--. 1 root root 67 Oct 2 2022 69-vdo-start-by-dev.rules -rw-r--r--. 1 root root 148 Nov 9 2022 99-vmware-scsi-timeout.rules -rw-r--r--. 1 root root 134 Jul 12 01:32 60-vboxadd.rules [root@rac2 ~]# vi /etc/udev/rules.d/asm_devices.rules [root@rac2 ~]# cat /etc/udev/rules.d/asm_devices.rules KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBe8e80ce7-20d1c609", SYMLINK+="oracleasm/disks/OCR1", OWNER="grid", GROUP="oinstall", MODE="0660" KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VB48d1861f-805b331f", SYMLINK+="oracleasm/disks/OCR2", OWNER="grid", GROUP="oinstall", MODE="0660" KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="1ATA_VBOX_HARDDISK_VBe7171eaa-a7dcddcd", SYMLINK+="oracleasm/disks/OCR3", OWNER="grid", GROUP="oinstall", MODE="0660" [root@rac2 ~]# ls -ltr /etc/udev/rules.d -rw-r--r--. 1 root root 628 May 30 2022 70-persistent-ipoib.rules -rw-r--r--. 1 root root 67 Oct 2 2022 69-vdo-start-by-dev.rules -rw-r--r--. 1 root root 148 Nov 9 2022 99-vmware-scsi-timeout.rules -rw-r--r--. 1 root root 134 Jul 12 01:32 60-vboxadd.rules -rw-r--r-- 1 root root 657 Jul 12 01:59 asm_devices.rules #Below commands will reload the complete udev configuration and will trigger all the udev rules. On a critical production server, this can interrupt ongoing operations and can impact business applications. Please use the below commands during downtime window only. 
[root@rac2 ~]# udevadm control --reload-rules [root@rac2 ~]# udevadm trigger [root@rac2 ~]# ls -ld /dev/sd*1 brw-rw---- 1 root disk 8, 1 Jul 12 02:02 /dev/sda1 brw-rw---- 1 grid oinstall 8, 17 Jul 12 02:02 /dev/sdb1 brw-rw---- 1 grid oinstall 8, 33 Jul 12 02:02 /dev/sdc1 brw-rw---- 1 grid oinstall 8, 49 Jul 12 02:02 /dev/sdd1 #Execute below commands to list the oracleasm disks. You can see that the symbolic links are owned by root:root, but the logical partition devices are owned by grid:oinstall. [root@rac2 ~]# ls -ltra /dev/oracleasm/disks/* lrwxrwxrwx 1 root root 10 Jul 12 02:02 /dev/oracleasm/disks/OCR3 -> ../../sdc1 lrwxrwxrwx 1 root root 10 Jul 12 02:02 /dev/oracleasm/disks/OCR1 -> ../../sdd1 lrwxrwxrwx 1 root root 10 Jul 12 02:02 /dev/oracleasm/disks/OCR2 -> ../../sdb1 [root@rac2 ~]# ls -ld /dev/sd*1 brw-rw---- 1 root disk 8, 1 Jul 12 02:02 /dev/sda1 brw-rw---- 1 grid oinstall 8, 17 Jul 12 02:02 /dev/sdb1 brw-rw---- 1 grid oinstall 8, 33 Jul 12 02:02 /dev/sdc1 brw-rw---- 1 grid oinstall 8, 49 Jul 12 02:02 /dev/sdd1 |
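The three rules in asm_devices.rules differ only in the SCSI ID and the symlink name, so they can be generated rather than typed by hand on each node. The following is a minimal sketch and not part of the original procedure: the helper name `asm_udev_rule` is hypothetical, while the IDs, disk names, owner, group, and mode are the ones used above. It only prints the rule lines; redirecting the output into /etc/udev/rules.d/asm_devices.rules (as root) is left to you.

```shell
#!/bin/sh
# Print one udev rule that maps a SCSI ID to an ASM disk symlink.
# asm_udev_rule is a hypothetical helper; owner, group and mode follow
# the values used in asm_devices.rules above.
asm_udev_rule() {
    scsi_id_value=$1   # output of /usr/lib/udev/scsi_id -g -u -d /dev/sdX
    disk_name=$2       # symlink name under /dev/oracleasm/disks
    printf 'KERNEL=="sd?1", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$parent", RESULT=="%s", SYMLINK+="oracleasm/disks/%s", OWNER="grid", GROUP="oinstall", MODE="0660"\n' \
        "$scsi_id_value" "$disk_name"
}

# Generate the same three rules shown above (printed to stdout only).
asm_udev_rule 1ATA_VBOX_HARDDISK_VBe8e80ce7-20d1c609 OCR1
asm_udev_rule 1ATA_VBOX_HARDDISK_VB48d1861f-805b331f OCR2
asm_udev_rule 1ATA_VBOX_HARDDISK_VBe7171eaa-a7dcddcd OCR3
```

Because the rules match on the SCSI ID rather than on the kernel device name, the same file works on both nodes even though rac2 enumerates the disks in a different order (there OCR1 points to sdd1).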

Let's configure SSH authentication (passwordless configuration, also called user equivalence) for the grid user, which is required for the Grid Infrastructure installation. 1) Delete the "/home/grid/.ssh" directory from both nodes and create it again. Node1: [grid@rac1 ~]$ id uid=1001(grid) gid=2000(oinstall) groups=2000(oinstall),2100(asmadmin),2200(dba),2300(oper),2400(asmdba),2500(asmoper) [grid@rac1 ~]$ rm -rf .ssh [grid@rac1 ~]$ mkdir .ssh [grid@rac1 ~]$ chmod 700 .ssh 2) Go to the "/home/grid/.ssh" directory and generate RSA and DSA keys on both nodes. [grid@rac1 ~]$ cd /home/grid/.ssh [grid@rac1 .ssh]$ ssh-keygen -t rsa Generating public/private rsa key pair. Enter file in which to save the key (/home/grid/.ssh/id_rsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/grid/.ssh/id_rsa. Your public key has been saved in /home/grid/.ssh/id_rsa.pub. The key fingerprint is: SHA256:HFTHfSOs2rnv7rN/A9YsYAYZoKllRuCj5uDyzpu2uc0 grid@rac1.localdomain The key's randomart image is: +---[RSA 3072]----+ | ... .oo+.o. | | . . o. o ..o...| | o * . . . ...| | . * . . = | |.o . S = o o | |= . o + o | |.o o o | |.o.= . . ..| | oX+E =*+.o| +----[SHA256]-----+ [grid@rac1 .ssh]$ ssh-keygen -t dsa Generating public/private dsa key pair. Enter file in which to save the key (/home/grid/.ssh/id_dsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/grid/.ssh/id_dsa. Your public key has been saved in /home/grid/.ssh/id_dsa.pub. The key fingerprint is: SHA256:xaz5aSTRBFcNCz3gKZlai3n5KNh7RkalMfgS4ehsCAY grid@rac1.localdomain The key's randomart image is: +---[DSA 1024]----+ |E .o..=+oo | |. oo oX.oo.. | |.. . .o*=B .. | |.. + .=+O | | . + +oS . | | . o .o* . | | . oo. = | | oo. 
| | .o | +----[SHA256]-----+ Node2: [grid@rac2 ~]$ id uid=1001(grid) gid=2000(oinstall) groups=2000(oinstall),2100(asmadmin),2200(dba),2300(oper),2400(asmdba),2500(asmoper) [grid@rac2 ~]$ rm -rf .ssh [grid@rac2 ~]$ mkdir .ssh [grid@rac2 ~]$ chmod 700 .ssh [grid@rac2 ~]$ cd /home/grid/.ssh [grid@rac2 .ssh]$ ssh-keygen -t rsa Generating public/private rsa key pair. Enter file in which to save the key (/home/grid/.ssh/id_rsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/grid/.ssh/id_rsa. Your public key has been saved in /home/grid/.ssh/id_rsa.pub. The key fingerprint is: SHA256:TMtJQPBG0+8O1eZ9TQ8lYXEHg/QQ9fieP7cfxJWdqW4 grid@rac2.localdomain The key's randomart image is: +---[RSA 3072]----+ | .o=. .++B+o| | o o. .+.=B| | o o. . o*+| | . = oo o +.o| | So o o =+| | . . o o.=| | o E +.| | . . .+| | .B| +----[SHA256]-----+ [grid@rac2 .ssh]$ ssh-keygen -t dsa Generating public/private dsa key pair. Enter file in which to save the key (/home/grid/.ssh/id_dsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/grid/.ssh/id_dsa. Your public key has been saved in /home/grid/.ssh/id_dsa.pub. The key fingerprint is: SHA256:+pzskRLyVtCy6PiffdZb5w8AiiZpLcwIIZ3woEh2xP8 grid@rac2.localdomain The key's randomart image is: +---[DSA 1024]----+ |==o+ | |*++. . | |+ . . o . . | | . + = = . . | | . X B S . | | + * E . . | | . . = o . o .| | . . B oo .. + | | ..o.Bo .. +| +----[SHA256]-----+ 3) On each node, concatenate the contents of all *.pub files into authorized_keys.<hostname>, then transfer that file from Node1 to Node2 and vice versa. 
Node1: [grid@rac1 .ssh]$ ls -ltr -rw-r--r-- 1 grid oinstall 575 Jul 12 07:52 id_rsa.pub -rw------- 1 grid oinstall 2610 Jul 12 07:52 id_rsa -rw-r--r-- 1 grid oinstall 611 Jul 12 07:52 id_dsa.pub -rw------- 1 grid oinstall 1393 Jul 12 07:52 id_dsa [grid@rac1 .ssh]$ cat *.pub >> authorized_keys.rac1 [grid@rac1 .ssh]$ ls -ltr -rw-r--r-- 1 grid oinstall 575 Jul 12 07:52 id_rsa.pub -rw------- 1 grid oinstall 2610 Jul 12 07:52 id_rsa -rw-r--r-- 1 grid oinstall 611 Jul 12 07:52 id_dsa.pub -rw------- 1 grid oinstall 1393 Jul 12 07:52 id_dsa -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:55 authorized_keys.rac1 [grid@rac1 .ssh]$ scp authorized_keys.rac1 grid@rac2:/home/grid/.ssh/ The authenticity of host 'rac2 (10.20.30.102)' can't be established. ECDSA key fingerprint is SHA256:3uNcrQ/0wh3IZIls5I2SqsOA2VHc4YrF7MQLqBR7jXQ. Are you sure you want to continue connecting (yes/no/[fingerprint])? yes Warning: Permanently added 'rac2,10.20.30.102' (ECDSA) to the list of known hosts. grid@rac2's password: authorized_keys.rac1 100% 1186 1.3MB/s 00:00 Node2: [grid@rac2 .ssh]$ ls -ltr -rw-r--r-- 1 grid oinstall 575 Jul 12 07:53 id_rsa.pub -rw------- 1 grid oinstall 2610 Jul 12 07:53 id_rsa -rw-r--r-- 1 grid oinstall 611 Jul 12 07:53 id_dsa.pub -rw------- 1 grid oinstall 1393 Jul 12 07:53 id_dsa [grid@rac2 .ssh]$ cat *.pub >> authorized_keys.rac2 [grid@rac2 .ssh]$ ls -ltr -rw-r--r-- 1 grid oinstall 575 Jul 12 07:53 id_rsa.pub -rw------- 1 grid oinstall 2610 Jul 12 07:53 id_rsa -rw-r--r-- 1 grid oinstall 611 Jul 12 07:53 id_dsa.pub -rw------- 1 grid oinstall 1393 Jul 12 07:53 id_dsa -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:55 authorized_keys.rac2 [grid@rac2 .ssh]$ scp authorized_keys.rac2 grid@rac1:/home/grid/.ssh/ The authenticity of host 'rac1 (10.20.30.101)' can't be established. ECDSA key fingerprint is SHA256:3uNcrQ/0wh3IZIls5I2SqsOA2VHc4YrF7MQLqBR7jXQ. Are you sure you want to continue connecting (yes/no/[fingerprint])? 
yes Warning: Permanently added 'rac1,10.20.30.101' (ECDSA) to the list of known hosts. grid@rac1's password: authorized_keys.rac2 100% 1186 1.1MB/s 00:00 4) List the files and check that the authorized_keys.* files from both nodes exist in the directory. Node1: [grid@rac1 .ssh]$ ls -ltr -rw-r--r-- 1 grid oinstall 575 Jul 12 07:52 id_rsa.pub -rw------- 1 grid oinstall 2610 Jul 12 07:52 id_rsa -rw-r--r-- 1 grid oinstall 611 Jul 12 07:52 id_dsa.pub -rw------- 1 grid oinstall 1393 Jul 12 07:52 id_dsa -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:55 authorized_keys.rac1 -rw-r--r-- 1 grid oinstall 179 Jul 12 07:57 known_hosts -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:57 authorized_keys.rac2 Node2: [grid@rac2 .ssh]$ ls -ltr -rw-r--r-- 1 grid oinstall 575 Jul 12 07:53 id_rsa.pub -rw------- 1 grid oinstall 2610 Jul 12 07:53 id_rsa -rw-r--r-- 1 grid oinstall 611 Jul 12 07:53 id_dsa.pub -rw------- 1 grid oinstall 1393 Jul 12 07:53 id_dsa -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:55 authorized_keys.rac2 -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:57 authorized_keys.rac1 -rw-r--r-- 1 grid oinstall 358 Jul 12 07:57 known_hosts 5) On each node, append the contents of all authorized_keys.rac* files to authorized_keys. 
Node1: [grid@rac1 .ssh]$ cat *.rac* >> authorized_keys [grid@rac1 .ssh]$ chmod 600 authorized_keys [grid@rac1 .ssh]$ ls -ltr -rw-r--r-- 1 grid oinstall 575 Jul 12 07:52 id_rsa.pub -rw------- 1 grid oinstall 2610 Jul 12 07:52 id_rsa -rw-r--r-- 1 grid oinstall 611 Jul 12 07:52 id_dsa.pub -rw------- 1 grid oinstall 1393 Jul 12 07:52 id_dsa -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:55 authorized_keys.rac1 -rw-r--r-- 1 grid oinstall 179 Jul 12 07:57 known_hosts -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:57 authorized_keys.rac2 -rw------- 1 grid oinstall 2372 Jul 12 07:58 authorized_keys Node2: [grid@rac2 .ssh]$ cat *.rac* >> authorized_keys [grid@rac2 .ssh]$ chmod 600 authorized_keys [grid@rac2 .ssh]$ ls -ltr -rw-r--r-- 1 grid oinstall 575 Jul 12 07:53 id_rsa.pub -rw------- 1 grid oinstall 2610 Jul 12 07:53 id_rsa -rw-r--r-- 1 grid oinstall 611 Jul 12 07:53 id_dsa.pub -rw------- 1 grid oinstall 1393 Jul 12 07:53 id_dsa -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:55 authorized_keys.rac2 -rw-r--r-- 1 grid oinstall 1186 Jul 12 07:57 authorized_keys.rac1 -rw-r--r-- 1 grid oinstall 358 Jul 12 07:57 known_hosts -rw------- 1 grid oinstall 2372 Jul 12 07:59 authorized_keys 6) Now test the SSH authentication with the commands below. Self-authentication is also required, i.e. all four combinations (Node1-Node1, Node1-Node2, Node2-Node2, Node2-Node1) must work without a password prompt; otherwise the installation cannot proceed. [grid@rac1 .ssh]$ ssh rac1 The authenticity of host 'rac1 (10.20.30.101)' can't be established. ECDSA key fingerprint is SHA256:3uNcrQ/0wh3IZIls5I2SqsOA2VHc4YrF7MQLqBR7jXQ. Are you sure you want to continue connecting (yes/no/[fingerprint])? yes Warning: Permanently added 'rac1,10.20.30.101' (ECDSA) to the list of known hosts. 
Activate the web console with: systemctl enable --now cockpit.socket Last login: Fri Jul 12 07:49:56 2024 [grid@rac1 ~]$ ssh rac2 Activate the web console with: systemctl enable --now cockpit.socket Last login: Fri Jul 12 07:51:08 2024 from 10.20.30.101 [grid@rac2 ~]$ ssh rac2 Activate the web console with: systemctl enable --now cockpit.socket Last login: Fri Jul 12 08:01:37 2024 from 10.20.30.101 [grid@rac2 ~]$ ssh rac1 Activate the web console with: systemctl enable --now cockpit.socket Last login: Fri Jul 12 08:01:33 2024 from 10.20.30.101 |
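The four login tests in step 6 can also be run non-interactively. The sketch below is an illustration under stated assumptions rather than part of the original procedure: the function name `check_ssh_equivalence` and the `SSH_BIN` override are hypothetical, and the node names are the ones used in this setup. It relies on the standard OpenSSH options `BatchMode=yes` (fail instead of prompting for a password) and `ConnectTimeout`.

```shell
#!/bin/sh
# Check passwordless SSH from the current node to every node given as argument.
# SSH_BIN can be overridden (an assumption added here, useful for dry runs);
# BatchMode=yes makes ssh fail instead of prompting for a password.
SSH_BIN=${SSH_BIN:-ssh}

check_ssh_equivalence() {
    failed=0
    for node in "$@"; do
        # Run the no-op command "true" on the remote node.
        if "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "$node" true; then
            echo "OK   $node"
        else
            echo "FAIL $node"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (run as grid on rac1, then again on rac2):
#   check_ssh_equivalence rac1 rac2
```

In batch mode ssh also refuses hosts whose key is not yet in known_hosts, so the first interactive login to each node (answering "yes" to the fingerprint question, as shown above) still has to be done once.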

GRID Installation and configuration 1) Copy the Grid Infrastructure software to the GRID_HOME location on the target server, because this is a gold image installation. Log in as the grid user and unzip the setup file; this extracts the complete home binaries into GRID_HOME. 2) Start the Grid installation with the patch. #Create a directory on the Linux server for the software. [root@rac1 ~]# cd /u01/setup/ [root@rac1 setup]# mkdir grid [root@rac1 setup]# chown -R grid:oinstall grid [root@rac1 setup]# chmod -R 755 grid [root@rac1 setup]# ls -ltr drwxr-xr-x 2 grid oinstall 6 Jul 16 10:12 grid [root@rac1 setup]# cd grid [root@rac1 grid]# pwd /u01/setup/grid #Go to the location where the software files are stored. [root@rac1 grid]# cd /media/sf_Setups/ [root@rac1 sf_Setups]# ll -rwxrwx--- 1 root vboxsf 3059705302 Jun 11 11:14 LINUX.X64_193000_db_home.zip -rwxrwx--- 1 root vboxsf 2889184573 Jun 11 10:59 LINUX.X64_193000_grid_home.zip -rwxrwx--- 1 root vboxsf 62860 Jul 12 08:55 'oracle 19c rac installation.txt' -rwxrwx--- 1 root vboxsf 27092 Jun 18 2022 oracleasmlib-2.0.17-1.el8.x86_64.rpm -rwxrwx--- 1 root vboxsf 99852 Jun 18 2022 oracleasm-support-2.1.12-1.el8.x86_64.rpm -rwxrwx--- 1 root vboxsf 6841259 Jul 12 09:24 'Oracle® Database Patch 36233126 - GI Release Update 19.23.0.0.pdf' -rwxrwx--- 1 root vboxsf 3411816300 Jul 12 09:20 p36233126_190000_Linux-x86-64.zip -rwxrwx--- 1 root vboxsf 28324541 Jul 12 09:16 p6880880_230000_Linux-x86-64.zip drwxrwx--- 1 root vboxsf 20480 Jul 1 05:45 Screens drwxrwx--- 1 root vboxsf 4096 Jul 2 08:00 V1032420-01 -rwxrwx--- 1 root vboxsf 12062818304 May 1 2023 V1032420-01.iso #Copy the software files to the Linux server and grant read, write, and execute permissions on them. 
[root@rac1 sf_Setups]# cp LINUX.X64_193000_grid_home.zip /u01/app/19.0.0/grid/ [root@rac1 sf_Setups]# cp p36233126_190000_Linux-x86-64.zip /u01/setup/grid/ [root@rac1 sf_Setups]# cp p6880880_230000_Linux-x86-64.zip /u01/app/19.0.0/grid/ [root@rac1 sf_Setups]# chmod 777 /u01/app/19.0.0/grid/LINUX.X64_193000_grid_home.zip [root@rac1 sf_Setups]# chmod 777 /u01/app/19.0.0/grid/p6880880_230000_Linux-x86-64.zip [root@rac1 sf_Setups]# chmod 777 /u01/setup/grid/p36233126_190000_Linux-x86-64.zip #Now login by grid user and extract the GRID Infrastructure software, GI patch, and OPatch. [grid@rac1 ~]$ id uid=1001(grid) gid=2000(oinstall) groups=2000(oinstall),2100(asmadmin),2200(dba),2300(oper),2400(asmdba),2500(asmoper) [grid@rac1 ~]$ cd /u01/app/19.0.0/grid/ [grid@rac1 grid]$ ll -rwxrwxrwx 1 root root 2889184573 Jul 16 10:09 LINUX.X64_193000_grid_home.zip -rwxrwxrwx 1 root root 28324541 Jul 16 10:16 p6880880_230000_Linux-x86-64.zip [grid@rac1 grid]$ unzip LINUX.X64_193000_grid_home.zip Archive: LINUX.X64_193000_grid_home.zip creating: instantclient/ inflating: instantclient/libsqlplusic.so creating: opmn/ creating: opmn/logs/ creating: opmn/conf/ inflating: opmn/conf/ons.config creating: opmn/admin/ ... jdk/jre/bin/ControlPanel -> jcontrol javavm/admin/libjtcjt.so -> ../../javavm/jdk/jdk8/admin/libjtcjt.so javavm/admin/classes.bin -> ../../javavm/jdk/jdk8/admin/classes.bin javavm/admin/lfclasses.bin -> ../../javavm/jdk/jdk8/admin/lfclasses.bin javavm/lib/security/cacerts -> ../../../javavm/jdk/jdk8/lib/security/cacerts javavm/lib/sunjce_provider.jar -> ../../javavm/jdk/jdk8/lib/sunjce_provider.jar javavm/lib/security/README.txt -> ../../../javavm/jdk/jdk8/lib/security/README.txt javavm/lib/security/java.security -> ../../../javavm/jdk/jdk8/lib/security/java.security jdk/jre/lib/amd64/server/libjsig.so -> ../libjsig.so [grid@rac1 grid]$ #Don't forget to move the existing OPatch directory before extracting new OPatch. 
[grid@rac1 grid]$ ls -ld OPatch drwxr-x--- 14 grid oinstall 4096 Apr 12 2019 OPatch [grid@rac1 grid]$ mv OPatch OPatch_bkp [grid@rac1 grid]$ ll p6880880_230000_Linux-x86-64.zip -rwxrwxrwx 1 root root 28324541 Jul 16 10:16 p6880880_230000_Linux-x86-64.zip [grid@rac1 grid]$ unzip p6880880_230000_Linux-x86-64.zip Archive: p6880880_230000_Linux-x86-64.zip creating: OPatch/ inflating: OPatch/opatchauto creating: OPatch/ocm/ creating: OPatch/ocm/doc/ creating: OPatch/ocm/bin/ creating: OPatch/ocm/lib/ .... inflating: OPatch/modules/features/com.oracle.orapki.jar inflating: OPatch/modules/features/com.oracle.glcm.patch.opatch-common-api-classpath.jar inflating: OPatch/modules/com.sun.org.apache.xml.internal.resolver.jar inflating: OPatch/modules/com.oracle.glcm.patch.opatchauto-wallet_12.2.1.42.0.jar inflating: OPatch/modules/com.sun.xml.bind.jaxb-jxc.jar inflating: OPatch/modules/javax.activation.javax.activation.jar [grid@rac1 grid]$ [grid@rac1 grid]$ cd /u01/setup/grid/ [grid@rac1 grid]$ ll -rwxrwxrwx 1 root root 3411816300 Jul 16 10:15 p36233126_190000_Linux-x86-64.zip [grid@rac1 grid]$ unzip p36233126_190000_Linux-x86-64.zip Archive: p36233126_190000_Linux-x86-64.zip creating: 36233126/ creating: 36233126/36240578/ creating: 36233126/36240578/files/ creating: 36233126/36240578/files/inventory/ creating: 36233126/36240578/files/inventory/Templates/ creating: 36233126/36240578/files/inventory/Templates/crs/ ... inflating: 36233126/automation/messages.properties inflating: 36233126/README.txt inflating: 36233126/README.html inflating: 36233126/bundle.xml inflating: PatchSearch.xml [grid@rac1 grid]$ #Install cvuqdisk RPM on both the nodes. This RPM is part of your GI software located under below directory. [root@rac1 ~]# cd /u01/app/19.0.0/grid/cv/rpm/ [root@rac1 rpm]# ll -rw-r--r-- 1 grid oinstall 11412 Mar 13 2019 cvuqdisk-1.0.10-1.rpm [root@rac1 rpm]# rpm -ivh cvuqdisk-1.0.10-1.rpm Verifying... ################################# [100%] Preparing... 
################################# [100%] Using default group oinstall to install package Updating / installing... 1:cvuqdisk-1.0.10-1 ################################# [100%] [root@rac1 rpm]# scp cvuqdisk-1.0.10-1.rpm root@rac2:/tmp root@rac2's password: cvuqdisk-1.0.10-1.rpm 100% 11KB 7.8MB/s 00:00 [root@rac1 rpm]# ssh rac2 root@rac2's password: Activate the web console with: systemctl enable --now cockpit.socket Last login: Fri Jul 12 01:57:34 2024 [root@rac2 ~]# cd /tmp [root@rac2 tmp]# ll cvuqdisk-1.0.10-1.rpm -rw-r--r-- 1 root root 11412 Jul 16 11:06 cvuqdisk-1.0.10-1.rpm [root@rac2 tmp]# rpm -ivh cvuqdisk-1.0.10-1.rpm Verifying... ################################# [100%] Preparing... ################################# [100%] Using default group oinstall to install package Updating / installing... 1:cvuqdisk-1.0.10-1 ################################# [100%] |

All prerequisites are now configured, and we are ready to begin the installation. Log in as the grid user and execute the commands below to start the Grid Infrastructure installation. It is strongly recommended to start the Grid Infrastructure or RDBMS software installation with the -applyRU option, which applies the patch to the home first and then starts the GUI console. Always apply the latest patch.

[grid@rac1 ~]$ cd /u01/app/19.0.0/grid/

[grid@rac1 grid]$ ./gridSetup.sh -applyRU /u01/setup/grid/36233126

Once you start the installation with -applyRU option, you can go to patch log directory and verify all patches are applied successfully. The location would be "/u01/app/19.0.0/grid/cfgtoollogs/opatchauto". [grid@rac1 opatchauto]$ pwd /u01/app/19.0.0/grid/cfgtoollogs/opatchauto [grid@rac1 opatchauto]$ ll drwxr-xr-x 4 grid oinstall 42 Jul 16 11:59 core -rw-r----- 1 grid oinstall 611497 Jul 16 12:23 opatchauto_2024-07-16_11-57-39_binary.log [grid@rac1 opatchauto]$ cat opatchauto_2024-07-16_11-57-39_binary.log ... ==Following patches were SUCCESSFULLY applied: Patch: /u01/setup/grid/36233126/36233263 Log: /u01/app/19.0.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2024-07-16_11-57-41AM_1.log Patch: /u01/setup/grid/36233126/36233343 Log: /u01/app/19.0.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2024-07-16_11-57-41AM_1.log Patch: /u01/setup/grid/36233126/36240578 Log: /u01/app/19.0.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2024-07-16_11-57-41AM_1.log Patch: /u01/setup/grid/36233126/36383196 Log: /u01/app/19.0.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2024-07-16_11-57-41AM_1.log Patch: /u01/setup/grid/36233126/36460248 Log: /u01/app/19.0.0/grid/cfgtoollogs/opatchauto/core/opatch/opatch2024-07-16_11-57-41AM_1.log 2024-07-16 12:23:43,041 INFO [1] oracle.opatchauto.core.binary.OACLogger - ... Patching operations Completed. 2024-07-16 12:23:43,041 INFO [1] oracle.opatchauto.core.binary.OACLogger - Backing up the SystemPatch metadata ... 
2024-07-16 12:23:43,052 INFO [1] oracle.opatchauto.core.binary.OACLogger - Backing up SystemPatch bundle.xml from "/u01/setup/grid/36233126" to "/u01/app/19.0.0/grid/.opatchauto_storage/system_patches/36233126" 2024-07-16 12:23:43,059 INFO [1] oracle.opatchauto.core.binary.OACLogger - Successfully backed up the bundle.xml 2024-07-16 12:23:43,059 INFO [1] oracle.opatchauto.core.binary.OACLogger - This File already exists and it will be deleted first: /u01/app/19.0.0/grid/.opatchauto_storage/system_patches/36233126/bundle.xml 2024-07-16 12:23:43,059 INFO [1] oracle.opatchauto.core.binary.OACLogger - Backing up SystemPatch bundle.xml from "/u01/setup/grid/36233126" to "/u01/app/19.0.0/grid/.opatchauto_storage/system_patches/36233126" 2024-07-16 12:23:43,059 INFO [1] oracle.opatchauto.core.binary.OACLogger - Successfully backed up the bundle.xml 2024-07-16 12:23:43,060 INFO [1] oracle.opatchauto.core.binary.OACLogger - opatchauto SUCCEEDED. |
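Reading a 600 KB log by eye is tedious, so the check can be reduced to two greps. This is a minimal sketch, assuming the log format shown above: applied sub-patches appear as "Patch: <path>" entries and a successful run ends with "opatchauto SUCCEEDED.". The helper name `opatchauto_summary` is hypothetical, and a real log may contain other "Patch:" entries (for example for skipped patches), so treat the output as a quick summary rather than a replacement for reading the log.

```shell
#!/bin/sh
# List the sub-patch IDs that an opatchauto binary log mentions as "Patch: <path>",
# and return success only if the log also contains "opatchauto SUCCEEDED".
# opatchauto_summary is a hypothetical helper name.
opatchauto_summary() {
    log=$1
    # Keep only the last path component of each "Patch: <path>" entry.
    grep -o 'Patch: [^ ]*' "$log" | sed 's|.*/||' | sort -u
    # The exit status of the function is the status of this final check.
    grep -q 'opatchauto SUCCEEDED' "$log"
}

# Usage, with the log file name from the listing above:
#   cd /u01/app/19.0.0/grid/cfgtoollogs/opatchauto
#   opatchauto_summary opatchauto_2024-07-16_11-57-39_binary.log && echo "RU applied"
```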

Select "Configure Oracle Grid Infrastructure for a New Cluster" option and click NEXT to proceed.

Select "Configure an Oracle Standalone Cluster" option and click NEXT to proceed.

Enter the SCAN name that you added to the "/etc/hosts" file, along with the SCAN port.

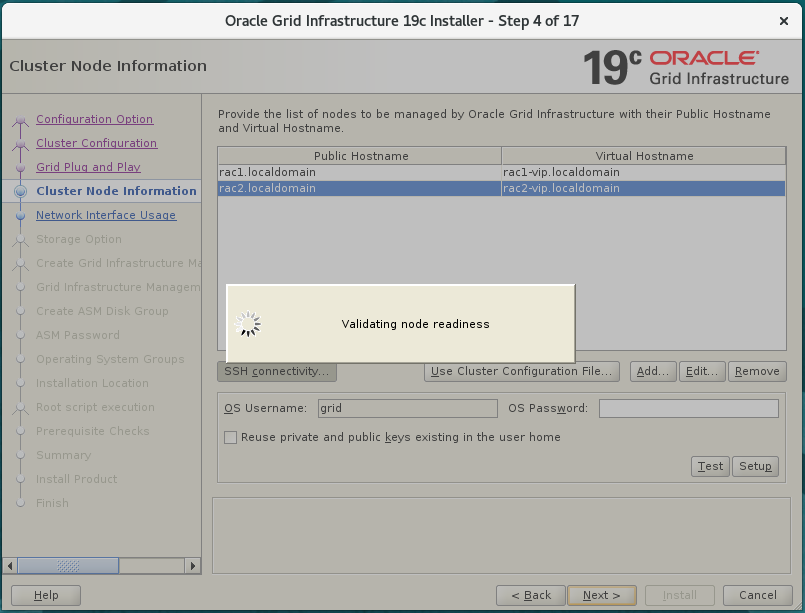
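Since this environment does not use DNS, the installer can only resolve the SCAN name through "/etc/hosts", and the entry must be present on every node. A quick way to confirm that before filling in the screen is sketched below. The helper name `hosts_lookup` and the SCAN name `rac-scan` are placeholders chosen for illustration; substitute the name you actually configured.

```shell
#!/bin/sh
# Print the IP address(es) that a hosts-format file maps to a given name.
# hosts_lookup is a hypothetical helper; the file defaults to /etc/hosts.
hosts_lookup() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v n="$name" '
        /^[ \t]*#/ { next }                  # skip comment lines
        {
            sub(/#.*/, "")                   # drop trailing comments
            for (i = 2; i <= NF; i++)        # field 1 is the IP, the rest are names
                if ($i == n) { print $1; break }
        }' "$file"
}

# Usage, with a placeholder SCAN name (run on every node):
#   hosts_lookup rac-scan
```

An empty result on any node means the entry is missing there and should be added before continuing.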

By default, you will find the public and virtual node details on the screen. You have to manually add public and virtual hostname for 2nd node as well.

Please note that SSH authentication or passwordless configuration or user equivalence can be configured here by clicking "ssh connectivity" option. Here, I am not going to configure ssh since I have already configured it manually.

The below message confirms that ssh connectivity is working fine.

Specify Network Interface Usage:

For each interface, in the Interface Name column, identify the interface using one of the following options:

- Public: A public network interface, identified with a public subnet.

- Private: A private network interface, which should be accessible only to other cluster member nodes, and should be identified with a subnet in the private range.

- ASM: A private network interface, which should be accessible only to other ASM Server or Client cluster member nodes, and should be identified with a subnet in the private range. Interfaces connected to this network are used for the cluster interconnect, for storage access, or for access to voting files and OCR files. Because you must place OCR and voting files on Oracle ASM, you must have at least one interface designated either as ASM, or as ASM & Private.

- ASM & Private: A private network interface, which should be accessible only to other ASM Server or Client cluster member nodes, and should be identified with a subnet in the private range. Interfaces connected to this network are used for the cluster interconnect, for storage access, or for access to voting disk files and OCR files placed on Oracle ASM.

- Do Not Use: An interface that you do not want the Oracle Grid Infrastructure installation to use, because you intend to use it for other applications.

Storage Option Information:

The Oracle Cluster Registry (OCR) and voting disks are used to manage the cluster. You must place OCR and voting disks on shared storage. On Linux and UNIX, you can use either Oracle Automatic Storage Management (Oracle ASM) or shared file system to store OCR and voting disks, and on Windows, you must place them on Oracle ASM. Select from among the following options:

- Use Oracle Flex ASM for storage: In Oracle Flex Cluster configurations, storage that is managed with Oracle ASM instances installed on the same cluster, where the access you are configuring is an Oracle Flex ASM configuration. In an Oracle Flex ASM configuration, IOServer cluster resources are started on a subset of nodes in the Oracle Flex Cluster instances.

- Use Shared File System: Select this method if you want to store the OCR and voting disks on a shared file system. This option is available only for Linux and UNIX platforms.

Create Grid Infrastructure Management Repository Option:

As a part of the Cluster Health Monitor (CHM) server monitor service, the Grid Infrastructure Management Repository can be optionally configured as part of Grid Infrastructure installations. The Grid Infrastructure Management Repository is an Oracle database that collects data from all cluster member nodes, and performs analysis on operating system data from each cluster member node to determine resource issues for the cluster as a whole. The data that is collected and analyzed by the GIMR is stored on shared storage.

Select from the following options:

Yes: Select this option if you want to create Grid Infrastructure Management Repository for your cluster.

No: Select this option if you do not want to create Grid Infrastructure Management Repository for your cluster.

Create ASM Disk Group:

Provide the name of the initial disk group you want to configure in the Disk Group Name field. The Add Disks table displays disks that are configured as candidate disks. Select the number of candidate or provisioned disks (or partitions on a file system) required for the level of redundancy that you want for your first disk group.

For standard disk groups:

- High redundancy requires a minimum of three disks.

- Normal redundancy requires a minimum of two disks.

- External redundancy requires a minimum of one disk.

- Flex redundancy requires a minimum of three disks.

Oracle Cluster Registry and voting files for Oracle Grid Infrastructure for a cluster are configured on Oracle ASM. Hence, the minimum number of disks required for the disk group is higher:

- High redundancy requires a minimum of five disks.

- Normal redundancy requires a minimum of three disks.

- External redundancy requires a minimum of one disk.

- Flex redundancy requires a minimum of three disks.

If you are configuring an Oracle Extended Cluster installation, then you can also choose an additional Extended redundancy option. The number of supported sites for extended redundancy is three. For extended redundancy with three sites, for example, two data sites, and one quorum failure group, the minimum number of disks is seven. For an Oracle Extended Cluster, you also need to select the site for each failure group.

Voting disk files require a higher number of minimum disks to provide the required separate physical devices for quorum failure groups, so that a quorum of voting disk files are available even if one failure group becomes unavailable. You must place voting disk files on Oracle ASM, therefore, ensure that you have enough disks available for the redundancy level you require.

If you selected redundancy as Flex, Normal, or High, then you can click Specify Failure Groups and provide details of the failure groups to use for Oracle ASM disks. Select the quorum failure group for voting files.

If you do not see candidate disks displayed, then click Change Discovery Path, and enter a path where Oracle Universal Installer (OUI) can find candidate disks. Ensure that you specify the Oracle ASM discovery path for Oracle ASM disks. Select Configure Oracle ASM Filter Driver to use Oracle Automatic Storage Management Filter Driver (Oracle ASMFD) for configuring and managing your Oracle ASM disk devices. Oracle ASMFD simplifies the configuration and management of disk devices by eliminating the need to rebind disk devices used with Oracle ASM each time the system is restarted. Here, the ASM disks are configured by udev rules, not by oracleasm, which is why grid:oinstall ownership is assigned to /dev/sdb1, /dev/sdc1, and /dev/sdd1. You don't have to change the discovery path. Also, I am using NORMAL redundancy and hence I have configured 3 disks. [root@rac1 ~]# ls -ltra /dev/oracleasm/disks/* lrwxrwxrwx 1 root root 10 Jul 12 01:55 /dev/oracleasm/disks/OCR3 -> ../../sdd1 lrwxrwxrwx 1 root root 10 Jul 12 01:55 /dev/oracleasm/disks/OCR2 -> ../../sdc1 lrwxrwxrwx 1 root root 10 Jul 12 01:55 /dev/oracleasm/disks/OCR1 -> ../../sdb1 [root@rac1 ~]# ls -ld /dev/sd*1 brw-rw---- 1 root disk 8, 1 Jul 12 01:55 /dev/sda1 brw-rw---- 1 grid oinstall 8, 17 Jul 12 01:55 /dev/sdb1 brw-rw---- 1 grid oinstall 8, 33 Jul 12 01:55 /dev/sdc1 brw-rw---- 1 grid oinstall 8, 49 Jul 12 01:55 /dev/sdd1 You may ask why 3 disks are configured when NORMAL redundancy requires a minimum of only 2 disks for a standard disk group. This disk group holds the OCR and voting files, for which NORMAL redundancy requires a minimum of 3 disks. Voting files are kept in odd numbers so that a majority of them remains accessible when one is lost, which prevents node eviction issues. |
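The odd-number rule for voting files comes down to majority arithmetic: a node has to reach more than half of the voting files to stay in the cluster. The sketch below works this out for a few counts. It is only an illustration, and the helper names `votes_needed` and `failures_tolerated` are hypothetical.

```shell
#!/bin/sh
# Majority arithmetic for voting files: a node must reach more than half of them.
# Both helper names are hypothetical.
votes_needed() {        # smallest strict majority of $1 voting files
    echo $(( $1 / 2 + 1 ))
}
failures_tolerated() {  # voting files that can be lost while a majority remains
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Print the numbers for one to five voting files.
for n in 1 2 3 4 5; do
    echo "$n voting files: need $(votes_needed "$n"), tolerate $(failures_tolerated "$n") lost"
done
```

Two voting files tolerate no loss at all, the same as one, and four tolerate one loss, the same as three. An even count therefore adds a disk without adding protection, which is why three disks are used for NORMAL redundancy here.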

Specify ASM Password:

The Oracle Automatic Storage Management (Oracle ASM) system privileges (SYS) is called SYSASM, to distinguish it from the SYS privileges for database administration. The ASMSNMP privilege has a subset of SYS privileges.

Specify passwords for the SYSASM user and ASMSNMP user to grant access to administer the Oracle ASM storage tier. You can use different passwords for each account, to create role-based system privileges, or you can use the same password for each set of system privileges.

Failure Isolation Support:

The Intelligent Platform Management Interface (IPMI) specification defines a set of common interfaces to computer hardware and firmware that system administrators can use to monitor system health, and manage the server. IPMI operates independently of the operating system and allows administrators to manage a system remotely even in the absence of the operating system or the system management software, or even if the monitored system is not powered on. IPMI can also function when the operating system has started, and offers enhanced features when used with the system management software.

1) Use Intelligent Platform Management Interface (IPMI):

Oracle provides the option of implementing Failure Isolation Support using Intelligent Platform Management Interface (IPMI). Ensure that you have hardware installed and drivers in place before you select this option.

2) Do not use Intelligent Platform Management Interface (IPMI):

Select this option to choose not to use IPMI.

Specify Management Options:

You can manage Oracle Grid Infrastructure and Oracle Automatic Storage Management (Oracle ASM) using Oracle Enterprise Manager Cloud Control. Clear this option if you do not need it. If you select it, specify the following configuration details:

OMS Host: Host name where the Management repository is running.

OMS Port: Oracle Enterprise Manager port number to receive requests from the Management Service.

EM Admin User Name and Password: User name and password credentials to log in to Oracle Enterprise Manager.

Privileged Operating System Groups:

On Microsoft Windows operating systems, privileged operating system groups are created for you by default.

On Linux and UNIX, select operating system groups whose members you want to grant administrative privileges for the Oracle Automatic Storage Management storage. Members of these groups are granted system privileges for administration using operating system group authentication. You can use the same group to grant all system privileges, or you can define separate groups to provide role-based system privileges through membership in operating system groups:

Oracle ASM Administrator (OSASM) Group: Members are granted the SYSASM administrative privilege for ASM, which provides full administrator access to configure and manage the storage instance. If the installer finds a group on your system named asmadmin, then that is the default OSASM group.

You can use one group as your administrator group (such as dba), or you can separate system privileges by designating specific groups for each system privilege group. ASM can support multiple databases. If you plan to have more than one database on your system, then you can designate a separate OSASM group, and use a separate user from the database user to own the Oracle Clusterware and ASM installation.

Oracle ASM DBA (OSDBA for ASM) Group: Members are granted read and write access to files managed by ASM. The Oracle Grid Infrastructure installation owner must be a member of this group, and all database installation owners of databases that you want to have access to the files managed by ASM should be members of the OSDBA group for ASM. If the installer finds a group on your system called asmdba, then that is the default OSDBA for ASM group. Do not provide this value when you configure a client cluster, that is, if you have selected the Oracle ASM Client storage option.

Oracle ASM Operator (OSOPER for ASM) Group (Optional): Members are granted access to a subset of the SYSASM privileges, such as starting and stopping the storage tier. If the installer finds a group on your system called asmoper, then that is the default OSOPER for ASM group. If you do not have an asmoper group on your servers, or you want to designate another group whose members are granted the OSOPER for ASM privilege, then you can designate a group on the server. Leave the field blank if you choose not to specify an OSOPER for ASM privileges group.
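
The installer picks its defaults from groups that already exist, so it is worth checking them on each node first. The following is a minimal sketch using the standard getent and id utilities; the group names asmadmin, asmdba, and asmoper and the grid user come from this guide, while the helper names group_exists and user_in_group are hypothetical.

```shell
#!/bin/sh
# Exit 0 if the named operating system group exists.
group_exists() {
    getent group "$1" >/dev/null 2>&1
}

# Exit 0 if user $1 is a member of group $2 (primary or supplementary).
user_in_group() {
    id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qxF "$2"
}

# Report on the default group names the installer looks for.
for g in asmadmin asmdba asmoper; do
    if group_exists "$g"; then
        echo "$g: present"
    else
        echo "$g: missing"
    fi
done

# The Grid Infrastructure owner must be a member of the OSDBA for ASM group.
if user_in_group grid asmdba; then
    echo "grid is a member of asmdba"
else
    echo "grid is NOT a member of asmdba"
fi
```

A missing asmoper group is not an error, because the OSOPER for ASM group is optional.
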

During installation, you are prompted to specify an Oracle base location, which is owned by the user performing the installation. The Oracle base directory is where log files specific to the user are placed. You can choose a directory location that does not have the structure for an Oracle base directory. The Oracle base directory for the Oracle Grid Infrastructure installation is the location where diagnostic and administrative logs, and other logs associated with Oracle ASM and Oracle Clusterware, are stored. For Oracle installations other than Oracle Grid Infrastructure for a cluster, it is also the location under which an Oracle home is placed.

Oracle recommends that you install Oracle Grid Infrastructure binaries on local homes, rather than using a shared home on shared storage.

Root Script Execution Configuration:

1) If you want to run scripts manually as root for each cluster member node, then click Next to proceed to the next screen.

2) If you want to delegate the privilege to run scripts with administration privileges, then select Automatically run configuration scripts, and select from one of the following delegation options:

Use the root password: Provide the password to the installer as you are providing other configuration information. The root user password must be identical on each cluster member node.

Use Sudo: Sudo is a UNIX and Linux utility that allows users listed in the sudoers file to run individual commands as root. Provide the user name and password of an operating system user that is a member of sudoers, and is authorized to run sudo on each cluster member node.
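
If you choose to run the scripts manually, the installer later asks you to run orainstRoot.sh and root.sh as root on each node. The sketch below only prints the order in which to run them and does not execute anything. The inventory path, Grid home path, and the second node name rac2 are assumptions for illustration, and print_root_script_plan is a hypothetical helper; use the exact paths the installer displays.

```shell
#!/bin/sh
# Hypothetical helper: print a numbered plan for running the root scripts.
#   $1 = inventory directory, $2 = Grid home, remaining arguments = node names
print_root_script_plan() {
    rsp_inv="$1"
    rsp_home="$2"
    shift 2
    rsp_step=1
    # orainstRoot.sh goes first on every node, then root.sh node by node.
    for rsp_script in "$rsp_inv/orainstRoot.sh" "$rsp_home/root.sh"; do
        for rsp_node in "$@"; do
            echo "$rsp_step. [root@$rsp_node] $rsp_script"
            rsp_step=$((rsp_step + 1))
        done
    done
}

# Assumed paths and node names, for illustration only.
print_root_script_plan /u01/app/oraInventory /u01/app/19.0.0/grid rac1 rac2
```

Let root.sh finish on the first node before starting it on the remaining nodes.
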