Environment Configuration Details:

Operating System: Red Hat Enterprise Linux 8.4 (64-bit)

Oracle and Grid Software version: 21.0.0.0

RAC: YES

Pluggable: No

DNS: No

Points to be checked before starting RAC installation prerequisites:

1) Am I downloading the correct version of the GRID and Oracle DB software?

2) Are my GRID and database software certified on the current operating system?

3) Is my GRID and database software 32-bit or 64-bit?

4) Is the operating system architecture 32-bit or 64-bit?

5) Is the operating system kernel version compatible with the software to be installed?

6) Is my server at runlevel 3 or 5?

7) Ensure at least 8 GB of RAM is available for the Oracle Grid Infrastructure installation.

8) Oracle strongly recommends disabling Transparent HugePages and using standard HugePages for better performance.

9) To install Oracle RAC 21c, you must install Oracle Grid Infrastructure (Oracle Clusterware and Oracle ASM) 21c on your cluster.

10) The Oracle Clusterware version must be equal to or greater than the Oracle RAC version that you plan to install.

11) Use identical server hardware on each node, to simplify server maintenance.
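Point 8 can be checked on a running server. The following is a minimal sketch, assuming the kernel exposes the usual /sys/kernel/mm/transparent_hugepage/enabled file, in which the active mode is the one shown in square brackets. The helper name thp_active_mode is hypothetical and not part of any Oracle tooling.

```shell
#!/bin/sh
# Extract the active Transparent HugePages mode from a string such as
# "always madvise [never]" (the active mode is the one in brackets).
thp_active_mode() {
    printf '%s\n' "$1" | sed -n 's/.*\[\([a-z]*\)\].*/\1/p'
}

# Assumed location of the THP setting on RHEL 8; skipped if not readable.
THP_FILE=/sys/kernel/mm/transparent_hugepage/enabled
if [ -r "$THP_FILE" ]; then
    mode=$(thp_active_mode "$(cat "$THP_FILE")")
    if [ "$mode" = "never" ]; then
        echo "Transparent HugePages: disabled"
    else
        echo "Transparent HugePages: active mode is '$mode' (expected 'never')"
    fi
fi
```

The script only reads the current setting; it does not change it.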

Steps to install and configure Oracle 21c Grid Infrastructure -- Part - I

Step 1: Certification Matrix

Oracle Real Application Clusters 21.0.0.0.0 is certified on Linux x86-64 Red Hat Enterprise Linux 8 Update 2+

RHEL 8.2 with kernel version: 4.18.0-193.19.1.el8_2.x86_64 or later
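The kernel requirement can be compared against the running kernel. This is a sketch only: it assumes GNU `sort -V` is available for version ordering, and it compares just the numeric part of the release string before ".el". The function name kernel_at_least is hypothetical.

```shell
#!/bin/sh
# Return success if kernel release $1 is at least the minimum release $2.
# Only the numeric part before ".el" is compared (e.g. 4.18.0-193.19.1).
kernel_at_least() (
    cur=${1%%.el*}
    min=${2%%.el*}
    # With version sort, the minimum must come first (or be equal).
    lowest=$(printf '%s\n%s\n' "$min" "$cur" | sort -V | head -n 1)
    [ "$lowest" = "$min" ]
)

MIN_KERNEL="4.18.0-193.19.1.el8_2.x86_64"
if kernel_at_least "$(uname -r)" "$MIN_KERNEL"; then
    echo "Kernel $(uname -r) meets the minimum $MIN_KERNEL"
else
    echo "Kernel $(uname -r) is older than $MIN_KERNEL"
fi
```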

32/64 Bit Compatibility:

- Oracle Real Application Clusters 21.0.0.0 64 Bit is compatible with Linux x86-64 Red Hat Enterprise Linux 8 64 Bit.

- Oracle Real Application Clusters 21.0.0.0 64 Bit is not compatible with Linux x86-64 Red Hat Enterprise Linux 8 32 Bit.

Step 2: Server Configuration

- At least 1 GB of space in the temporary directory (/tmp).

- Swap space:

  - Between 4 GB and 16 GB of RAM: equal to RAM

  - More than 16 GB of RAM: 16 GB

If you enable HugePages for your Linux servers, then you should deduct the memory allocated to HugePages from the available RAM before calculating swap space.
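The swap sizing rules above, including the HugePages deduction, can be written as a small calculation. This is a sketch under the stated rules only: the function name recommended_swap_gb is hypothetical, and because the text gives no rule for less than 4 GB of RAM, that case is reported as an error rather than guessed.

```shell
#!/bin/sh
# Recommended swap size in GB, following the sizing rules listed above.
#   $1 = physical RAM in GB, $2 = RAM reserved for HugePages in GB (default 0)
recommended_swap_gb() (
    ram=$1
    huge=${2:-0}
    effective=$((ram - huge))      # deduct HugePages before sizing swap
    if [ "$effective" -lt 4 ]; then
        echo "no rule for less than 4 GB of RAM" >&2
        exit 1
    elif [ "$effective" -le 16 ]; then
        echo "$effective"          # 4 GB to 16 GB: equal to RAM
    else
        echo 16                    # more than 16 GB: 16 GB
    fi
)

recommended_swap_gb 8        # 8
recommended_swap_gb 64 40    # 16
recommended_swap_gb 24 12    # 12
```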

- Allocate memory to HugePages large enough for the System Global Areas (SGA) of all databases planned to run on the cluster, and to accommodate the System Global Area for the Grid Infrastructure Management Repository.

- Oracle Clusterware requires the same time zone environment variable setting on all cluster nodes. Ensure that you set the time zone synchronization across all cluster nodes using either an operating system configured network time protocol (NTP) or Oracle Cluster Time Synchronization Service.

- By default, your operating system includes an entry in /etc/fstab to mount /dev/shm. Ensure that the /dev/shm mount area is of type tmpfs and is mounted with the following options:

- rw and exec permissions set on it

- Without noexec or nosuid set on it

- Oracle home or Oracle base cannot be symlinks, nor can any of their parent directories, all the way up to the root directory.
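The /dev/shm requirements listed above (type tmpfs, rw, no noexec, no nosuid) can be verified with a short check. This is a sketch: the function name shm_mount_ok is hypothetical, and the live check assumes the `findmnt` utility from util-linux is installed.

```shell
#!/bin/sh
# Return success if a mount entry satisfies the /dev/shm requirements:
# type tmpfs, option rw present, options noexec and nosuid absent.
#   $1 = filesystem type, $2 = comma-separated mount options
shm_mount_ok() (
    fstype=$1
    opts=",$2,"
    [ "$fstype" = "tmpfs" ] || exit 1
    case $opts in *,rw,*) ;; *) exit 1 ;; esac
    case $opts in *,noexec,*|*,nosuid,*) exit 1 ;; esac
    exit 0
)

# Inspect the live mount when findmnt (util-linux) is available.
if command -v findmnt >/dev/null 2>&1 && findmnt /dev/shm >/dev/null 2>&1; then
    if shm_mount_ok "$(findmnt -n -o FSTYPE /dev/shm)" "$(findmnt -n -o OPTIONS /dev/shm)"; then
        echo "/dev/shm: OK"
    else
        echo "/dev/shm: check the mount type and options"
    fi
fi
```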

Public Networks:

- Public network switch connected to a public gateway and to the public interface ports for each cluster member node (redundant switches recommended).

- Ethernet interface card (redundant network cards recommended, bonded as one Ethernet port name).

- The switches and network interfaces must be at least 1 GbE.

- The network protocol is Transmission Control Protocol (TCP) and Internet Protocol (IP).

Private network hardware for the interconnect:

- Private dedicated network switches (redundant switches recommended), connected to the private interface ports for each cluster member node. If you have more than one private network interface card for each server, then Oracle Clusterware automatically associates these interfaces for the private network using Grid Interprocess Communication (GIPC) and Grid Infrastructure Redundant Interconnect, also known as Cluster High Availability IP (HAIP).

- The switches and network interface adapters must be at least 1 GbE.

- The interconnect must support the user datagram protocol (UDP).

- Jumbo Frames (Ethernet frames with a payload greater than 1500 bytes) are not an IEEE standard, but can reduce UDP overhead if properly configured. Oracle recommends the use of Jumbo Frames for interconnects. However, you must load-test your system and ensure that Jumbo Frames are enabled throughout the stack (network cards, switches, and operating system).
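One common way to confirm that Jumbo Frames work end to end is a non-fragmenting ping whose payload just fits the MTU. The sketch below shows only the arithmetic. It assumes IPv4 without header options, and the MTU of 9000 is a typical jumbo value rather than one taken from this setup. The function name max_ping_payload is hypothetical.

```shell
#!/bin/sh
# Largest ICMP payload that fits in one unfragmented IPv4 packet:
# MTU minus 20 bytes (IPv4 header, no options) minus 8 bytes (ICMP header).
max_ping_payload() {
    echo $(( $1 - 28 ))
}

max_ping_payload 1500   # 1472
max_ping_payload 9000   # 8972

# Example (not executed here): from rac1, send non-fragmenting pings of that
# size to the private address of rac2; failures suggest a smaller MTU on the path.
#   ping -c 3 -M do -s "$(max_ping_payload 9000)" rac2-priv
```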

Oracle Flex ASM Network Hardware:

- Oracle Flex ASM can use either the same private networks as Oracle Clusterware, or use its own dedicated private networks. Each network can be classified PUBLIC or PRIVATE+ASM or PRIVATE or ASM. Oracle ASM networks use the TCP protocol.

For 2-Node RAC configuration:

- 2 public IPs

- 2 private IPs

- 2 virtual IPs

- 1 or 3 SCAN IPs (with 1 SCAN IP, define it in the /etc/hosts file; with 3, use DNS for round-robin resolution)

Here, I have used the IP ranges below for the 2-node RAC configuration.

Note that the public, VIP, and SCAN IPs come from the same range here, while the private IPs come from a different one. The public, VIP, and SCAN addresses need not be consecutive, but they must share the public subnet.

- Each node must have at least two network adapters: one for the public network interface (TCP/IP) and one for the private network interconnect (UDP).

- To improve availability, backup public and private network adapters can be configured for each node.

- The interface names associated with the network adapters for each network must be the same on all nodes. For example, if eth1 is used for the public network on the first node, then eth1 must be the public interface on the second node as well; it cannot be used for the private network there.

- The virtual IP address and the network name must not be currently in use.

- The virtual IP address must be on the same subnet as your public IP address.

    #Public IP
    10.20.30.101 rac1.localdomain rac1
    10.20.30.102 rac2.localdomain rac2
    #Private IP
    10.1.2.201 rac1-priv.localdomain rac1-priv
    10.1.2.202 rac2-priv.localdomain rac2-priv
    #VIP IP
    10.20.30.103 rac1-vip.localdomain rac1-vip
    10.20.30.104 rac2-vip.localdomain rac2-vip
    #scan IP
    10.20.30.105 rac-scan.localdomain rac-scan
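The rule that each VIP must be on the same subnet as the public IP can be checked with plain shell arithmetic. This is a sketch that assumes a /24 netmask, which is not stated in this article and should be adjusted to your network. The helper names ip_to_int and same_subnet are hypothetical.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    IFS=. read -r a b c d <<EOF
$1
EOF
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
)

# Return success if two addresses share a network for the given prefix length.
#   $1, $2 = IPv4 addresses, $3 = prefix length (24 is an assumption here)
same_subnet() (
    prefix=$3
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
)

same_subnet 10.20.30.101 10.20.30.103 24 && echo "rac1 and rac1-vip share a subnet"
same_subnet 10.20.30.101 10.1.2.201 24  || echo "rac1 and rac1-priv are on different subnets"
```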

Storage Configuration:

At least 12 GB of space for the Oracle Grid Infrastructure for a cluster home (Grid home). Oracle recommends that you allocate 100 GB to allow additional space for patches. At least 10 GB for Oracle Database Enterprise Edition.

Starting with Oracle Grid Infrastructure 19c, configuring GIMR is optional for Oracle Standalone Cluster deployments.

For installation, configure at least the following storage:

- 100 GB on each node - local storage /u01 for storing GRID and ORACLE binaries

- 20 GB on each node - local storage /osw for storing oswatcher logs (Optional)

- 10 GB * 3 disks for storing OCR and Voting files - shared storage in case of normal redundancy. If you are using high redundancy then use 10 GB * 5 disks.

Step 3: Download Software

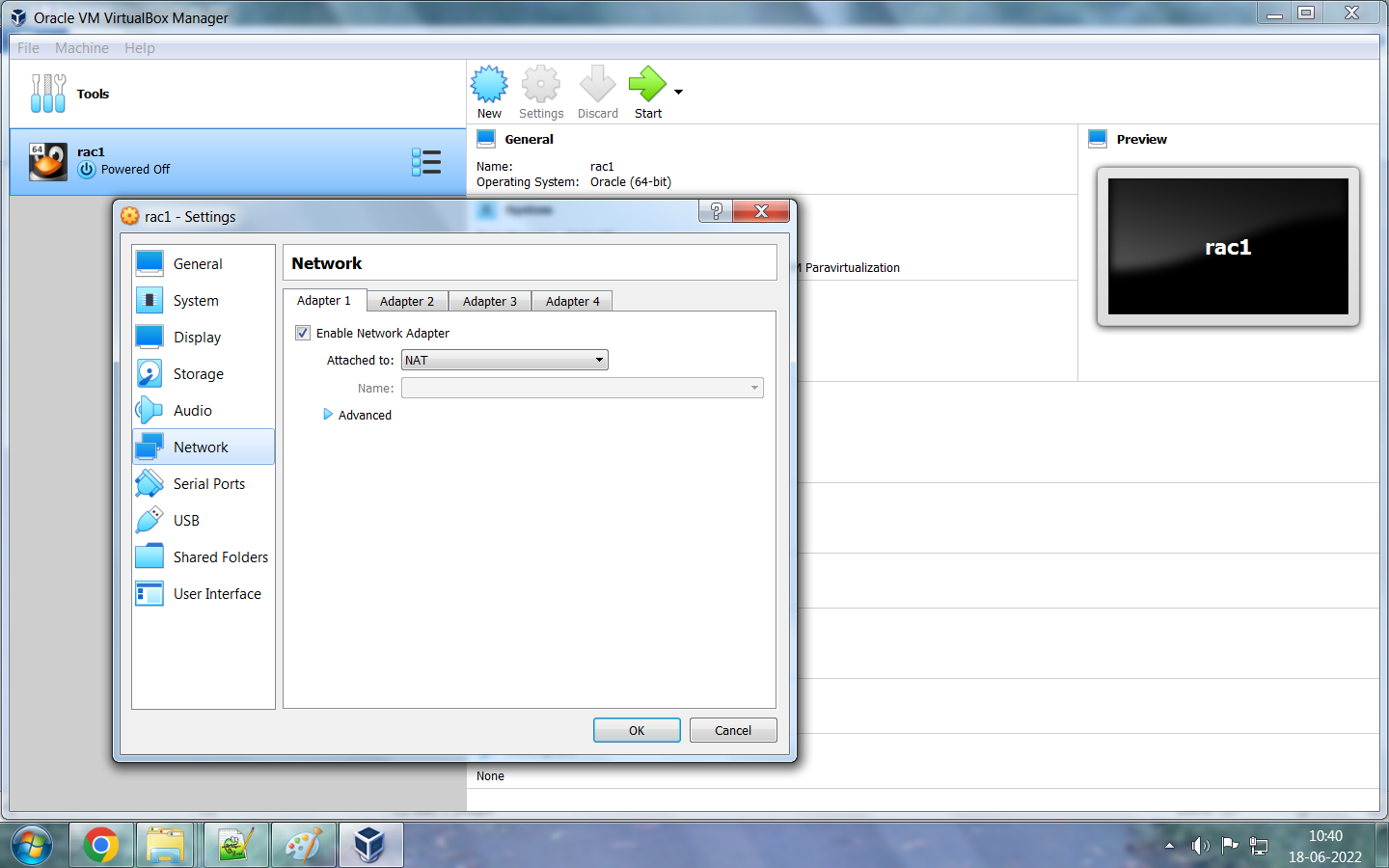

Step 4: Virtual Machine Configuration

Click on "New" tab.

In the pop-up box that appears, edit the details as shown below.

In the memory section, I am allocating only 6 GB. Add more memory for an actual production environment.

Click on "Create a virtual hard disk now" tab.

Click on "VDI(Virtual Box Disk Image)" tab.

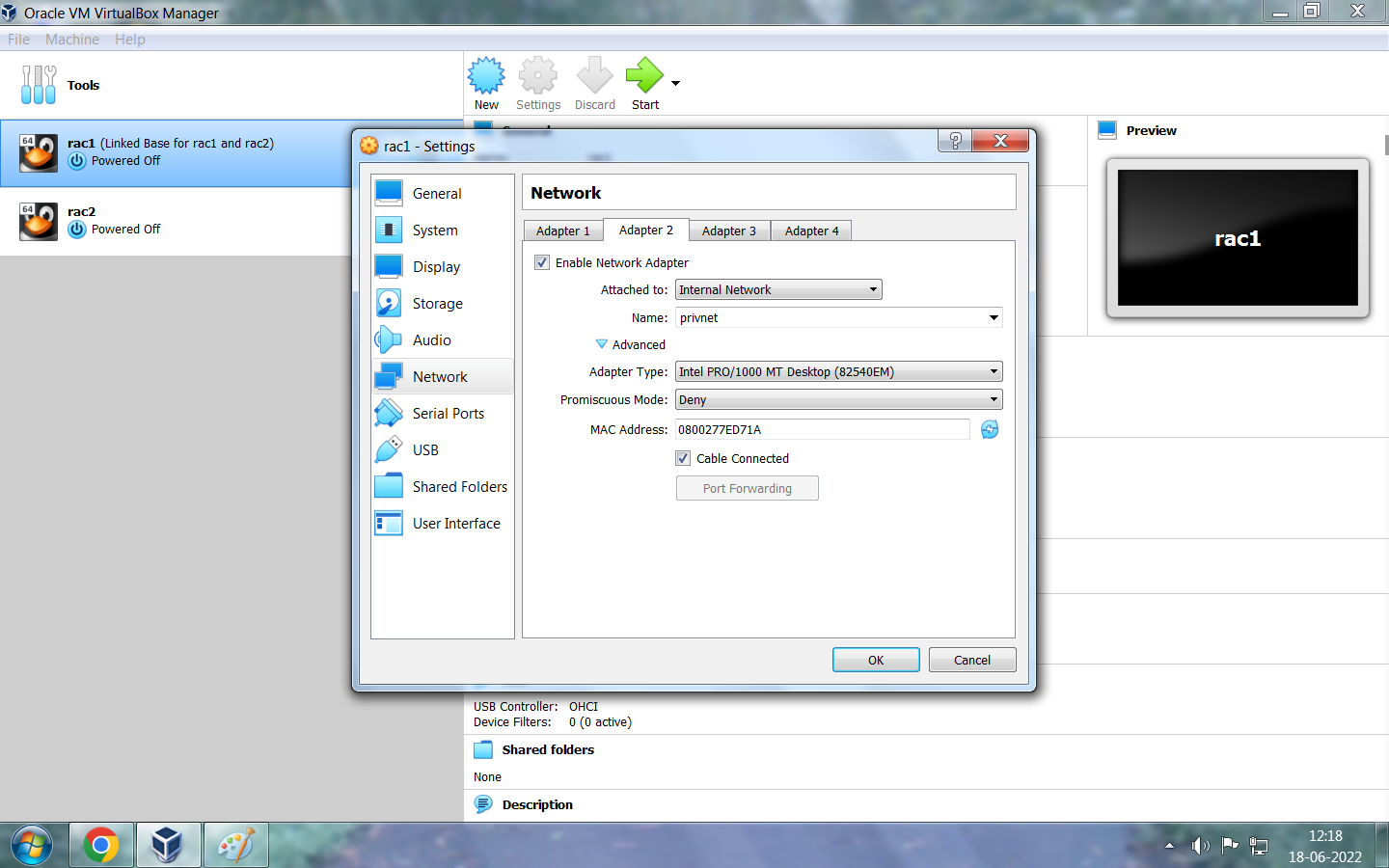

Define the public network as pubnet and the private network as privnet.

For Node1 : pubnet and privnet

For Node2: pubnet and privnet

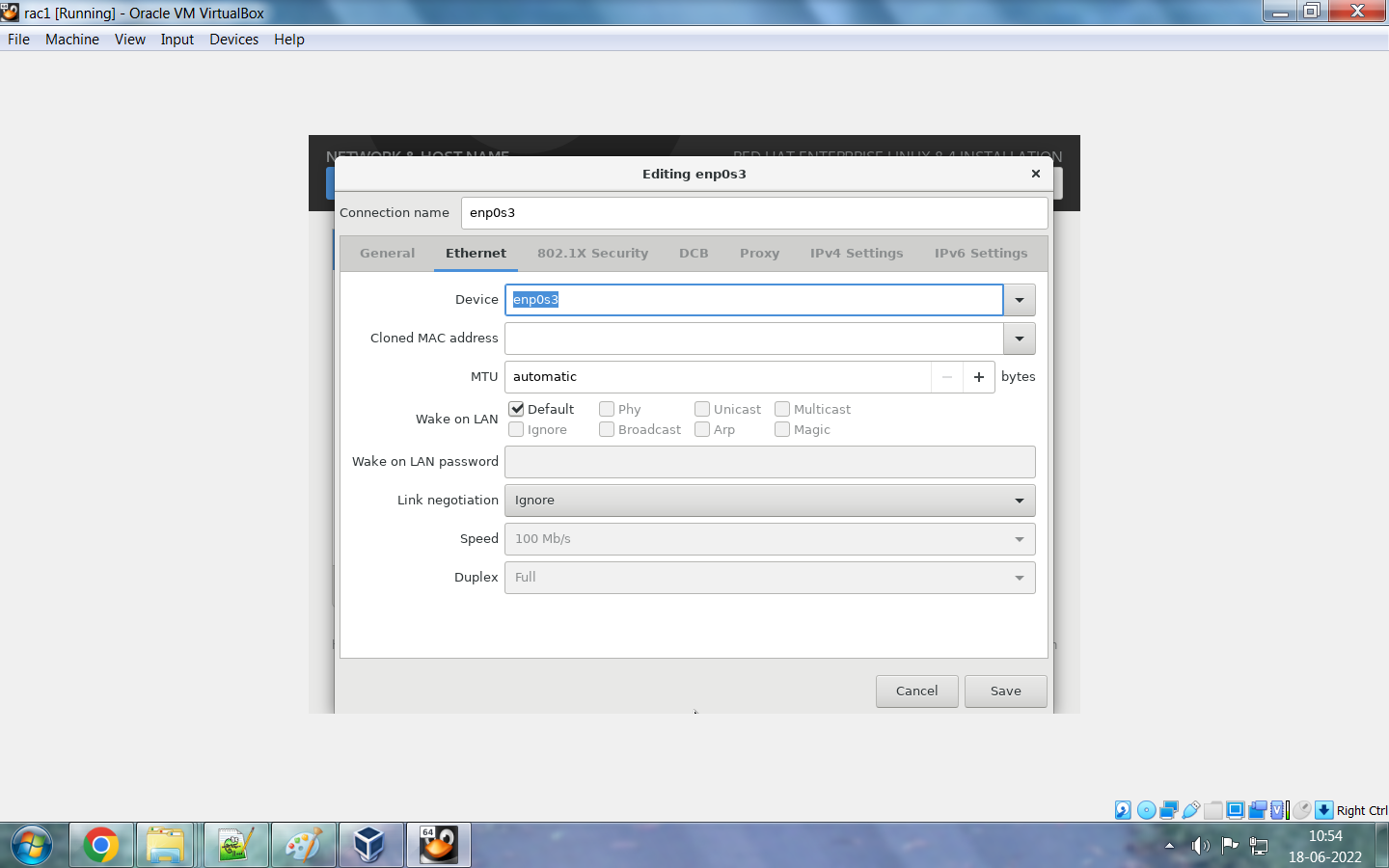

Here, I have used the IPs below for the public and private networks. Also, add the hostname for node1. We will add the IPs for the second node later, as part of the cloning process.

    #Public IP
    10.20.30.101 rac1.localdomain rac1
    10.20.30.102 rac2.localdomain rac2
    #Private IP
    10.1.2.201 rac1-priv.localdomain rac1-priv
    10.1.2.202 rac2-priv.localdomain rac2-priv

Click on "ON" and then "Done" option. The network status of both adapters should be "connected".

Step 6: Perform post installation checks.

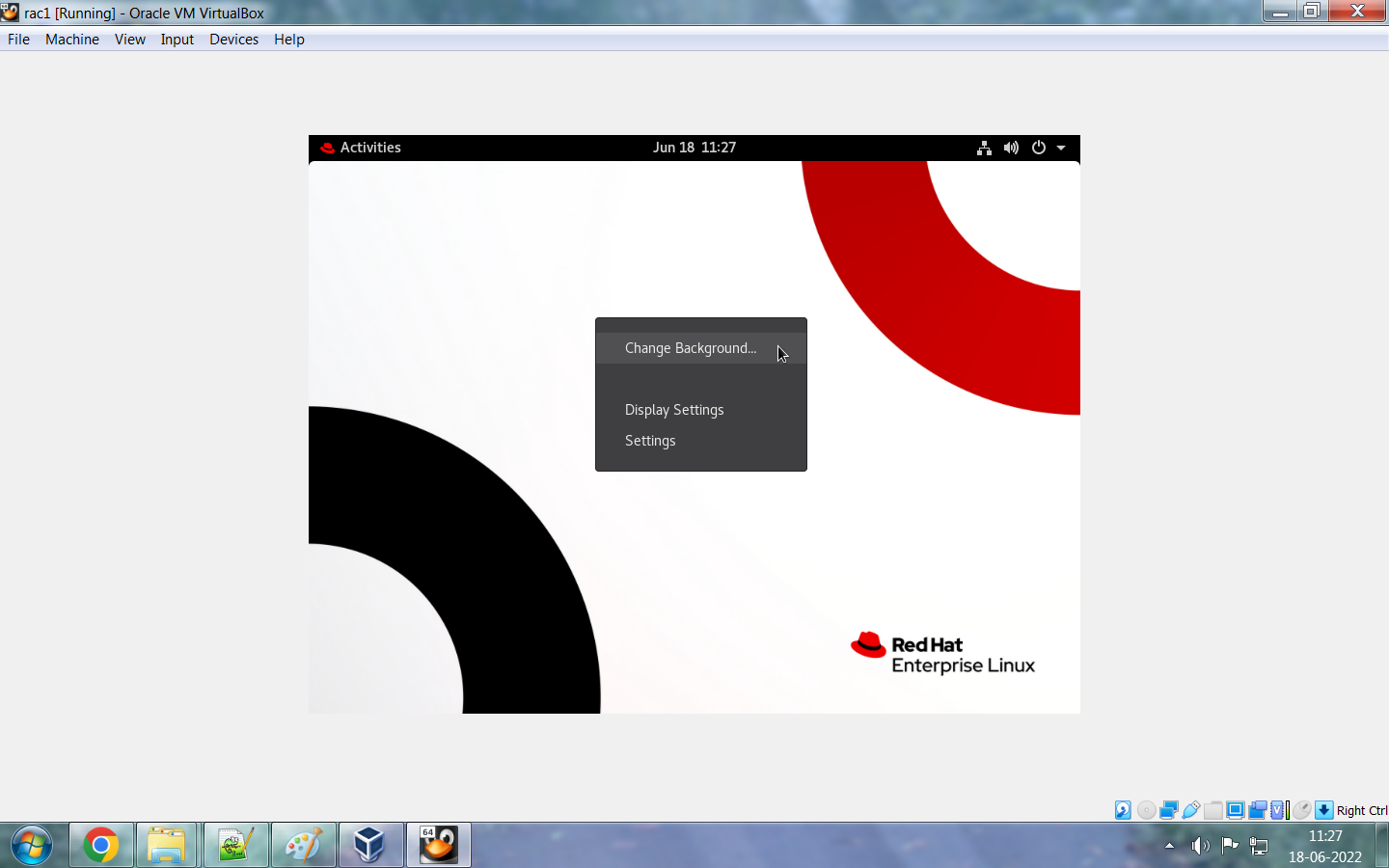

Click on the "Insert Guest Additions CD image" option to enable copy/paste and drag/drop of files between your desktop machine and the virtual machine. It will ask for root user credentials; alternatively, log in as the root user to run this.

Now it's time to make changes at the server level. Perform the checks below after the OS installation.

- Update /etc/hosts file

- Stop and disable Firewall

- Disable SELINUX

- Create directory structure

- User and group creation with permissions

- Add limits and kernel parameters in configuration files

Make the above changes on the server before cloning the machine; otherwise, you will have to repeat them on the cloned server as well.
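The checklist mentions disabling SELinux, but no commands for it are shown in this part. One common approach is sketched below. It is an assumption rather than the exact method used here: the helper name set_selinux_mode is hypothetical, /etc/selinux/config is the usual RHEL location of the setting, and `sed -i` assumes GNU sed.

```shell
#!/bin/sh
# Set the SELINUX= line of an SELinux config file to the given mode.
#   $1 = config file (normally /etc/selinux/config), $2 = mode (default: disabled)
set_selinux_mode() {
    sed -i "s/^SELINUX=.*/SELINUX=${2:-disabled}/" "$1"
}

# Demonstration on a scratch copy, so nothing on the system is changed.
demo=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
set_selinux_mode "$demo"
cat "$demo"
rm -f "$demo"

# On the real servers (as root, on both nodes) this would be:
#   set_selinux_mode /etc/selinux/config   # takes effect after a reboot
#   setenforce 0                           # permissive until then
```

The pattern matches only the line starting with `SELINUX=`, so the `SELINUXTYPE=` line is left untouched.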

/etc/hosts file entry for both Nodes:

    #Public IP
    10.20.30.101 rac1.localdomain rac1
    10.20.30.102 rac2.localdomain rac2
    #Private IP
    10.1.2.201 rac1-priv.localdomain rac1-priv
    10.1.2.202 rac2-priv.localdomain rac2-priv
    #VIP IP
    10.20.30.103 rac1-vip.localdomain rac1-vip
    10.20.30.104 rac2-vip.localdomain rac2-vip
    #scan IP
    10.20.30.105 rac-scan.localdomain rac-scan

Commands to view/stop/disable firewall:

    # systemctl status firewalld
    # systemctl stop firewalld
    # systemctl disable firewalld
    # systemctl status firewalld

User and group creation with permissions:

    [root@rac1 ~]# groupadd -g 2000 oinstall
    [root@rac1 ~]# groupadd -g 2100 asmadmin
    [root@rac1 ~]# groupadd -g 2200 dba
    [root@rac1 ~]# groupadd -g 2300 oper
    [root@rac1 ~]# groupadd -g 2400 asmdba
    [root@rac1 ~]# groupadd -g 2500 asmoper
    [root@rac1 /]# useradd grid
    [root@rac1 /]# useradd oracle
    [root@rac1 /]# passwd grid
    Changing password for user grid.
    New password:
    BAD PASSWORD: The password is shorter than 8 characters
    Retype new password:
    passwd: all authentication tokens updated successfully.
    [root@rac1 /]#
    [root@rac1 /]# passwd oracle
    Changing password for user oracle.
    New password:
    BAD PASSWORD: The password is shorter than 8 characters
    Retype new password:
    passwd: all authentication tokens updated successfully.
    [root@rac1 /]# usermod -g oinstall -G asmadmin,dba,oper,asmdba,asmoper grid
    [root@rac1 /]# usermod -g oinstall -G asmadmin,dba,oper,asmdba,asmoper oracle
    [root@rac1 /]# id grid
    uid=1001(grid) gid=2000(oinstall) groups=2000(oinstall),2100(asmadmin),2200(dba),2300(oper),2400(asmdba),2500(asmoper)
    [root@rac1 /]# id oracle
    uid=1002(oracle) gid=2000(oinstall) groups=2000(oinstall),2100(asmadmin),2200(dba),2300(oper),2400(asmdba),2500(asmoper)

Create directory structure:

    [root@rac1 /]# mkdir -p /u01/app/grid
    [root@rac1 /]# mkdir -p /u01/app/21.0.0/grid
    [root@rac1 /]# mkdir -p /u01/app/oraInventory
    [root@rac1 /]# mkdir -p /u01/app/oracle
    [root@rac1 /]# chown -R grid:oinstall /u01/app/grid
    [root@rac1 /]# chown -R grid:oinstall /u01/app/21.0.0/grid
    [root@rac1 /]# chown -R grid:oinstall /u01/app/oraInventory
    [root@rac1 /]# chown -R oracle:oinstall /u01/app/oracle
    [root@rac1 /]# chmod -R 755 /u01/app/grid
    [root@rac1 /]# chmod -R 755 /u01/app/21.0.0/grid
    [root@rac1 /]# chmod -R 755 /u01/app/oraInventory
    [root@rac1 /]# chmod -R 755 /u01/app/oracle

Adding kernel and limits configuration parameters:

    [root@rac1 /]# vi /etc/sysctl.conf
    [root@rac1 /]# sysctl -p
    fs.file-max = 6815744
    kernel.sem = 250 32000 100 128
    kernel.shmmni = 4096
    kernel.shmall = 1073741824
    kernel.shmmax = 4398046511104
    kernel.panic_on_oops = 1
    net.core.rmem_default = 262144
    net.core.rmem_max = 4194304
    net.core.wmem_default = 262144
    net.core.wmem_max = 1048576
    net.ipv4.conf.all.rp_filter = 2
    net.ipv4.conf.default.rp_filter = 2
    fs.aio-max-nr = 1048576
    net.ipv4.ip_local_port_range = 9000 65500
    [root@rac1 /]# vi /etc/security/limits.conf
    [root@rac1 /]# cat /etc/security/limits.conf | grep -v "#"
    oracle soft nofile 1024
    oracle hard nofile 65536
    oracle soft nproc 16384
    oracle hard nproc 16384
    oracle soft stack 10240
    oracle hard stack 32768
    oracle hard memlock 134217728
    oracle soft memlock 134217728
    oracle soft data unlimited
    oracle hard data unlimited
    grid soft nofile 1024
    grid hard nofile 65536
    grid soft nproc 16384
    grid hard nproc 16384
    grid soft stack 10240
    grid hard stack 32768
    grid hard memlock 134217728
    grid soft memlock 134217728
    grid soft data unlimited
    grid hard data unlimited
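Because the cloned node inherits these files, it can be useful to confirm that a given kernel parameter is really present in the configuration file. The sketch below reads a value from a sysctl-style file; the function name sysctl_conf_value is hypothetical, and the demonstration uses a scratch file rather than the real /etc/sysctl.conf.

```shell
#!/bin/sh
# Print the value configured for a kernel parameter in a sysctl-style file.
#   $1 = file, $2 = parameter name; whitespace around "=" is ignored.
sysctl_conf_value() {
    awk -F= -v key="$2" '
        /^[[:space:]]*#/ { next }                      # skip comment lines
        {
            k = $1; gsub(/^[ \t]+|[ \t]+$/, "", k)     # trim the key
            if (k == key) {
                v = $2; gsub(/^[ \t]+|[ \t]+$/, "", v) # trim the value
                print v
            }
        }' "$1"
}

# Demonstration with a scratch file holding two of the settings listed above.
sample=$(mktemp)
printf 'fs.file-max = 6815744\nkernel.sem = 250 32000 100 128\n' > "$sample"
sysctl_conf_value "$sample" fs.file-max    # 6815744
sysctl_conf_value "$sample" kernel.sem     # 250 32000 100 128
rm -f "$sample"

# On a real node, the configured value can be compared with the live one:
#   sysctl_conf_value /etc/sysctl.conf fs.file-max
#   sysctl -n fs.file-max
```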

Name the cloned machine rac2 and select the option "Generate new MAC addresses for all network adapters". This removes the need to set MAC addresses manually after cloning.

In previous VirtualBox releases, we had to change the MAC addresses of the cloned server manually, but now new MAC addresses can be generated while cloning the server.

Click on "File" then "Virtual Media Manager".

Click on the "Create" option to create 3 OCR disks for a normal-redundancy installation.

Go to "File" and then "Virtual Media Manager" again. Click on each OCR disk and mark all 3 OCR disks as "Shareable".

Verify that all disks appear on the screen below for both nodes.

- oracleasmlib

- oracleasm-support

- kmod (this is for Red Hat Linux only)

Below are a few links to download the oracleasm and kmod packages.

- The oracleasmlib package can be downloaded from https://www.oracle.com/linux/downloads/linux-asmlib-v8-downloads.html

- Link to download RPM "oracleasm-support": https://public-yum.oracle.com/repo/OracleLinux/OL8/addons/x86_64/index.html

- Link to download kmod package https://public-yum.oracle.com/oracle-linux-8.html

Execute the commands below to install the RPM "libnsl" on both nodes.

    [root@rac1 grid]# cd /media/
    [root@rac1 media]# ll
    total 12
    drwxrwx--- 1 root vboxsf 4096 Jun 18 14:42 sf_RHEL_8.4_64-bit
    drwxrwx--- 1 root vboxsf 8192 Jun 6 18:37 sf_Software
    [root@rac1 media]# cd sf_RHEL_8.4_64-bit/
    [root@rac1 sf_RHEL_8.4_64-bit]# ll
    drwxrwx--- 1 root vboxsf 0 May 4 2021 AppStream
    drwxrwx--- 1 root vboxsf 0 May 4 2021 BaseOS
    drwxrwx--- 1 root vboxsf 0 May 4 2021 EFI
    -rwxrwx--- 1 root vboxsf 8154 May 4 2021 EULA
    -rwxrwx--- 1 root vboxsf 1455 May 4 2021 extra_files.json
    -rwxrwx--- 1 root vboxsf 18092 May 4 2021 GPL
    drwxrwx--- 1 root vboxsf 0 May 4 2021 images
    drwxrwx--- 1 root vboxsf 4096 May 4 2021 isolinux
    -rwxrwx--- 1 root vboxsf 103 May 4 2021 media.repo
    -rwxrwx--- 1 root vboxsf 10130292736 Sep 25 2021 rhel-8.4-x86_64-dvd.iso
    -rwxrwx--- 1 root vboxsf 1669 May 4 2021 RPM-GPG-KEY-redhat-beta
    -rwxrwx--- 1 root vboxsf 5134 May 4 2021 RPM-GPG-KEY-redhat-release
    -rwxrwx--- 1 root vboxsf 1796 May 4 2021 TRANS.TBL
    [root@rac1 sf_RHEL_8.4_64-bit]# pwd
    /media/sf_RHEL_8.4_64-bit
    [root@rac1 sf_RHEL_8.4_64-bit]# cp media.repo /etc/yum.repos.d/rhel7dvd.repo
    [root@rac1 sf_RHEL_8.4_64-bit]# vi /etc/yum.repos.d/rhel7dvd.repo
    [root@rac1 sf_RHEL_8.4_64-bit]# pwd
    /media/sf_RHEL_8.4_64-bit
    [root@rac1 sf_RHEL_8.4_64-bit]# ll
    drwxrwx--- 1 root vboxsf 0 May 4 2021 AppStream
    drwxrwx--- 1 root vboxsf 0 May 4 2021 BaseOS
    drwxrwx--- 1 root vboxsf 0 May 4 2021 EFI
    -rwxrwx--- 1 root vboxsf 8154 May 4 2021 EULA
    -rwxrwx--- 1 root vboxsf 1455 May 4 2021 extra_files.json
    -rwxrwx--- 1 root vboxsf 18092 May 4 2021 GPL
    drwxrwx--- 1 root vboxsf 0 May 4 2021 images
    drwxrwx--- 1 root vboxsf 4096 May 4 2021 isolinux
    -rwxrwx--- 1 root vboxsf 103 May 4 2021 media.repo
    -rwxrwx--- 1 root vboxsf 10130292736 Sep 25 2021 rhel-8.4-x86_64-dvd.iso
    -rwxrwx--- 1 root vboxsf 1669 May 4 2021 RPM-GPG-KEY-redhat-beta
    -rwxrwx--- 1 root vboxsf 5134 May 4 2021 RPM-GPG-KEY-redhat-release
    -rwxrwx--- 1 root vboxsf 1796 May 4 2021 TRANS.TBL
    [root@rac1 sf_RHEL_8.4_64-bit]# cd BaseOS/
    [root@rac1 BaseOS]# pwd
    /media/sf_RHEL_8.4_64-bit/BaseOS
    [root@rac1 BaseOS]# ll
    drwxrwx--- 1 root vboxsf 1048576 May 4 2021 Packages
    drwxrwx--- 1 root vboxsf 4096 May 4 2021 repodata
    [root@rac1 BaseOS]# cd -
    /media/sf_RHEL_8.4_64-bit
    [root@rac1 sf_RHEL_8.4_64-bit]# vi /etc/yum.repos.d/rhel7dvd.repo
    [root@rac1 sf_RHEL_8.4_64-bit]#
    [root@rac1 sf_RHEL_8.4_64-bit]# cat /etc/yum.repos.d/rhel7dvd.repo
    [InstallMedia]
    name=Red Hat Enterprise Linux 8.4.0
    mediaid=None
    metadata_expire=-1
    gpgcheck=1
    cost=500
    enabled=1
    baseurl=file:///media/sf_RHEL_8.4_64-bit/BaseOS
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
    [root@rac1 sf_RHEL_8.4_64-bit]# chmod 644 /etc/yum.repos.d/rhel7dvd.repo
    [root@rac1 sf_RHEL_8.4_64-bit]# yum install libnsl
    Updating Subscription Management repositories.
    Unable to read consumer identity
    This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.
    Red Hat Enterprise Linux 8.4.0 104 MB/s | 2.3 MB 00:00
    Dependencies resolved.
    ==============================================================================================================================================================
    Package Architecture Version Repository Size
    ==============================================================================================================================================================
    Installing:
    libnsl x86_64 2.28-151.el8 InstallMedia 102 k
    Transaction Summary
    ==============================================================================================================================================================
    Install 1 Package
    Total size: 102 k
    Installed size: 160 k
    Is this ok [y/N]: y
    Downloading Packages:
    warning: /media/sf_RHEL_8.4_64-bit/BaseOS/Packages/libnsl-2.28-151.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID fd431d51: NOKEY
    Red Hat Enterprise Linux 8.4.0 459 kB/s | 5.0 kB 00:00
    Importing GPG key 0xFD431D51:
    Userid : "Red Hat, Inc. (release key 2) <security@redhat.com>"
    Fingerprint: 567E 347A D004 4ADE 55BA 8A5F 199E 2F91 FD43 1D51
    From : /etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
    Is this ok [y/N]: y
    Key imported successfully
    Importing GPG key 0xD4082792:
    Userid : "Red Hat, Inc. (auxiliary key) <security@redhat.com>"
    Fingerprint: 6A6A A7C9 7C88 90AE C6AE BFE2 F76F 66C3 D408 2792
    From : /etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
    Is this ok [y/N]: y
    Key imported successfully
    Running transaction check
    Transaction check succeeded.
    Running transaction test
    Transaction test succeeded.
    Running transaction
    Preparing : 1/1
    Installing : libnsl-2.28-151.el8.x86_64 1/1
    Running scriptlet: libnsl-2.28-151.el8.x86_64 1/1
    Verifying : libnsl-2.28-151.el8.x86_64 1/1
    Installed products updated.
    Installed:
    libnsl-2.28-151.el8.x86_64
    Complete!

    [root@rac2 sf_RHEL_8.4_64-bit]# cp media.repo /etc/yum.repos.d/rhel7dvd.repo
    [root@rac2 sf_RHEL_8.4_64-bit]# vi /etc/yum.repos.d/rhel7dvd.repo
    [root@rac2 sf_RHEL_8.4_64-bit]# cat /etc/yum.repos.d/rhel7dvd.repo
    [InstallMedia]
    name=Red Hat Enterprise Linux 8.4.0
    mediaid=None
    metadata_expire=-1
    gpgcheck=1
    cost=500
    enabled=1
    baseurl=file:///media/sf_RHEL_8.4_64-bit/BaseOS
    gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
    [root@rac2 sf_RHEL_8.4_64-bit]# chmod 644 /etc/yum.repos.d/rhel7dvd.repo
    [root@rac2 sf_RHEL_8.4_64-bit]# yum install libnsl
    Updating Subscription Management repositories.
    Unable to read consumer identity
    This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.
    Red Hat Enterprise Linux 8.4.0 120 MB/s | 2.3 MB 00:00
    Dependencies resolved.
    ==============================================================================================================================================================
    Package Architecture Version Repository Size
    ==============================================================================================================================================================
    Installing:
    libnsl x86_64 2.28-151.el8 InstallMedia 102 k
    Transaction Summary
    ==============================================================================================================================================================
    Install 1 Package
    Total size: 102 k
    Installed size: 160 k
    Is this ok [y/N]: y
    Downloading Packages:
    warning: /media/sf_RHEL_8.4_64-bit/BaseOS/Packages/libnsl-2.28-151.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID fd431d51: NOKEY
    Red Hat Enterprise Linux 8.4.0 257 kB/s | 5.0 kB 00:00
    Importing GPG key 0xFD431D51:
    Userid : "Red Hat, Inc. (release key 2) <security@redhat.com>"
    Fingerprint: 567E 347A D004 4ADE 55BA 8A5F 199E 2F91 FD43 1D51
    From : /etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
    Is this ok [y/N]: y
    Key imported successfully
    Importing GPG key 0xD4082792:
    Userid : "Red Hat, Inc. (auxiliary key) <security@redhat.com>"
    Fingerprint: 6A6A A7C9 7C88 90AE C6AE BFE2 F76F 66C3 D408 2792
    From : /etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release
    Is this ok [y/N]: y
    Key imported successfully
    Running transaction check
    Transaction check succeeded.
    Running transaction test
    Transaction test succeeded.
    Running transaction
    Preparing : 1/1
    Installing : libnsl-2.28-151.el8.x86_64 1/1
    Running scriptlet: libnsl-2.28-151.el8.x86_64 1/1
    Verifying : libnsl-2.28-151.el8.x86_64 1/1
    Installed products updated.
    Installed:
    libnsl-2.28-151.el8.x86_64
    Complete!

Install RPM "cvuqdisk":

    [grid@rac1 rpm]$ su -
    Password:
    [root@rac1 ~]# cd /u01/app/21.0.0/grid/cv
    cv/ cvu/
    [root@rac1 ~]# cd /u01/app/21.0.0/grid/cv/r
    remenv/ rpm/
    [root@rac1 ~]# cd /u01/app/21.0.0/grid/cv/rpm/
    [root@rac1 rpm]# pwd
    /u01/app/21.0.0/grid/cv/rpm
    [root@rac1 rpm]# ll
    -rw-r--r-- 1 grid oinstall 11904 Jul 8 2021 cvuqdisk-1.0.10-1.rpm
    [root@rac1 rpm]# rpm -ivh cvuqdisk-1.0.10-1.rpm
    Verifying... ################################# [100%]
    Preparing... ################################# [100%]
    Using default group oinstall to install package
    Updating / installing...
    1:cvuqdisk-1.0.10-1 ################################# [100%]
    [root@rac1 rpm]# scp cvuqdisk-1.0.10-1.rpm grid@rac2:/tmp/
    The authenticity of host 'rac2 (10.20.30.102)' can't be established.
    ECDSA key fingerprint is SHA256:paSUsPHPoUwF04C4TJffskwngg82TS389hoEYRvbWJ4.
    Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
    Warning: Permanently added 'rac2,10.20.30.102' (ECDSA) to the list of known hosts.
    grid@rac2's password:
    [root@rac1 rpm]# scp cvuqdisk-1.0.10-1.rpm grid@rac2:/tmp/^C
    [root@rac1 rpm]# scp cvuqdisk-1.0.10-1.rpm root@rac2:/tmp/
    root@rac2's password:
    cvuqdisk-1.0.10-1.rpm 100% 12KB 14.6MB/s 00:00

    [root@rac2 ~]# cd /tmp
    [root@rac2 tmp]# ll
    -rw-r--r--. 1 root root 2956 Jun 18 11:22 anaconda.log
    drwxr-x--- 3 grid oinstall 4096 Jun 18 23:11 CVU_21.0.0.0.0_grid
    -rw-r--r-- 1 root root 11904 Jun 18 23:13 cvuqdisk-1.0.10-1.rpm
    -rw-r--r--. 1 root root 2286 Jun 18 11:20 dbus.log
    -rw-r--r--. 1 root root 0 Jun 18 11:20 dnf.librepo.log
    drwxr-xr-x. 2 root root 19 Jun 18 11:10 hsperfdata_root
    -rwx------. 1 root root 701 Jun 18 11:19 ks-script-7ob6m6ob
    -rwx------. 1 root root 291 Jun 18 11:19 ks-script-9ryflhsk
    -rw-r--r--. 1 root root 0 Jun 18 11:20 packaging.log
    -rw-r--r--. 1 root root 131 Jun 18 11:20 program.log
    -rw-r--r--. 1 root root 0 Jun 18 11:20 sensitive-info.log
    drwx------ 3 root root 17 Jun 18 22:49 systemd-private-618118b2a7c04baca8d32b7487e3a26d-colord.service-Kt0f9i
    drwx------ 3 root root 17 Jun 18 23:13 systemd-private-618118b2a7c04baca8d32b7487e3a26d-fprintd.service-h0qY6i
    drwx------ 3 root root 17 Jun 18 22:55 systemd-private-618118b2a7c04baca8d32b7487e3a26d-fwupd.service-X9p7Kh
    drwx------ 3 root root 17 Jun 18 22:47 systemd-private-618118b2a7c04baca8d32b7487e3a26d-ModemManager.service-Mkm12g
    drwx------ 3 root root 17 Jun 18 22:47 systemd-private-618118b2a7c04baca8d32b7487e3a26d-rtkit-daemon.service-YUTjcf
    drwx------. 2 rupesh rupesh 6 Jun 18 14:52 tracker-extract-files.1000
    drwx------ 2 grid oinstall 6 Jun 18 22:55 tracker-extract-files.1001
    -rw-r--r--. 1 root root 25965 Jun 18 11:29 vboxguest-Module.symvers
    [root@rac2 tmp]#
    [root@rac2 tmp]# rpm -ivh cvuqdisk-1.0.10-1.rpm
    Verifying... ################################# [100%]
    Preparing... ################################# [100%]
    Using default group oinstall to install package
    Updating / installing...
    1:cvuqdisk-1.0.10-1 ################################# [100%]

Node1:

    [root@rac1 Oracle 21c]# oracleasm configure -i
    Configuring the Oracle ASM library driver.
    This will configure the on-boot properties of the Oracle ASM library
    driver. The following questions will determine whether the driver is
    loaded on boot and what permissions it will have. The current values
    will be shown in brackets ('[]'). Hitting <ENTER> without typing an
    answer will keep that current value. Ctrl-C will abort.
    Default user to own the driver interface []: grid
    Default group to own the driver interface []: oinstall
    Start Oracle ASM library driver on boot (y/n) [n]: y
    Scan for Oracle ASM disks on boot (y/n) [y]: y
    Writing Oracle ASM library driver configuration: done
    [root@rac1 Oracle 21c]# oracleasm init
    Creating /dev/oracleasm mount point: /dev/oracleasm
    Loading module "oracleasm": oracleasm
    Configuring "oracleasm" to use device physical block size
    Mounting ASMlib driver filesystem: /dev/oracleasm

Node2:

    [root@rac2 Oracle 21c]# oracleasm configure -i
    Configuring the Oracle ASM library driver.
    This will configure the on-boot properties of the Oracle ASM library
    driver. The following questions will determine whether the driver is
    loaded on boot and what permissions it will have. The current values
    will be shown in brackets ('[]'). Hitting <ENTER> without typing an
    answer will keep that current value. Ctrl-C will abort.
    Default user to own the driver interface []: grid
    Default group to own the driver interface []: oinstall
    Start Oracle ASM library driver on boot (y/n) [n]: y
    Scan for Oracle ASM disks on boot (y/n) [y]: y
    Writing Oracle ASM library driver configuration: done
    [root@rac2 Oracle 21c]# oracleasm init
    Creating /dev/oracleasm mount point: /dev/oracleasm
    Loading module "oracleasm": oracleasm
    Configuring "oracleasm" to use device physical block size
    Mounting ASMlib driver filesystem: /dev/oracleasm

Note: Partition the devices from any one node. No need to partition devices on all nodes.

    [rupesh@rac1 ~]$ su -
    Password:
    [root@rac1 ~]# fdisk -l
    Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/sda: 35 GiB, 37580963840 bytes, 73400320 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x81d908ce

    Device Boot Start End Sectors Size Id Type
    /dev/sda1 * 2048 2099199 2097152 1G 83 Linux
    /dev/sda2 2099200 73400319 71301120 34G 8e Linux LVM

    Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/sdd: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/mapper/rhel-root: 30.5 GiB, 32744931328 bytes, 63954944 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/mapper/rhel-swap: 3.5 GiB, 3758096384 bytes, 7340032 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    [root@rac1 ~]# fdisk -l /dev/sdb
    Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    [root@rac1 ~]# fdisk -l /dev/sdc
    Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    [root@rac1 ~]# fdisk -l /dev/sdd
    Disk /dev/sdd: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    [root@rac1 ~]# fdisk /dev/sdb
    Welcome to fdisk (util-linux 2.32.1).
    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.
    Device does not contain a recognized partition table.
    Created a new DOS disklabel with disk identifier 0x6f9ec9f0.
    Command (m for help): m
    Help:
      DOS (MBR)
       a   toggle a bootable flag
       b   edit nested BSD disklabel
       c   toggle the dos compatibility flag
      Generic
       d   delete a partition
       F   list free unpartitioned space
       l   list known partition types
       n   add a new partition
       p   print the partition table
       t   change a partition type
       v   verify the partition table
       i   print information about a partition
      Misc
       m   print this menu
       u   change display/entry units
       x   extra functionality (experts only)
      Script
       I   load disk layout from sfdisk script file
       O   dump disk layout to sfdisk script file
      Save & Exit
       w   write table to disk and exit
       q   quit without saving changes
      Create a new label
       g   create a new empty GPT partition table
       G   create a new empty SGI (IRIX) partition table
       o   create a new empty DOS partition table
       s   create a new empty Sun partition table
    Command (m for help): n
    Partition type
       p   primary (0 primary, 0 extended, 4 free)
       e   extended (container for logical partitions)
    Select (default p): p
    Partition number (1-4, default 1):
    First sector (2048-20971519, default 2048):
    Last sector, +sectors or +size{K,M,G,T,P} (2048-20971519, default 20971519):
    Created a new partition 1 of type 'Linux' and of size 10 GiB.
    Command (m for help): w
    The partition table has been altered.
    Calling ioctl() to re-read partition table.
    Syncing disks.

    [root@rac1 ~]# fdisk /dev/sdc
    Welcome to fdisk (util-linux 2.32.1).
    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.
    Device does not contain a recognized partition table.
    Created a new DOS disklabel with disk identifier 0xe7c7d1b9.
    Command (m for help): n
    Partition type
       p   primary (0 primary, 0 extended, 4 free)
       e   extended (container for logical partitions)
    Select (default p): p
    Partition number (1-4, default 1):
    First sector (2048-20971519, default 2048):
    Last sector, +sectors or +size{K,M,G,T,P} (2048-20971519, default 20971519):
    Created a new partition 1 of type 'Linux' and of size 10 GiB.
    Command (m for help): w
    The partition table has been altered.
    Calling ioctl() to re-read partition table.
    Syncing disks.

    [root@rac1 ~]# fdisk /dev/sdd
    Welcome to fdisk (util-linux 2.32.1).
    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.
    Device does not contain a recognized partition table.
    Created a new DOS disklabel with disk identifier 0x0541b9c6.
    Command (m for help): n
    Partition type
       p   primary (0 primary, 0 extended, 4 free)
       e   extended (container for logical partitions)
    Select (default p): p
    Partition number (1-4, default 1):
    First sector (2048-20971519, default 2048):
    Last sector, +sectors or +size{K,M,G,T,P} (2048-20971519, default 20971519):
    Created a new partition 1 of type 'Linux' and of size 10 GiB.
    Command (m for help): w
    The partition table has been altered.
    Calling ioctl() to re-read partition table.
    Syncing disks.

    [root@rac1 ~]# fdisk -l /dev/sdb
    Disk /dev/sdb: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x6f9ec9f0
    Device Boot Start End Sectors Size Id Type
    /dev/sdb1 2048 20971519 20969472 10G 83 Linux
    [root@rac1 ~]# fdisk -l /dev/sdc
    Disk /dev/sdc: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xe7c7d1b9
    Device Boot Start End Sectors Size Id Type
    /dev/sdc1 2048 20971519 20969472 10G 83 Linux
    [root@rac1 ~]# fdisk -l /dev/sdd
    Disk /dev/sdd: 10 GiB, 10737418240 bytes, 20971520 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0x0541b9c6
    Device Boot Start End Sectors Size Id Type
    /dev/sdd1 2048 20971519 20969472 10G 83 Linux

    [root@rac1 Oracle 21c]# id
    uid=0(root) gid=0(root) groups=0(root)
    [root@rac1 Oracle 21c]# oracleasm createdisk OCRDISK1 /dev/sdb1
    Writing disk header: done
    Instantiating disk: done
    [root@rac1 Oracle 21c]# oracleasm createdisk OCRDISK2 /dev/sdc1
    Writing disk header: done
    Instantiating disk: done
    [root@rac1 Oracle 21c]# oracleasm createdisk OCRDISK3 /dev/sdd1
    Writing disk header: done
    Instantiating disk: done
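Since the disks are created from one node only, the other node normally has to rescan before it sees the labels. The sketch below assumes the standard `oracleasm scandisks` and `oracleasm listdisks` subcommands and checks a listing for the three labels created above. The helper name missing_asm_disks is hypothetical, and the demonstration uses a canned listing instead of calling oracleasm.

```shell
#!/bin/sh
# Print every expected ASM disk label that is absent from a disk listing.
#   $1 = listing, one label per line (the format printed by "oracleasm listdisks")
#   remaining arguments = expected labels
missing_asm_disks() (
    listing=$1
    shift
    for label in "$@"; do
        # -x matches whole lines only, so OCRDISK1 does not match OCRDISK10
        printf '%s\n' "$listing" | grep -qx "$label" || echo "$label"
    done
)

# Demonstration with a canned listing in which OCRDISK3 has not shown up yet.
missing_asm_disks "OCRDISK1
OCRDISK2" OCRDISK1 OCRDISK2 OCRDISK3    # prints OCRDISK3

# On rac2 (as root) the real check would be along the lines of:
#   oracleasm scandisks
#   missing_asm_disks "$(oracleasm listdisks)" OCRDISK1 OCRDISK2 OCRDISK3
```

An empty result means all expected labels are visible on that node.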

# SSH configuration for the grid user on both nodes:

Node1:
[grid@rac1 ~]$ rm -rf .ssh
[grid@rac1 ~]$ mkdir .ssh
[grid@rac1 ~]$ chmod 700 .ssh
[grid@rac1 ~]$ cd .ssh
[grid@rac1 .ssh]$ ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/home/grid/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/grid/.ssh/id_rsa.
Your public key has been saved in /home/grid/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:Wh0g51+87cEgTuPsV4xfYUr8OwDkFzOPCLCp6/9xmPQ grid@rac1.localdomain
The key's randomart image is:
+---[RSA 3072]----+
| ..+. . + |
| +o.+.o * |
| o. =++= + |
| . B =+B+ .|
| . S.* oo*..|
| .o..+ +.o.|
| .. +.E. oo |
| . o. .|
| ..... |
+----[SHA256]-----+
[grid@rac1 .ssh]$ ssh-keygen -t dsa
Generating public/private dsa key pair.
Enter file in which to save the key (/home/grid/.ssh/id_dsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/grid/.ssh/id_dsa.
Your public key has been saved in /home/grid/.ssh/id_dsa.pub.
The key fingerprint is:
SHA256://6QZ2gvCr4xk8Bhm+djuO7kkIc8jVgSo+rEQ0YqAQ8 grid@rac1.localdomain
The key's randomart image is:
+---[DSA 1024]----+
|E |
|.o |
|..+ o |
|oo o o + |
|+o. . = S |
|* = = = o o |
|.+. B = X . = o |
|o . * + * o.= |
| . o= o..oooo |
+----[SHA256]-----+
[grid@rac1 .ssh]$ cat *.pub >> authorized_keys.rac1
[grid@rac1 .ssh]$ ll
-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac1
-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa
-rw-r--r-- 1 grid oinstall  611 Jun 18 22:59 id_dsa.pub
-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa
-rw-r--r-- 1 grid oinstall  575 Jun 18 22:58 id_rsa.pub
[grid@rac1 .ssh]$ scp authorized_keys.rac1 grid@rac2:/home/grid/.ssh/
The authenticity of host 'rac2 (10.20.30.102)' can't be established.
ECDSA key fingerprint is SHA256:paSUsPHPoUwF04C4TJffskwngg82TS389hoEYRvbWJ4.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added 'rac2,10.20.30.102' (ECDSA) to the list of known hosts.
grid@rac2's password:
authorized_keys.rac1 100% 1186 1.6MB/s 00:00
[grid@rac1 .ssh]$ ll
-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac1
-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac2
-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa
-rw-r--r-- 1 grid oinstall  611 Jun 18 22:59 id_dsa.pub
-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa
-rw-r--r-- 1 grid oinstall  575 Jun 18 22:58 id_rsa.pub
-rw-r--r-- 1 grid oinstall  179 Jun 18 23:00 known_hosts
[grid@rac1 .ssh]$ cd $HOME/.ssh
[grid@rac1 .ssh]$ cat *.rac* >> authorized_keys
[grid@rac1 .ssh]$ chmod 600 authorized_keys
[grid@rac1 .ssh]$ ll
-rw------- 1 grid oinstall 2372 Jun 18 23:01 authorized_keys
-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac1
-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac2
-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa
-rw-r--r-- 1 grid oinstall  611 Jun 18 22:59 id_dsa.pub
-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa
-rw-r--r-- 1 grid oinstall  575 Jun 18 22:58 id_rsa.pub
-rw-r--r-- 1 grid oinstall  179 Jun 18 23:00 known_hosts

Node2:
[grid@rac2 ~]$ cd $HOME
[grid@rac2 ~]$ pwd
/home/grid
[grid@rac2 ~]$ mkdir .ssh
[grid@rac2 ~]$ chmod 700 .ssh
[grid@rac2 ~]$ cd .ssh
[grid@rac2 .ssh]$ ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/home/grid/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/grid/.ssh/id_rsa.
Your public key has been saved in /home/grid/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:KEuM3Mq0C0JFkbRyAKlVVU96WRoNeCtVdJrtoZNoK5E grid@rac2.localdomain
The key's randomart image is:
+---[RSA 3072]----+
|oo.=+.....+=+ . |
|. +.. .+o=.= |
|.o + .o=.o o |
|..++ ..o.. + . |
| .+ = . E.o + . |
|.o + o o . . |
|o + . . . |
|.. . . |
| . |
+----[SHA256]-----+
[grid@rac2 .ssh]$ ssh-keygen -t dsa
Generating public/private dsa key pair.
Enter file in which to save the key (/home/grid/.ssh/id_dsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/grid/.ssh/id_dsa.
Your public key has been saved in /home/grid/.ssh/id_dsa.pub.
The key fingerprint is:
SHA256:QcEIHs/2Pqdwvrk458WufjiEcFfjD1SZpt00s5/n4Yw grid@rac2.localdomain
The key's randomart image is:
+---[DSA 1024]----+
| o. oo. ..o |
| . +... + + + |
| . + .+ = o + |
| ......+ . o |
| o oS o ..|
| .... . oo|
| ..+.+ +.o|
| .=+B. E o.|
| .=X*. |
+----[SHA256]-----+
[grid@rac2 .ssh]$ cat *.pub >> authorized_keys.rac2
[grid@rac2 .ssh]$ ll
-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac2
-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa
-rw-r--r-- 1 grid oinstall  611 Jun 18 22:59 id_dsa.pub
-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa
-rw-r--r-- 1 grid oinstall  575 Jun 18 22:58 id_rsa.pub
-rw-r--r-- 1 grid oinstall  179 Jun 18 22:57 known_hosts
[grid@rac2 .ssh]$ ll
-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac1
-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac2
-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa
-rw-r--r-- 1 grid oinstall  611 Jun 18 22:59 id_dsa.pub
-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa
-rw-r--r-- 1 grid oinstall  575 Jun 18 22:58 id_rsa.pub
-rw-r--r-- 1 grid oinstall  179 Jun 18 22:57 known_hosts
[grid@rac2 .ssh]$ scp authorized_keys.rac2 grid@rac1:/home/grid/.ssh/
grid@rac1's password:
authorized_keys.rac2 100% 1186 2.0MB/s 00:00
[grid@rac2 .ssh]$ ll
-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac1
-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac2
-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa
-rw-r--r-- 1 grid oinstall  611 Jun 18 22:59 id_dsa.pub
-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa
-rw-r--r-- 1 grid oinstall  575 Jun 18 22:58 id_rsa.pub
-rw-r--r-- 1 grid oinstall  179 Jun 18 22:57 known_hosts
[grid@rac2 .ssh]$ cd $HOME/.ssh
[grid@rac2 .ssh]$ cat *.rac* >> authorized_keys
[grid@rac2 .ssh]$ chmod 600 authorized_keys
[grid@rac2 .ssh]$ ll
-rw------- 1 grid oinstall 2372 Jun 18 23:01 authorized_keys
-rw-r--r-- 1 grid oinstall 1186 Jun 18 23:00 authorized_keys.rac1
-rw-r--r-- 1 grid oinstall 1186 Jun 18 22:59 authorized_keys.rac2
-rw------- 1 grid oinstall 1393 Jun 18 22:59 id_dsa
-rw-r--r-- 1 grid oinstall  611 Jun 18 22:59 id_dsa.pub
-rw------- 1 grid oinstall 2610 Jun 18 22:58 id_rsa
-rw-r--r-- 1 grid oinstall  575 Jun 18 22:58 id_rsa.pub
-rw-r--r-- 1 grid oinstall  179 Jun 18 22:57 known_hosts

[grid@rac1 .ssh]$ ssh rac1
The authenticity of host 'rac1 (10.20.30.101)' can't be established.
ECDSA key fingerprint is SHA256:paSUsPHPoUwF04C4TJffskwngg82TS389hoEYRvbWJ4.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added 'rac1,10.20.30.101' (ECDSA) to the list of known hosts.
Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/
To register this system, run: insights-client --register

Last login: Sat Jun 18 22:57:45 2022 from 10.20.30.102
[grid@rac1 ~]$ ssh rac2
Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/
To register this system, run: insights-client --register

Last login: Sat Jun 18 22:57:33 2022 from 10.20.30.101
[grid@rac2 ~]$ ssh rac1
Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/
To register this system, run: insights-client --register

Last login: Sat Jun 18 23:03:35 2022 from 10.20.30.101
[grid@rac2 .ssh]$ ssh rac2
The authenticity of host 'rac2 (10.20.30.102)' can't be established.
ECDSA key fingerprint is SHA256:paSUsPHPoUwF04C4TJffskwngg82TS389hoEYRvbWJ4.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added 'rac2,10.20.30.102' (ECDSA) to the list of known hosts.
Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/
To register this system, run: insights-client --register

Last login: Sat Jun 18 23:03:37 2022 from 10.20.30.101
[grid@rac2 ~]$ ssh rac1
Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/
To register this system, run: insights-client --register

Last login: Sat Jun 18 23:03:46 2022 from 10.20.30.102
[grid@rac1 ~]$
[grid@rac1 ~]$ ssh rac2
Activate the web console with: systemctl enable --now cockpit.socket

This system is not registered to Red Hat Insights. See https://cloud.redhat.com/
To register this system, run: insights-client --register

Last login: Sat Jun 18 23:04:00 2022 from 10.20.30.102
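The manual ssh logins above confirm that each node can reach the other without a password. The same check can be scripted, which is handy to repeat just before the installer runs. This is a minimal sketch, not part of the original procedure: the function name check_ssh_equivalence and the SSH override variable are hypothetical, while BatchMode and ConnectTimeout are standard OpenSSH client options. With BatchMode=yes, ssh fails instead of prompting, so a host whose key has not yet been accepted into known_hosts is reported as FAIL.

```shell
# Report whether passwordless SSH works to every host given as an argument.
# check_ssh_equivalence and the SSH variable are hypothetical names;
# SSH lets you substitute another client binary (defaults to ssh).
check_ssh_equivalence() {
    ssh_cmd=${SSH:-ssh}
    failed=0
    for host in "$@"; do
        # BatchMode=yes: never prompt for a password or host key confirmation.
        if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}

# Example call, run as the grid user on each node:
#   check_ssh_equivalence rac1 rac2
```

A non-zero return status means at least one host still asks for a password or a host key confirmation, and the key exchange above should be rechecked for that host.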

Step 10: GRID Installation and configuration

1) Copy the Grid Infrastructure software to the GRID_HOME location on the target server, because the software ships as a gold image. Log in as the grid user and unzip the GRID setup files there; this gives you the complete home binaries in GRID_HOME.

2) Run cluvfy and fix any failed checks.

3) Start the GRID installation with the patch.
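The three steps above can be sketched as a short script. Everything specific in it is an assumption to adjust: the GRID_HOME path, the zip file name and location, the patch directory, and the choice of gridSetup.sh -applyRU as the way to install "with patch". The script prints each command by default and only executes when DRY_RUN=0 is set, so it is safe to run first as a preview.

```shell
# Assumed locations; none of these paths come from the original setup.
GRID_HOME=${GRID_HOME:-/u01/app/21.0.0/grid}
GRID_ZIP=${GRID_ZIP:-/software/LINUX.X64_213000_grid_home.zip}
PATCH_DIR=${PATCH_DIR:-/software/patch}
NODES=${NODES:-rac1,rac2}

# Print each command by default; set DRY_RUN=0 to really execute it.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

grid_install_steps() {
    # 1) Unzip the gold image directly into GRID_HOME (as the grid user).
    run unzip -q "$GRID_ZIP" -d "$GRID_HOME"
    # 2) Pre-installation cluster verification across both nodes.
    run "$GRID_HOME/runcluvfy.sh" stage -pre crsinst -n "$NODES" -verbose
    # 3) Launch the installer, applying the release update first (assumed).
    run "$GRID_HOME/gridSetup.sh" -applyRU "$PATCH_DIR"
}

grid_install_steps
```

In practice the steps are run one at a time rather than in a single pass, since any failed cluvfy check has to be fixed before the installer is started.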

Keep all software and RPM files in one common folder and make it a shared folder.